Introduction to Parallelism

1 Reading

Grossman 2.1–3.4

2 Why should you care about parallel computing?

- what you already know: one-thing-at-a-time or sequential programming

- Removing this assumption (multiple threads of execution or multithreaded programming) will make writing correct software much more complicated, so why do it?

- Gotta go fast!

- responsiveness, I/O latency, failure isolation

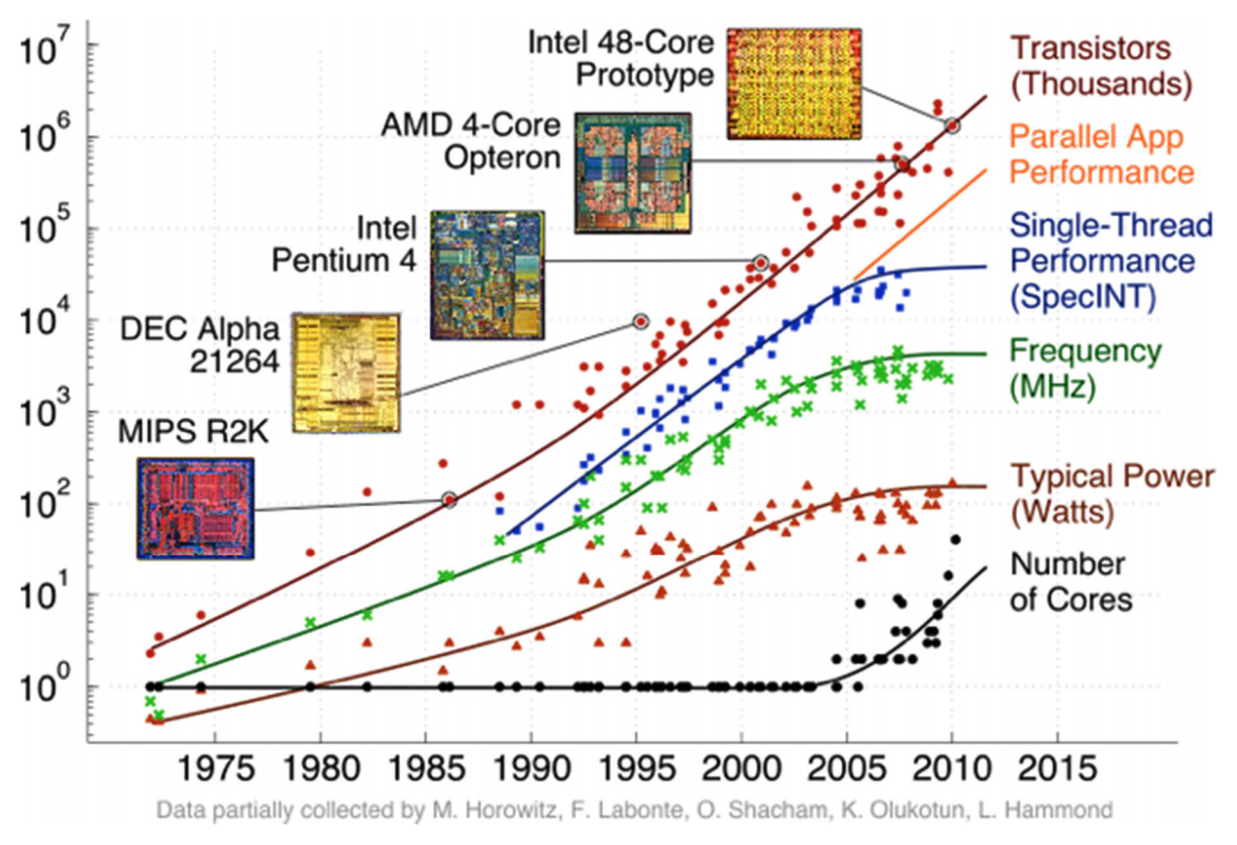

- parallel computing has been around since the 1950s, but the switch from increasing clock rates to putting multiple processors on each chip means that software must be multithreaded to take advantage of better hardware

3 parallelism vs concurrency

- parallelism: slicing potatoes, divide up the work (but stops helping at some point)

- work → many resources

- concurrency: shared resources such as an oven or stove burners, need to coordinate access to them

- many requests → resource

- given a stack, would the following situation primarily involve parallelism or concurrency?

- multiple threads pushing elements onto the stack

- multiple threads searching different parts of the stack to check if it contains an element e

- one thread pops from the stack at the same time another thread peeks

- distinction not absolute, parallel programs will often involve both

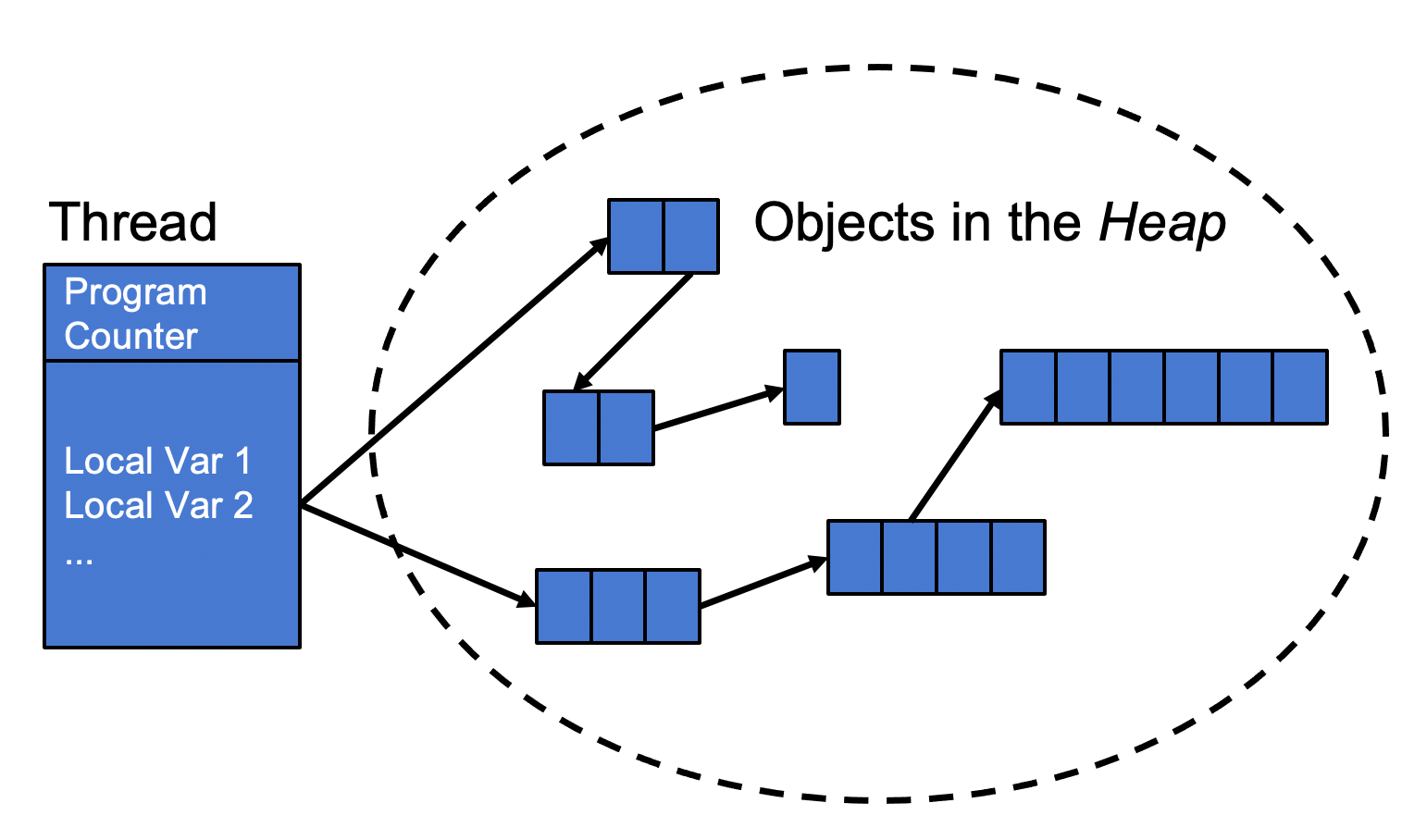
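As a rough illustration of the concurrency side (many requests, one resource), the sketch below has several threads pushing onto one shared stack. The class name SharedStackDemo, the helper runDemo, and the use of Java's synchronized keyword to coordinate access are assumptions made for this example; they are not taken from the notes or the reading.

```java
import java.util.ArrayDeque;
import java.util.Deque;

public class SharedStackDemo {
    // The shared resource: one stack that every thread pushes onto.
    private final Deque<Integer> stack = new ArrayDeque<>();

    // synchronized: at most one thread at a time runs these methods on this object.
    synchronized void push(int x) { stack.push(x); }
    synchronized int size() { return stack.size(); }

    // Hypothetical helper: start numThreads threads that each push pushesPerThread items.
    static int runDemo(int numThreads, int pushesPerThread) {
        SharedStackDemo shared = new SharedStackDemo();
        Thread[] threads = new Thread[numThreads];
        for (int t = 0; t < numThreads; t++) {
            threads[t] = new Thread(() -> {
                for (int i = 0; i < pushesPerThread; i++) {
                    shared.push(i);
                }
            });
            threads[t].start();
        }
        try {
            for (Thread th : threads) {
                th.join(); // wait for every pusher to finish
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return shared.size();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(4, 1000)); // prints 4000
    }
}
```

Without the coordination on push, simultaneous pushes could interfere with each other, which is why this scenario is mostly about concurrency rather than about dividing up work.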

4 thread shared memory model

- a thread is a program running sequentially; multiple threads can run simultaneously

- OS controls assignment of active threads to processors, ensures threads take turns

- called scheduling, major topic in operating systems

- need a way for threads to communicate

4.1 old story

- program counter (currently executing statement), local variables

- objects in the heap (i.e., those created by new)

- not to be confused with the heap data structure

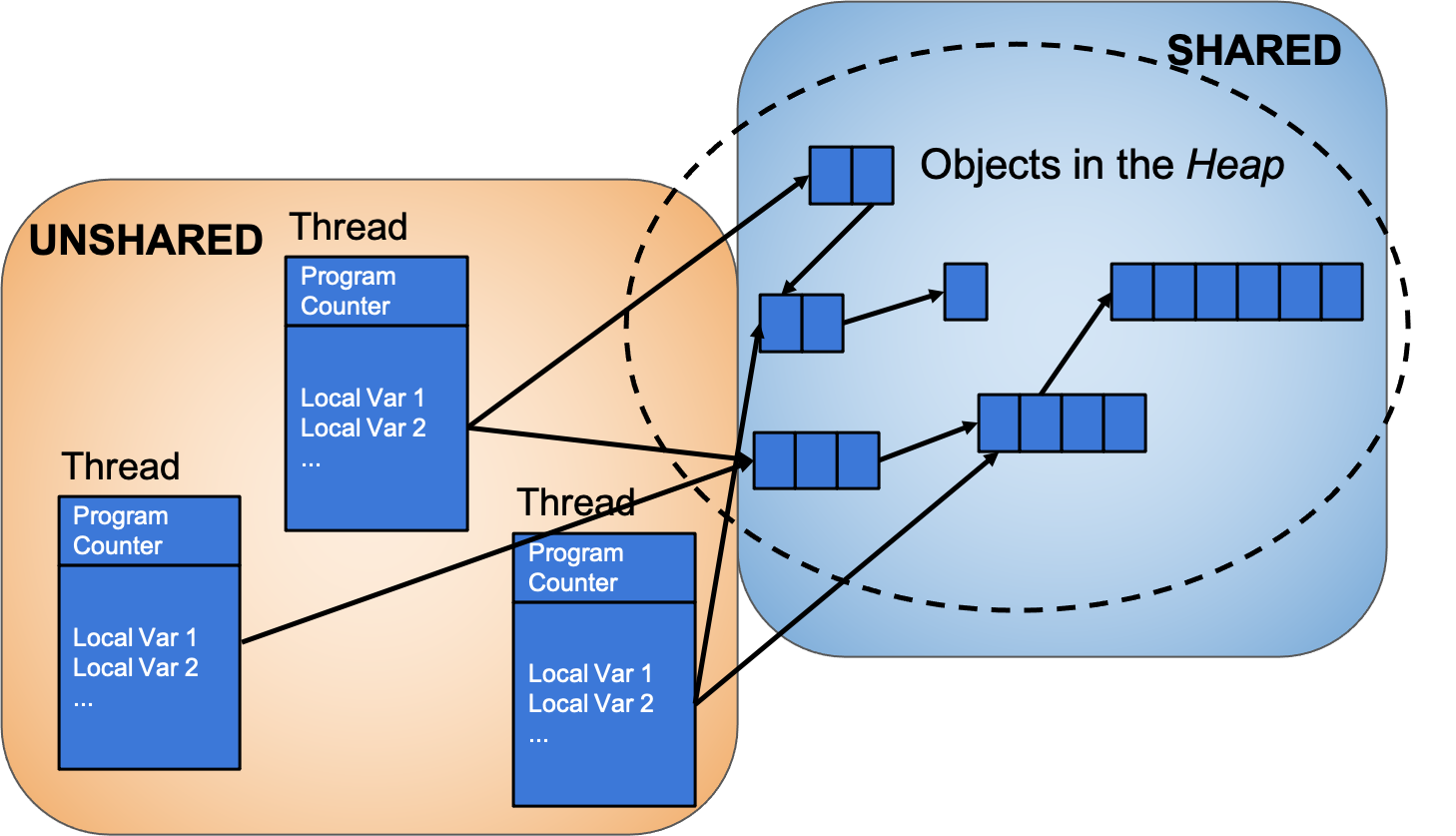

4.2 new story

- many threads, shared heap

- communicate by writing to a shared location in the heap that another thread reads from

- not the only model, but a common and very useful one
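A minimal sketch of this communication pattern in Java: one thread writes a field of a heap object and another thread reads it afterwards. The class names SharedHeapDemo and Box and the helper runDemo are hypothetical, chosen only for illustration.

```java
public class SharedHeapDemo {
    // Hypothetical container: a single instance lives in the heap and is shared.
    static class Box {
        int value;
    }

    static int runDemo() {
        Box shared = new Box(); // created by new, so it lives in the shared heap
        Thread writer = new Thread(() -> {
            int local = 41;            // local variable: private to the writer thread
            shared.value = local + 1;  // write to a shared heap location
        });
        writer.start();
        try {
            writer.join(); // wait for the writer; after join its write is visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return shared.value; // the calling thread reads what the writer wrote
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints 42
    }
}
```

Each thread keeps its own program counter and local variables, while the Box object is reachable from both threads.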

5 divide-and-conquer parallelism

- first attempt: hard-code one thread per processor

- problems?

- portability (different computers will have different numbers of processors)

- flexibility (available processors may change)

- load imbalance (some pieces of work could take a lot longer than others)

- solution: use many more threads than processors

- whatever processors are available can always be working through a big pile of threads, and small chunks of work greatly reduce the chance of serious load imbalance

- recursively subdivide and compute subparts in parallel to sum array elements in some range low to high

- sketch out recursive algorithm

- if range contains only one element, return it as the sum

- else, in parallel: recursively sum low to middle and middle to high

- add the results from the two parallel recursive sums

- for an array of size n, how many levels of subdivision? \(\log n\)

- in theory, optimal speedup gets us to \(O(\log n + n/P)\) time, where \(P\) is the number of processors

- need to avoid overhead of creating new threads to sum tons of very small ranges

- sequential cutoff
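One way to see where the \(O(\log n + n/P)\) bound comes from is to separate the total work from the longest chain of dependent steps. The sketch below assumes constant cost to split and combine, ignores the sequential cutoff, and assumes a scheduler that keeps processors busy whenever work is available. The symbols \(T_1\) (time on one processor), \(T_\infty\) (time with unlimited processors), and \(T_P\) (time on \(P\) processors) are notation introduced here, not taken from the notes.

\[
\begin{aligned}
T_1(n) &= 2\,T_1(n/2) + O(1) = O(n) \\
T_\infty(n) &= T_\infty(n/2) + O(1) = O(\log n) \\
T_P(n) &= O\!\left(\frac{T_1(n)}{P} + T_\infty(n)\right) = O\!\left(\frac{n}{P} + \log n\right)
\end{aligned}
\]

The \(n/P\) term is the work shared evenly among processors, and the \(\log n\) term is the depth of the recursion, which no number of processors can shorten.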

6 Fork-Join Framework

- to actually implement parallelism in Java (and many programming languages), we use two fundamental operations:

- fork: split off a new thread from the current one

- join: wait for a thread to finish

```
/* Fork-join pseudocode, not the actual (messier) Java;
   see the reading for how creating threads looks in Java. */
public int parallelSum(int[] arr, int lo, int hi) {
    if (hi - lo < SEQUENTIAL_THRESHOLD) {
        /* compute sequential sum */
        return result;
    } else {
        Thread left = fork(parallelSum(arr, lo, (lo + hi) / 2));
        Thread right = fork(parallelSum(arr, (lo + hi) / 2, hi));
        int leftAns = left.join();
        int rightAns = right.join();
        return leftAns + rightAns;
    }
}
```

- This is similar to the recursive subdivision we saw in mergesort (repeatedly dividing input in half until we reach a base case)

- Key difference is that we are processing each half simultaneously
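For comparison with the pseudocode, here is one possible runnable version using the standard java.util.concurrent classes ForkJoinPool and RecursiveTask. It is a sketch under assumptions, not necessarily how the reading writes it: the class name ParallelSum, the threshold value of 1000, and the long result type are choices made for this example. It also forks only the left half and computes the right half in the current thread, a common variant of forking both halves.

```java
import java.util.Arrays;
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

public class ParallelSum extends RecursiveTask<Long> {
    // Assumed cutoff; a good value depends on the machine and the workload.
    static final int SEQUENTIAL_THRESHOLD = 1000;

    private final int[] arr;
    private final int lo; // inclusive
    private final int hi; // exclusive

    ParallelSum(int[] arr, int lo, int hi) {
        this.arr = arr;
        this.lo = lo;
        this.hi = hi;
    }

    @Override
    protected Long compute() {
        if (hi - lo < SEQUENTIAL_THRESHOLD) {
            // Small range: sum sequentially to avoid task-creation overhead.
            long sum = 0;
            for (int i = lo; i < hi; i++) {
                sum += arr[i];
            }
            return sum;
        }
        int mid = lo + (hi - lo) / 2;
        ParallelSum left = new ParallelSum(arr, lo, mid);
        ParallelSum right = new ParallelSum(arr, mid, hi);
        left.fork();                     // fork: run the left half asynchronously
        long rightAns = right.compute(); // do the right half in the current thread
        long leftAns = left.join();      // join: wait for the left half to finish
        return leftAns + rightAns;
    }

    // Convenience entry point: sum the whole array on the common pool.
    static long sum(int[] arr) {
        return ForkJoinPool.commonPool().invoke(new ParallelSum(arr, 0, arr.length));
    }

    public static void main(String[] args) {
        int[] data = new int[1_000_000];
        Arrays.fill(data, 1);
        System.out.println(sum(data)); // prints 1000000
    }
}
```

The tasks here are lighter than full threads: the pool runs many small tasks on a limited number of worker threads, which matches the idea of using far more pieces of work than processors.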

7 Example

Arrays.sort vs Arrays.parallelSort
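A small sketch for trying the comparison yourself. Both methods are part of java.util.Arrays; the class name SortComparison, the helper randomArray, the array size, and the seed are assumptions for this example. Timings vary with hardware, array size, and JVM warm-up, so no expected numbers are shown.

```java
import java.util.Arrays;
import java.util.Random;

public class SortComparison {
    // Hypothetical helper: build a reproducible array of n random ints.
    static int[] randomArray(int n, long seed) {
        Random rng = new Random(seed);
        int[] a = new int[n];
        for (int i = 0; i < n; i++) {
            a[i] = rng.nextInt();
        }
        return a;
    }

    public static void main(String[] args) {
        int[] a = randomArray(5_000_000, 42L);
        int[] b = a.clone(); // identical input for both sorts

        long t0 = System.nanoTime();
        Arrays.sort(a);          // sequential sort
        long t1 = System.nanoTime();
        Arrays.parallelSort(b);  // may split the work across multiple threads
        long t2 = System.nanoTime();

        System.out.printf("Arrays.sort:         %d ms%n", (t1 - t0) / 1_000_000);
        System.out.printf("Arrays.parallelSort: %d ms%n", (t2 - t1) / 1_000_000);
        System.out.println("same result: " + Arrays.equals(a, b));
    }
}
```

A single timed run like this is only a rough indication; repeating the measurement several times gives a fairer picture.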