CS 332 s20 — The Process Model

1 Background: Regions of Memory

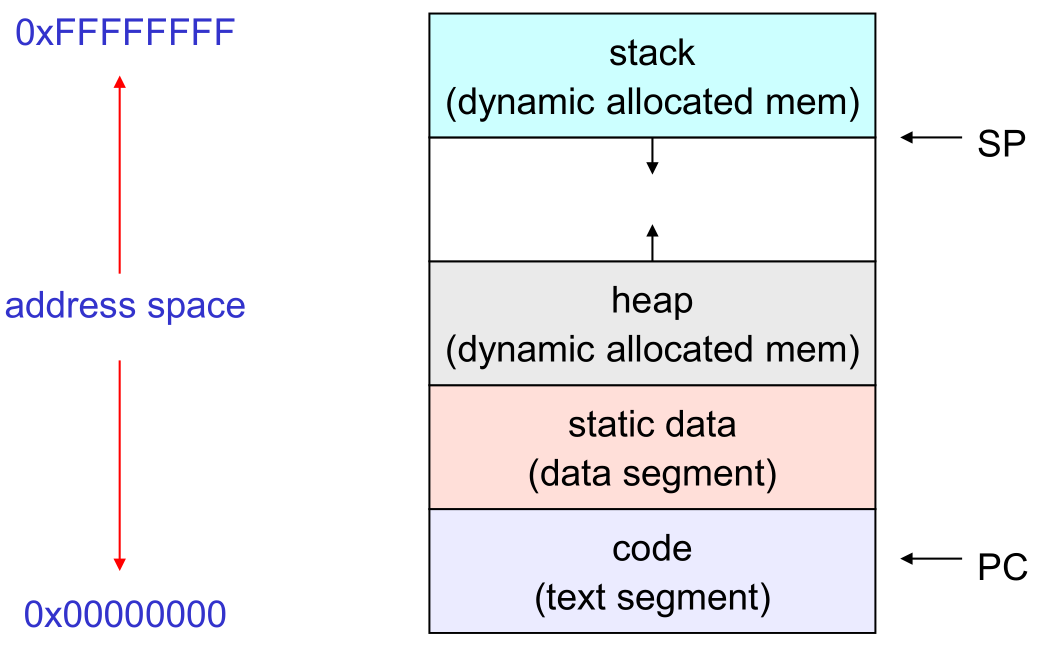

Though a process typically treats memory as one long array of bytes, that memory is actually divided into several segments, or memory regions. Local variables, whose sizes are fixed at compile time, are located on the stack, which resides at high addresses in memory. As more data is added to the stack, the region grows to include lower addresses, so we say the stack grows down.

Memory allocated dynamically using malloc is placed on the heap, which resides somewhere in the middle of memory.

As more data is added to the heap, the region grows to include higher addresses, so we say the heap grows up.

The other three regions (static data, literals, and instructions) are fixed in size and get initialized when a program starts running.

This C Tutor example demonstrates how strings are allocated in various regions of memory. Note that C Tutor labels the heap-allocated string and the string literal as both being in the "Heap", but pay attention to the pointer values that get printed out. The stack-allocated string is located at a high address, the heap-allocated string at a middle address, and the static literal at a low address.

2 Reading: The Process

Read The Abstraction: The Process (chapter 4, p. 27–36) from the OSTEP book. Remember that the whole book is available as a single pdf here if you prefer that. Key questions to think about:

- What kinds of data/resources are associated with a process?

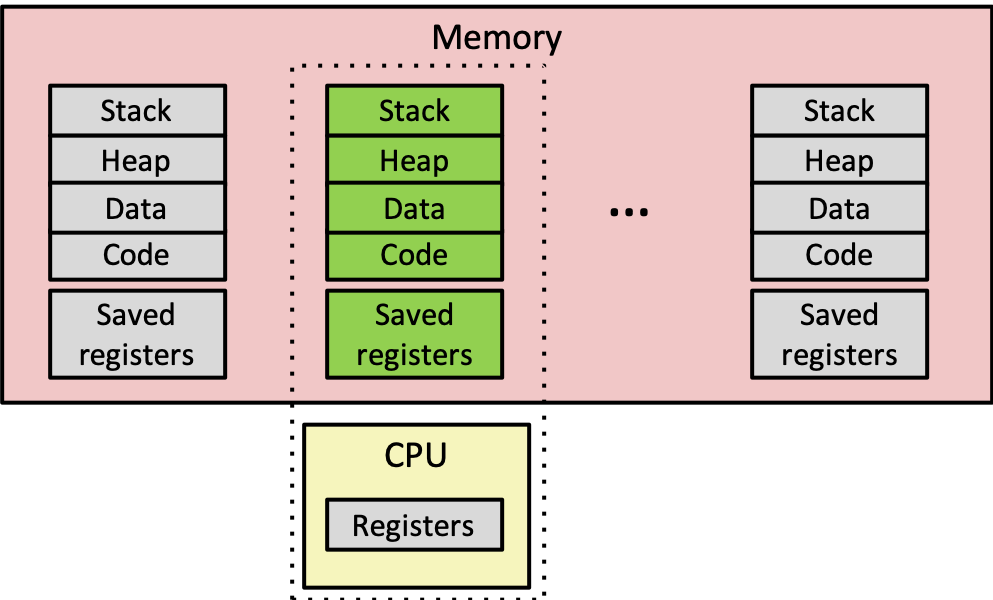

- What would figure 4.1 look like when running multiple instances of the Program (i.e., multiple processes)?

Important terms to understand from the reading:

- process

- address space

- program counter (instruction pointer)

- stack

Figure 4.5 mentions xv6, which is a teaching operating system like osv. Take a look at include/kernel/proc.h to see how osv organizes process data. In osv, each process has a single thread, which keeps track of the process state, context, and trapframe (see include/kernel/thread.h). Note that osv separates architecture-dependent and -independent code, so you'll find the definitions for the context and trapframe structs in arch/x86-64/include/arch/trap.h and arch/x86-64/include/arch/cpu.h.

3 Notes on Processes

3.1 What is a Process?

- The process is the OS's abstraction for execution

- A process is a program in execution

- Simplest (classic) case: a sequential process

- An address space (an abstraction of memory)

- A single thread of execution (an abstraction of the CPU)

- A sequential process is:

- The unit of execution

- The unit of scheduling

- The dynamic (active) execution context

- vs. the program: static, just a bunch of bytes

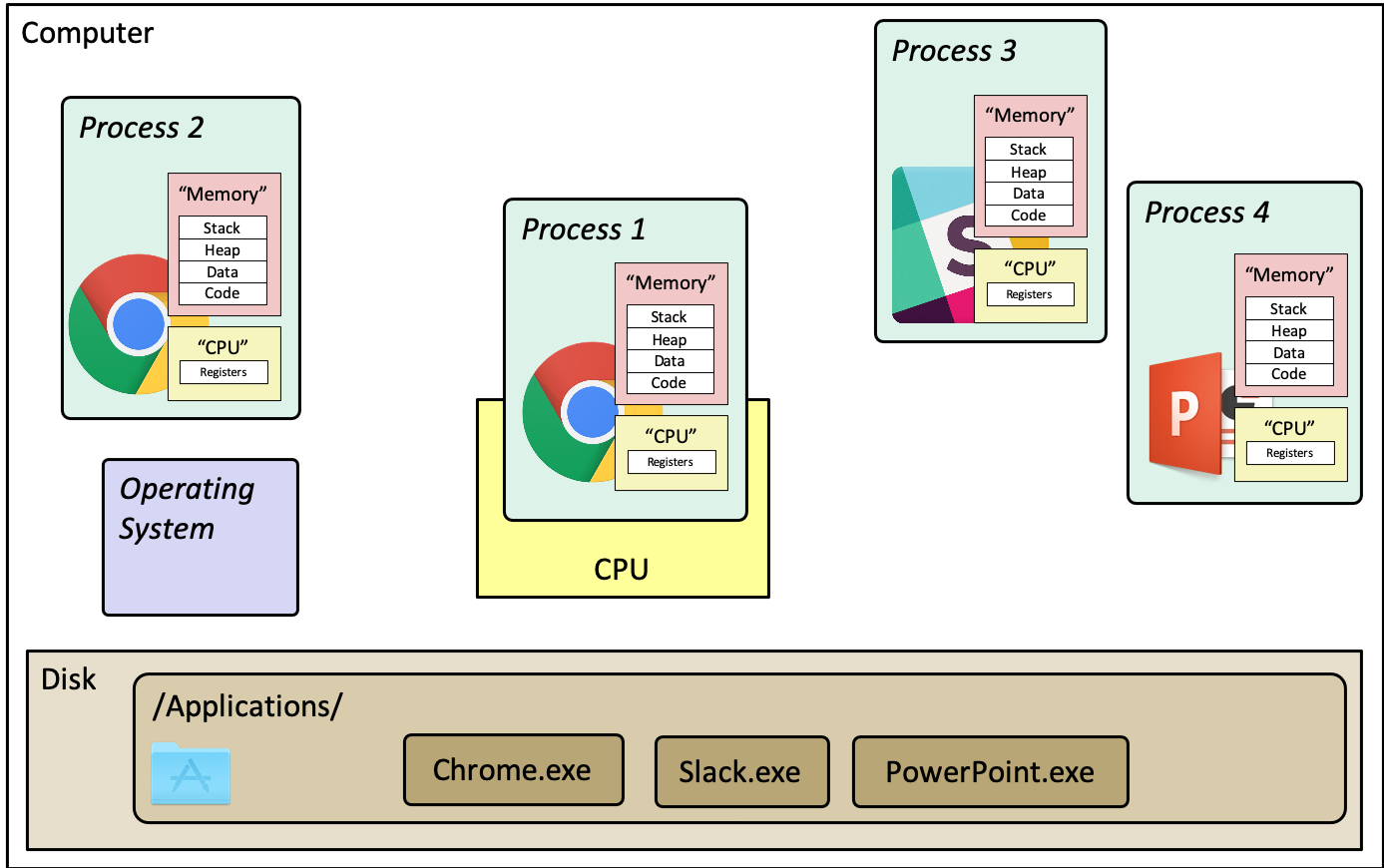

Figure 3: A diagram illustrating the high-level idea of a process. Each process has its own "memory" and "CPU", which in reality are virtualized shared resources. A program (like Chrome.exe) can have multiple running instances (processes). Note that the operating system is not a process; it's just a block of code.

3.2 What's in a process?

- A process consists of (at least):

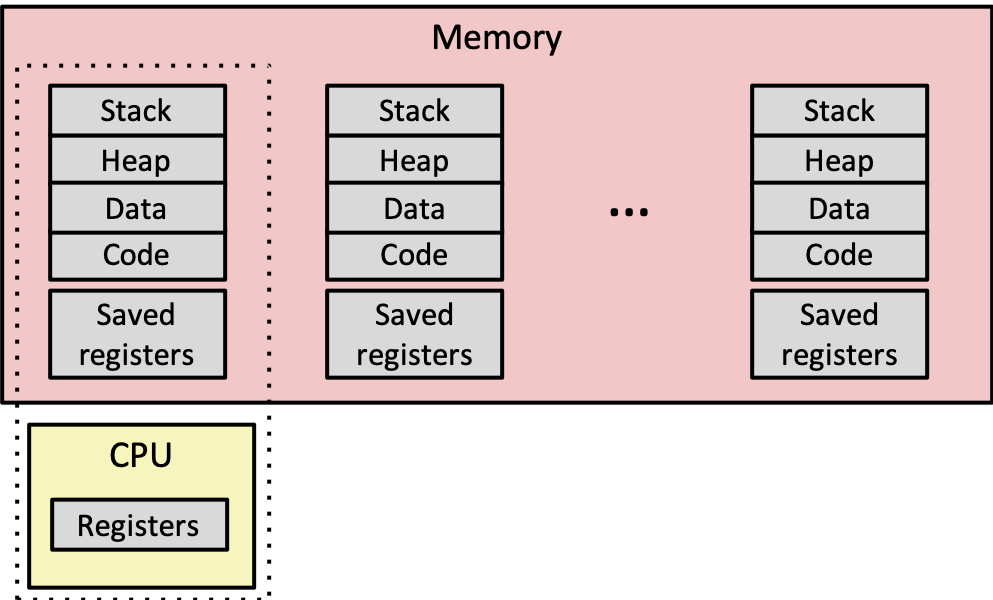

- An address space, containing

- the code (instructions) for the running program

- the data for the running program (static data, heap data, stack)

- CPU state, consisting of

- The program counter (PC), indicating the next instruction

- The stack pointer

- Other general purpose register values

- A set of OS resources

- open files, network connections, sound channels, …

- In other words, it's all the stuff you need to run the program

- or to re-start it, if it's interrupted at some point

3.2.1 A process's address space (idealized)

SP is the stack pointer, PC is the program counter

3.3 OS Process Representation

- (Like most things, the particulars depend on the specific OS, but the principles are general)

- The name for a process is its process ID (PID)

- An integer

- The PID namespace is global to the system

- Only one process at a time has a particular PID

- Operations that create processes return a PID

- E.g., fork()

- Operations on processes take PIDs as an argument

- E.g., kill(), wait()

- Much more on these calls in a later topic

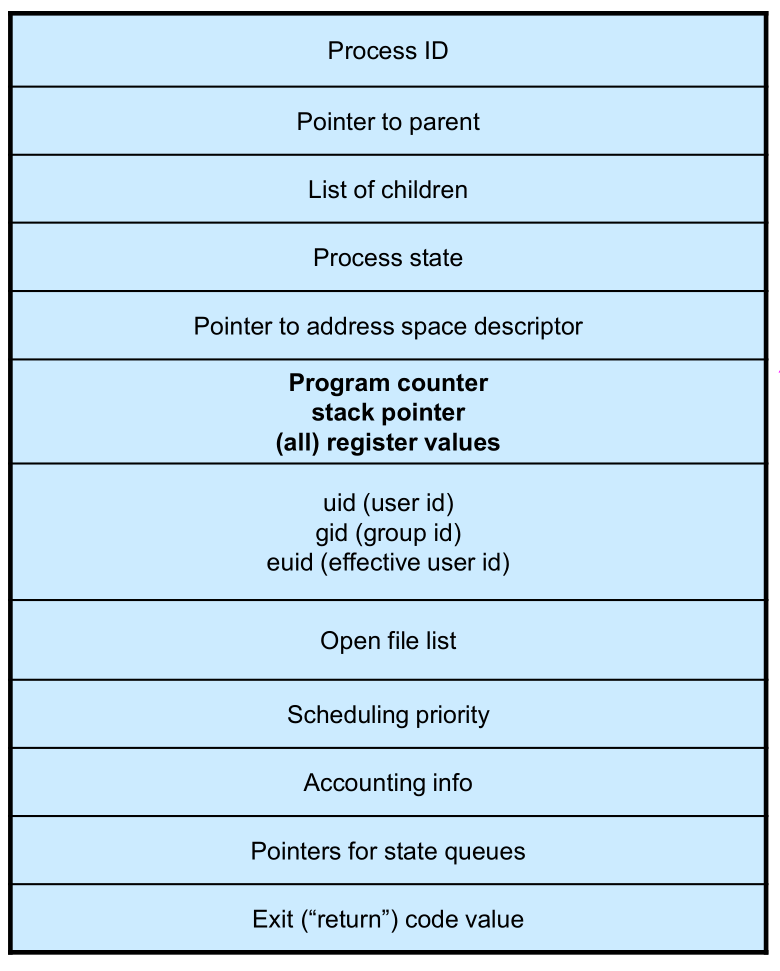

- The OS maintains a data structure to keep track of a process's state

- Called the process control block (PCB) or process descriptor

- Identified by the PID

- OS keeps all of a process's execution state in (or linked from) the PCB when the process isn't running

- PC, SP, registers, etc.

- when a process is unscheduled, the execution state is transferred out of the hardware registers into the PCB

- (when a process is running, its state is spread between the PCB and the CPU)

- Note: It's natural to think that there must be some esoteric techniques being used

- fancy data structures that you'd never think of yourself

- Wrong! It's pretty much just what you'd think of!

3.3.1 The PCB

- The PCB is a data structure with many, many fields:

- process ID (PID)

- parent process ID (PPID)

- execution state

- program counter, stack pointer, registers

- address space info

- UNIX user id (uid), group id (gid)

- scheduling priority

- accounting info

- pointers for state queue

- …

Figure 5: This is (a simplification of) what each of those PCBs looks like inside!

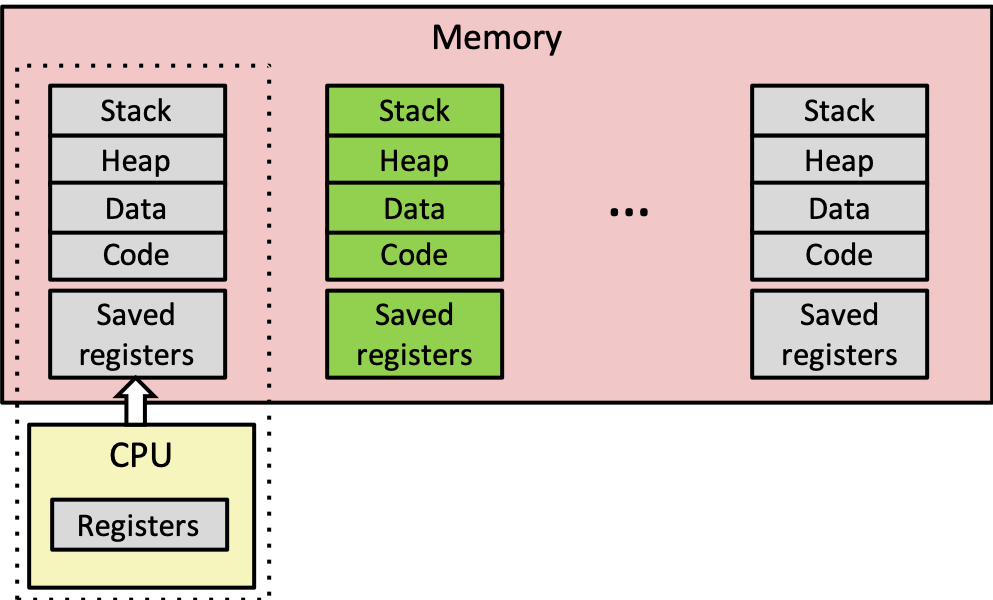

- PCBs and CPU state

- When a process is running, its CPU state is inside the CPU

- PC, SP, registers

- CPU contains current values

- When the OS gets control because of a system call or other source of control transfer, the OS saves the CPU state of the running process in that process's PCB

- When the OS returns the process to the running state, it loads the hardware registers with values from that process's PCB: general purpose registers, stack pointer, instruction pointer

- The act of switching the CPU from one process to another is called a context switch

- systems may do 100s or 1000s of switches/sec.

- takes a few microseconds on today's hardware

- the diagram below shows a very simplified view of a context switch

- the address space for each process lives in shared physical memory, isolated from each other by the OS

- Choosing which process to run next is called scheduling (more on this in a future topic)

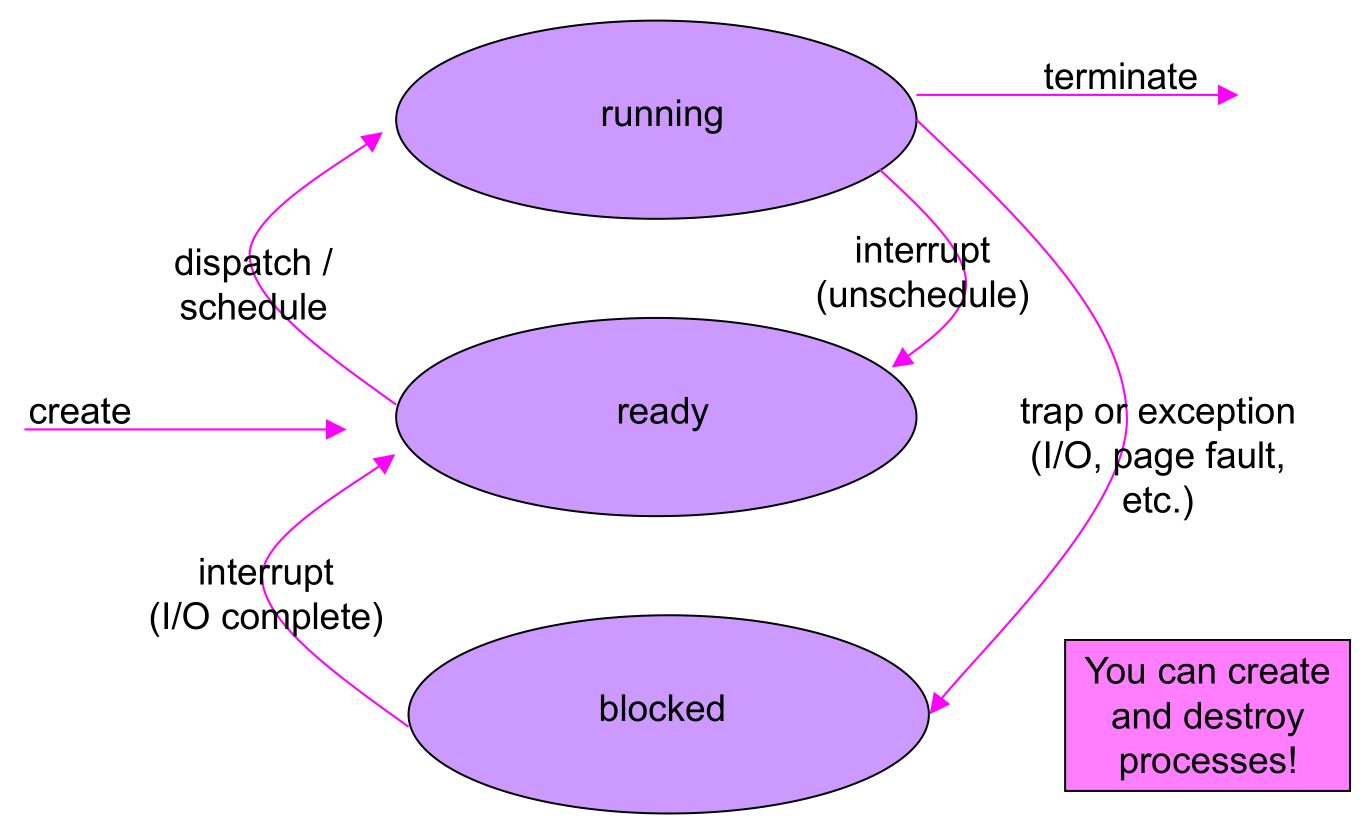

3.4 Process Execution States

- Each process has an execution state, which indicates what it's currently doing

- ready: waiting to be assigned to a CPU

- could run, but another process has the CPU

- running: executing on a CPU

- it's the process that currently controls the CPU

- waiting (aka blocked): waiting for an event, e.g., I/O completion, or a message from (or the completion of) another process

- cannot make progress until the event happens

- As a process executes, it moves from state to state

- UNIX: run ps; the STAT column shows each process's current state

- Which state is a process in most of the time?

4 Homework

- Take the review quiz on today's material

- Download the process-run.py simulation and work through the associated questions on p. 38-39 of OSTEP chapter 4 (nothing to turn in)

- Start lab 1 if you haven't already!

Footnotes:

Because a process can move out of the running state and be replaced with a different running process. When the swapped-out process is run again, its register context will need to be loaded back onto the CPU. Hence, the OS must save it at the time a process is descheduled or blocked.

A zombie process is one that has terminated, but whose data has not been deallocated. This state is useful because it allows the parent process to examine the terminated process's return code (e.g., to check if it completed successfully).