CS 332 w22 — Semaphores and Advanced Locks

1 Introduction

Consider this situation:

Suppose a library has 10 identical study rooms, to be used by one student at a time. Students must request a room from the front desk if they wish to use a study room. If no rooms are free, students wait at the desk until someone relinquishes a room. When a student has finished using a room, the student must return to the desk and indicate that one room has become free.

If the rooms represent some shared resource, and the students represent threads, could we implement this situation using a mutual exclusion lock?

In controlling access to the study rooms, we are hampered by the fact that a lock is binary—it's either BUSY or FREE. But the system has 11 states: between 0 and 10 study rooms will be free (assuming the rooms are interchangeable). It would be useful to have a synchronization object with more than two states.

2 The Semaphore

- A semaphore has a value

- When created, a semaphore's value can be initialized to any non-negative integer

- `sem_wait(sem_t *sem)` atomically decrements the value by 1, and then blocks if the resulting value is negative

- `sem_post(sem_t *sem)` atomically increments the value by 1. If any threads are waiting in `sem_wait`, wake one up

- no other operations are allowed, including reading the current value

- `sem_wait` and `sem_post` are traditionally referred to as `P` and `V`, and can also be called `down` and `up`
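The study room scenario from the introduction can be sketched with a POSIX counting semaphore. This is a minimal sketch, assuming a Linux-like system with unnamed semaphores from `<semaphore.h>`; the function names (`desk_open`, `room_acquire`, `room_try_acquire`, `room_release`) are made up for illustration. Note that POSIX describes the value as never going below zero (a waiter blocks while the value is 0), which behaves the same from the caller's point of view as the "decrement, then block if negative" description above.

```c
#include <assert.h>
#include <errno.h>
#include <semaphore.h>

#define NUM_ROOMS 10

static sem_t rooms;  /* value = number of free study rooms */

/* Open the front desk: all rooms start out free. */
void desk_open(void) {
    /* second argument 0: shared between threads of one process */
    int rc = sem_init(&rooms, 0, NUM_ROOMS);
    assert(rc == 0);
    (void)rc;
}

/* Request a room; the caller waits at the desk while no room is free. */
void room_acquire(void) {
    /* sem_wait can be interrupted by a signal; retry in that case */
    while (sem_wait(&rooms) == -1 && errno == EINTR) {
    }
}

/* Non-blocking variant: returns 1 if a room was taken, 0 if none is free. */
int room_try_acquire(void) {
    return sem_trywait(&rooms) == 0;
}

/* Return a room; wakes one waiting student, if any. */
void room_release(void) {
    sem_post(&rooms);
}
```

A student thread would call `room_acquire()`, use the room, and then call `room_release()`. The semaphore's value tracks which of the 11 states the system is in without any extra bookkeeping.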

2.1 Mutual Exclusion or General Waiting

- can be used for mutual exclusion (like a lock) or general waiting for another thread to do something (similar to condition variables)

- when a semaphore's value is initialized to 1, `sem_wait()` is equivalent to `lock_acquire()` and `sem_post()` is equivalent to `lock_release()`

- general waiting requires somewhat more complex approaches:

  - initialize semaphore with the value of some finite resource, requesting threads wait if all are busy (like the study room example)

  - a role analogous to `thread_join`: the precise order of events does not matter, the call returns right away if the forked thread has finished and waits otherwise

    - initialize the semaphore at 0

    - each call to `down` waits for the corresponding thread to call `up`, returning immediately if `up` is called first
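The join-like use of a semaphore initialized at 0 can be sketched as follows. This is an illustrative example using POSIX threads and semaphores rather than the course's own thread API; `run_and_wait`, `child`, `done`, and `result` are hypothetical names.

```c
#include <assert.h>
#include <pthread.h>
#include <semaphore.h>

static sem_t done;   /* starts at 0: the child has not finished yet */
static int result;   /* written by the child before it posts */

static void *child(void *arg) {
    result = *(int *)arg * 2;
    sem_post(&done);         /* up: announce completion */
    return NULL;
}

/* Fork a child and wait for its result through the semaphore. */
int run_and_wait(int input) {
    pthread_t t;
    int rc;

    rc = sem_init(&done, 0, 0);
    assert(rc == 0);
    rc = pthread_create(&t, NULL, child, &input);
    assert(rc == 0);
    (void)rc;

    /* down: blocks only if the child has not posted yet. If the child
     * already finished, the value is 1 and this returns immediately. */
    sem_wait(&done);
    int r = result;

    /* The synchronization already happened above; the join here only
     * reclaims the thread's resources before the semaphore is destroyed. */
    pthread_join(t, NULL);
    sem_destroy(&done);
    return r;
}
```

Either ordering gives the same outcome: if the child posts first, the parent's `sem_wait` does not block, and if the parent waits first, the child's `sem_post` wakes it.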

2.2 Semaphores Considered Harmful

- can be difficult to coordinate shared state with general waiting

  - `down` does not release a held lock like `condvar_wait` does

    - the lock must be released before calling `down`, and the programmer needs to construct the program carefully so that it still works in this case

  - unlike `condvar_signal`, which has no effect if no threads are waiting, `up` causes the next `down` to proceed without blocking whenever it happens

- two different abstractions, semaphore for mutual exclusion and semaphore for general waiting, can make code unclear and confusing

- locks and condition variables make this distinction obvious

- programmer has to carefully map shared object's state to the semaphore's value

- whereas a condition variable with an associated lock can wait on any aspect of object's state

2.3 Useful in Interrupt Handlers

- Locks and condition variables typically a better choice, but semaphores useful for synchronizing communication between I/O devices and waiting threads

- Communications between hardware and kernel can’t be coordinated with software lock—instead hardware and drivers use atomic memory operations

- Interrupt handler for device I/O needs to wake up waiting thread

- Use a condition variable to signal without the lock? (naked notify)

- Corner case: thread checks shared memory for I/O to process, but before it waits, hardware updates shared memory and interrupt handler signals

- signal has no effect with no waiting threads, when the thread calls wait, signal has been missed

- Because semaphores maintain state (i.e., value) they prevent this case

- Call to `sem_post` never lost, regardless of ordering
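The "never lost" property can be illustrated in user space with a POSIX semaphore standing in for the kernel's. This is only a sketch: `fake_interrupt_handler` is a hypothetical stand-in for a real interrupt handler, and `io_init`, `io_wait`, and `io_poll` are made-up names. (POSIX does list `sem_post` as async-signal-safe, which is the closest user-space analogue of calling it from a handler.)

```c
#include <assert.h>
#include <errno.h>
#include <semaphore.h>

static sem_t io_ready;  /* counts I/O completions not yet consumed */

void io_init(void) {
    int rc = sem_init(&io_ready, 0, 0);
    assert(rc == 0);
    (void)rc;
}

/* Hypothetical stand-in for the device's interrupt handler. */
void fake_interrupt_handler(void) {
    sem_post(&io_ready);  /* recorded in the value even if nobody waits */
}

/* Wait for one completion; returns at once if one is already pending. */
void io_wait(void) {
    while (sem_wait(&io_ready) == -1 && errno == EINTR) {
    }
}

/* Non-blocking check: 1 if a pending completion was consumed, else 0. */
int io_poll(void) {
    return sem_trywait(&io_ready) == 0;
}
```

If `fake_interrupt_handler()` runs before the thread reaches `io_wait()`, the wait still returns, because the post was remembered in the semaphore's value. A condition variable signal issued at the same moment would have had no effect.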

3 Reader/Writer Locks

It is often a good idea to optimize for the common case. That is, if a situation occurs often, it can be well worth optimizing for that situation even if the effect will be negative in rare cases. One such situation is data that is frequently read and rarely written. We've discussed how multiple threads accessing read-only data does not require any synchronization. The idea here is to design a new kind of lock that allows this benign parallelism while still protecting against lost updates and other concurrency bugs. Cue the Reader-Writer Lock.

- Purpose is to protect shared data, like a standard mutual exclusion lock

- Allows multiple reader threads to simultaneously access shared data

- Only one writer thread may hold the `RWLock` at any one time

  - Writer thread can read and write, while reader threads can only read

  - Prevents any other access (writer or reader)

- Commonly used in databases, supporting frequent fast queries while allowing less frequent updates

- OS kernel also maintains frequently-read, rarely-updated data structures

```c
#include <assert.h>
#include <stdbool.h>

// struct lock, struct condvar, and their operations are assumed to be
// provided elsewhere (e.g., by the course's thread library)

struct rwlock {
    // synchronization variables
    struct lock mutex;
    struct condvar read_go;
    struct condvar write_go;

    // state variables
    int active_readers;
    int active_writers;
    int waiting_readers;
    int waiting_writers;
};

// declared up front because they are used before they are defined
bool read_should_wait(struct rwlock *rw);
bool write_should_wait(struct rwlock *rw);

void init_rwlock(struct rwlock *rw) {
    init_lock(&rw->mutex);
    init_condvar(&rw->read_go);
    init_condvar(&rw->write_go);
    rw->active_readers = 0;
    rw->active_writers = 0;
    rw->waiting_readers = 0;
    rw->waiting_writers = 0;
}

/* Wait until no active or waiting writes, then proceed */
void start_read(struct rwlock *rw) {
    // use the lock to make operations atomic
    lock_acquire(&rw->mutex);
    rw->waiting_readers++;
    while (read_should_wait(rw)) { // <- always wait inside a loop
        condvar_wait(&rw->read_go, &rw->mutex);
    }
    rw->waiting_readers--;
    rw->active_readers++;
    lock_release(&rw->mutex);
}

/* Done reading. If no other active reads, a write may proceed */
void done_read(struct rwlock *rw) {
    // use the lock to make operations atomic
    lock_acquire(&rw->mutex);
    rw->active_readers--;
    // we should signal if we're the last active read and a write is waiting
    if (rw->active_readers == 0 && rw->waiting_writers > 0) {
        condvar_signal(&rw->write_go);
    }
    lock_release(&rw->mutex);
}

/* Read waits if any active or waiting write ("writers preferred") */
bool read_should_wait(struct rwlock *rw) {
    return rw->active_writers > 0 || rw->waiting_writers > 0;
}

/* Wait until no active read or write, then proceed */
void start_write(struct rwlock *rw) {
    // use the lock to make operations atomic
    lock_acquire(&rw->mutex);
    rw->waiting_writers++;
    while (write_should_wait(rw)) {
        condvar_wait(&rw->write_go, &rw->mutex);
    }
    rw->waiting_writers--;
    rw->active_writers++;
    lock_release(&rw->mutex);
}

/* Done writing. A waiting write or all reads may proceed */
void done_write(struct rwlock *rw) {
    // use the lock to make operations atomic
    lock_acquire(&rw->mutex);
    rw->active_writers--;
    assert(rw->active_writers == 0);
    if (rw->waiting_writers > 0) {
        condvar_signal(&rw->write_go);
    } else {
        condvar_broadcast(&rw->read_go);
    }
    lock_release(&rw->mutex);
}

/* Write waits for active read or write */
bool write_should_wait(struct rwlock *rw) {
    return rw->active_writers > 0 || rw->active_readers > 0;
}
```

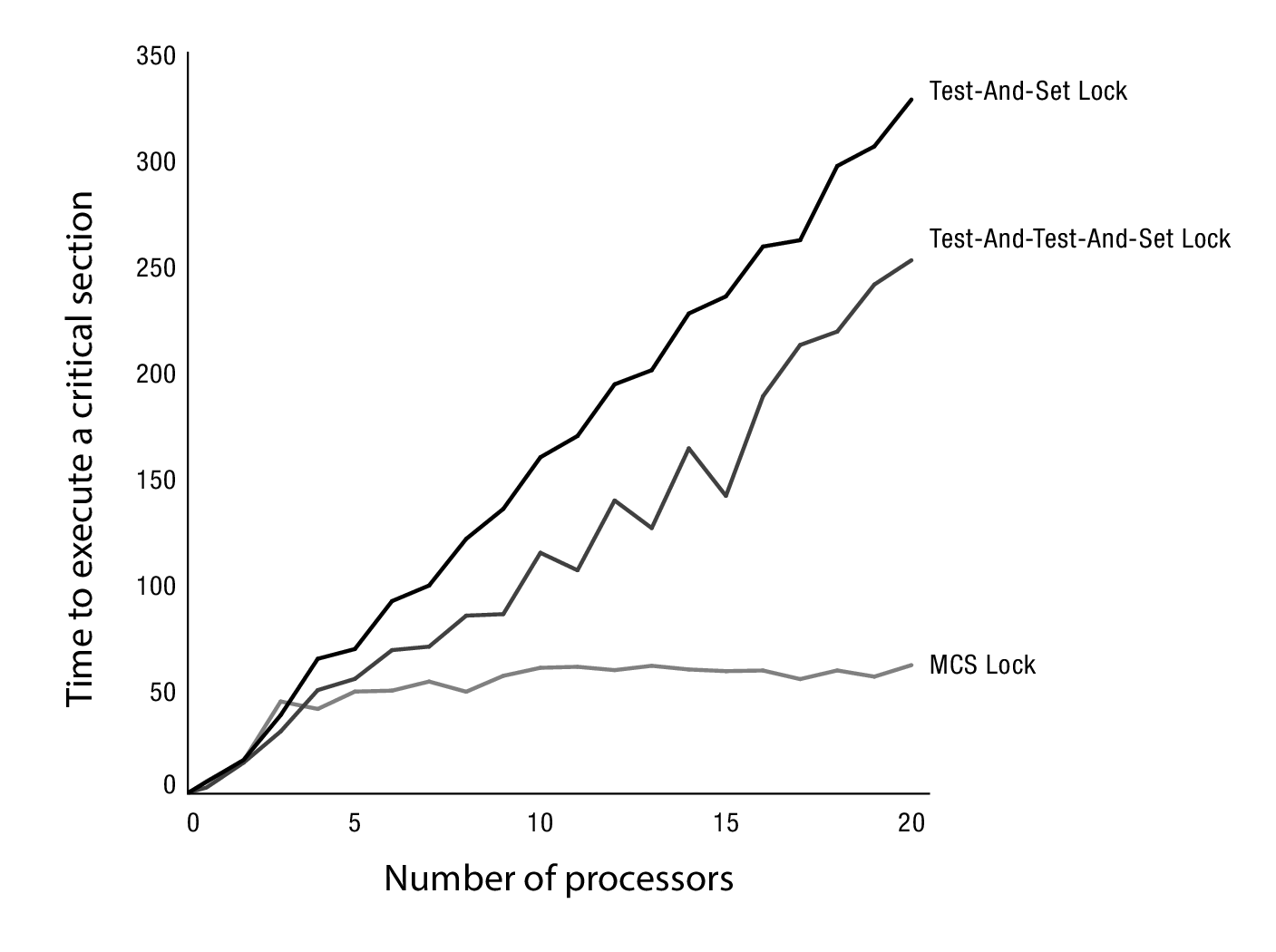

4 Optimizing Under Lock Contention

- Standard lock implementation performs well when the lock is usually free

- When there's a lot of competition to acquire a lock, performance can suffer

- What happens if many processors try to acquire the lock at the same time?

- Hardware doesn't prioritize the thread trying to release the lock

- Many processors trying to gain exclusive access to the memory location of the lock

- Could a processor check if the lock is FREE before acquiring?

- When lock is freed, must be communicated to other processors

- Significant latency as new lock value gets loaded into each processor's cache

- Maybe we have processors check less frequently?

- More scalable solution: assign each waiting thread a separate memory location to check
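The idea of checking whether the lock is FREE before trying to acquire it is often called "test and test-and-set". Below is a minimal sketch using C11 atomics; the `struct spinlock` type and function names are assumptions for illustration, not the course's lock implementation.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

struct spinlock {
    atomic_bool busy;  /* false = FREE, true = BUSY */
};

void spin_init(struct spinlock *l) {
    atomic_init(&l->busy, false);
}

/* One acquisition attempt; returns true if the lock was obtained. */
bool spin_try_acquire(struct spinlock *l) {
    /* "test": a plain read, which can be served from the local cache
     * while the lock stays BUSY, so it does not demand exclusive access */
    if (atomic_load(&l->busy)) {
        return false;
    }
    /* "test-and-set": atomic_exchange returns the previous value, so a
     * result of false means this caller flipped FREE to BUSY */
    return !atomic_exchange(&l->busy, true);
}

void spin_acquire(struct spinlock *l) {
    while (!spin_try_acquire(l)) {
        /* busy-wait */
    }
}

void spin_release(struct spinlock *l) {
    atomic_store(&l->busy, false);
}
```

This reduces traffic while the lock is held, but every waiter still spins on the same memory word, so a release still has to propagate to every waiting processor's cache. That remaining cost is what the per-thread waiting location addresses.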

4.1 MCS Lock

- The MCS lock is the most widely used implementation of this idea

- Named after its authors, Mellor-Crummey and Scott

- Takes advantage of atomic `compare_and_swap` instruction

  - Operates on a word of memory

  - Check that the value of the memory word matches an expected value

    - e.g., no other thread did a `compare_and_swap` first

  - If value has changed, try again

  - If not, memory word is set to a new value

```
// pseudocode
x = SHARED_DATA;
while (!compare_and_swap(&SHARED_DATA, x, NEW_VALUE)) {
    // someone changed SHARED_DATA between when
    // we read it and compare_and_swap
    // read SHARED_DATA again and repeat
    x = SHARED_DATA;
}
// once we get here, we know we have atomically
// set SHARED_DATA to NEW_VALUE
```
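In standard C11, the same retry loop can be written with `atomic_compare_exchange_weak` from `<stdatomic.h>`. The sketch below is one possible rendering; `shared_data` and `atomic_double_plus_one` are illustrative names, and the update `2 * x + 1` is chosen only because it depends on the old value.

```c
#include <assert.h>
#include <stdatomic.h>

static atomic_int shared_data = 0;

/* Atomically replace shared_data with 2 * old + 1; returns the old value. */
int atomic_double_plus_one(void) {
    int x = atomic_load(&shared_data);
    /* On failure, atomic_compare_exchange_weak stores the value it found
     * into x, so the re-read from the pseudocode happens automatically
     * and the new value is recomputed from the fresh x on each retry. */
    while (!atomic_compare_exchange_weak(&shared_data, &x, 2 * x + 1)) {
        /* someone changed shared_data (or the weak form failed
         * spuriously); try again */
    }
    return x;
}
```

The weak form may fail spuriously on some hardware, which is harmless inside a loop like this one.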

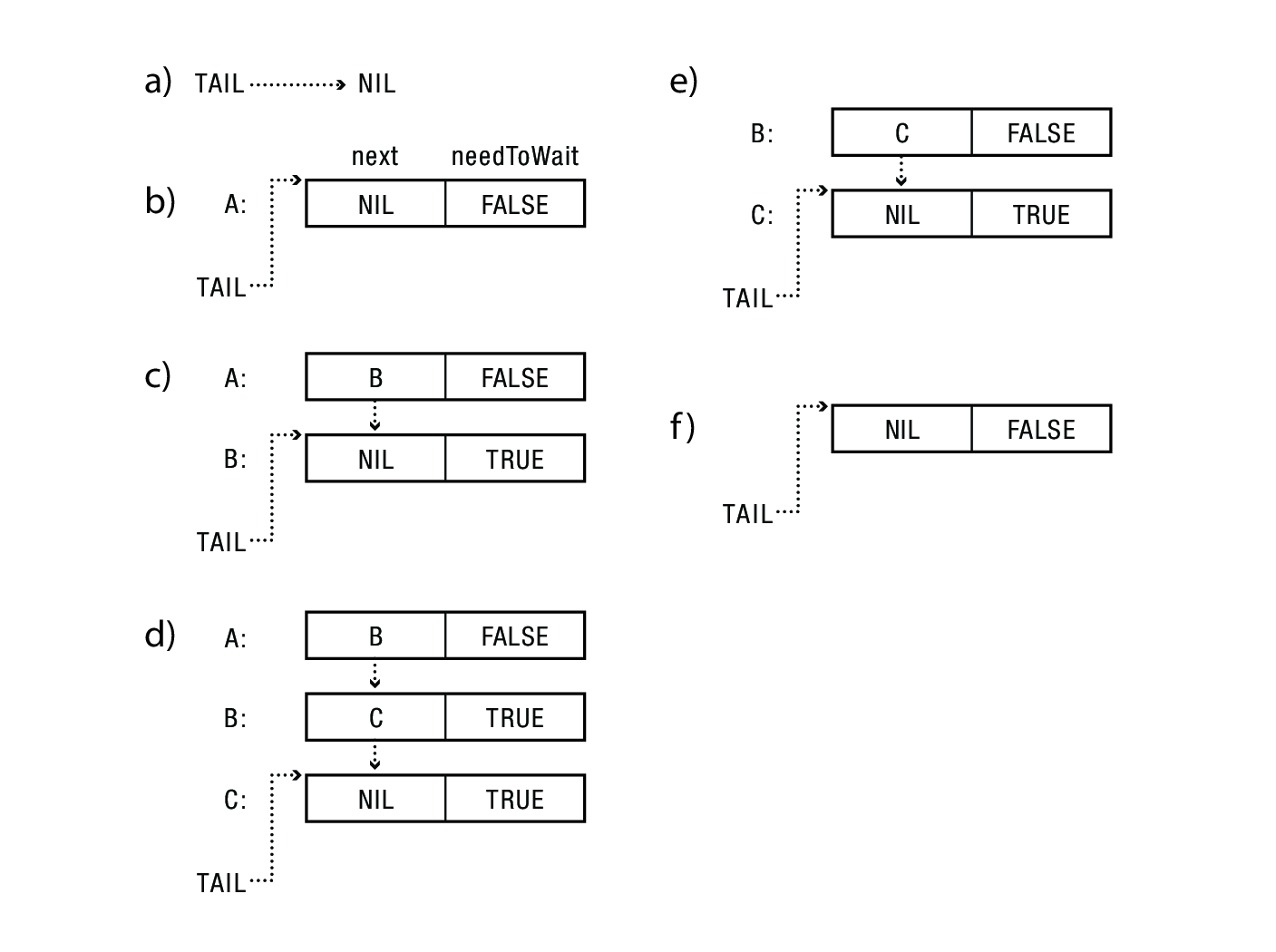

- Maintain a list of threads waiting for the lock

- Front of list holds the lock

- New thread uses `compare_and_swap` to atomically add itself to the end of the list

- For each thread, track next waiting thread and variable to busy wait on

- Per-thread `needToWait` flag lets them wait on separate memory locations

- Initially (a) `tail` is NULL indicating that the lock is FREE

- To acquire the lock (b), thread A atomically sets `tail` to point to A's lock data

- Additional threads B and C queue by adding themselves (atomically) to the `tail` (c) and (d); they then busy-wait on their respective lock data's `needToWait` flag.

- Thread A hands the lock to B by clearing B's `needToWait` flag (e)

- B hands the lock to C by clearing C's `needToWait` flag (f)

- C releases the lock by setting `tail` back to NULL if and only if no one else is waiting, that is, iff `tail` still points to C's lock data

The MCS lock has a performance advantage when there are lots of waiting threads. If the lock is usually free, the extra overhead is not worth it.
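The steps above can be sketched with C11 atomics. This is a minimal sketch under stated assumptions, not a reference implementation: the type names `struct mcs_lock` and `struct mcs_node` and the function names are invented here, while `tail`, `next`, and `needToWait` follow the notes. Default sequentially consistent atomics are used throughout for simplicity.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* Per-thread lock data: one node per waiting thread. */
struct mcs_node {
    _Atomic(struct mcs_node *) next;  /* next waiting thread, if any */
    atomic_bool needToWait;           /* the flag this thread spins on */
};

struct mcs_lock {
    _Atomic(struct mcs_node *) tail;  /* NULL means the lock is FREE */
};

void mcs_init(struct mcs_lock *lock) {
    atomic_init(&lock->tail, (struct mcs_node *)NULL);
}

void mcs_acquire(struct mcs_lock *lock, struct mcs_node *me) {
    atomic_store(&me->next, (struct mcs_node *)NULL);
    atomic_store(&me->needToWait, true);

    /* Atomically append ourselves: CAS loop that swings tail to me. */
    struct mcs_node *prev = atomic_load(&lock->tail);
    while (!atomic_compare_exchange_weak(&lock->tail, &prev, me)) {
        /* prev now holds the current tail; retry */
    }
    if (prev == NULL) {
        return;  /* lock was FREE: we are at the front of the list */
    }
    atomic_store(&prev->next, me);  /* link in behind our predecessor */
    while (atomic_load(&me->needToWait)) {
        /* busy-wait on our own flag only */
    }
}

void mcs_release(struct mcs_lock *lock, struct mcs_node *me) {
    struct mcs_node *succ = atomic_load(&me->next);
    if (succ == NULL) {
        /* No visible successor: free the lock iff tail still points to us. */
        struct mcs_node *expected = me;
        if (atomic_compare_exchange_strong(&lock->tail, &expected,
                                           (struct mcs_node *)NULL)) {
            return;
        }
        /* A new thread updated tail but has not linked itself yet. */
        while ((succ = atomic_load(&me->next)) == NULL) {
            /* wait for the link to appear */
        }
    }
    atomic_store(&succ->needToWait, false);  /* hand the lock over */
}
```

Each thread passes its own `struct mcs_node` (often a stack variable) to both calls, so every waiter spins on a distinct memory location, and a release touches only the successor's flag.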

4.2 RCU Lock

- A standard reader-writer lock can become a nasty bottleneck for read-dominated workloads

- If readers have short critical sections, the need to acquire a mutex before and after each read in order to update the reader count limits the rate at which readers can enter the critical section

- i.e., a reader-writer lock requires readers to start a read one-at-a-time, and with large numbers of concurrent reads, this will become an issue

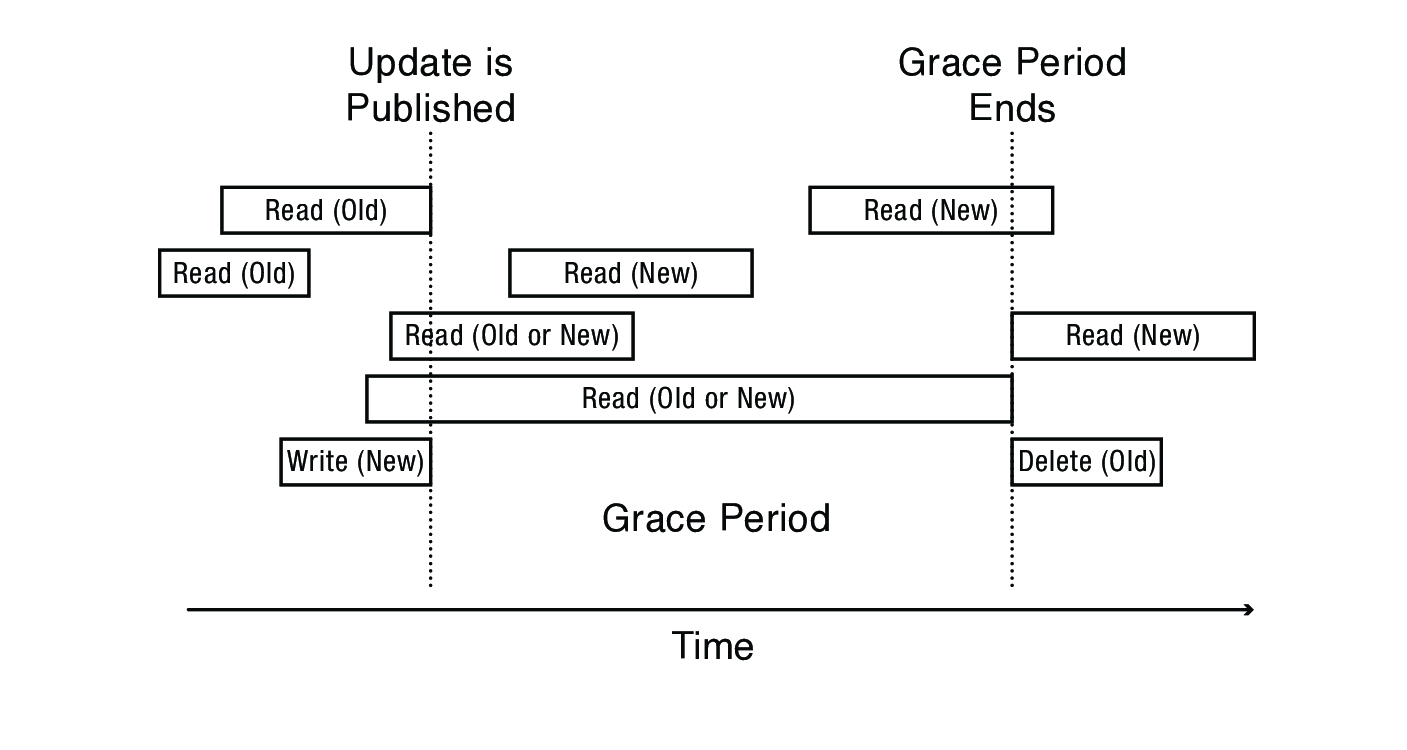

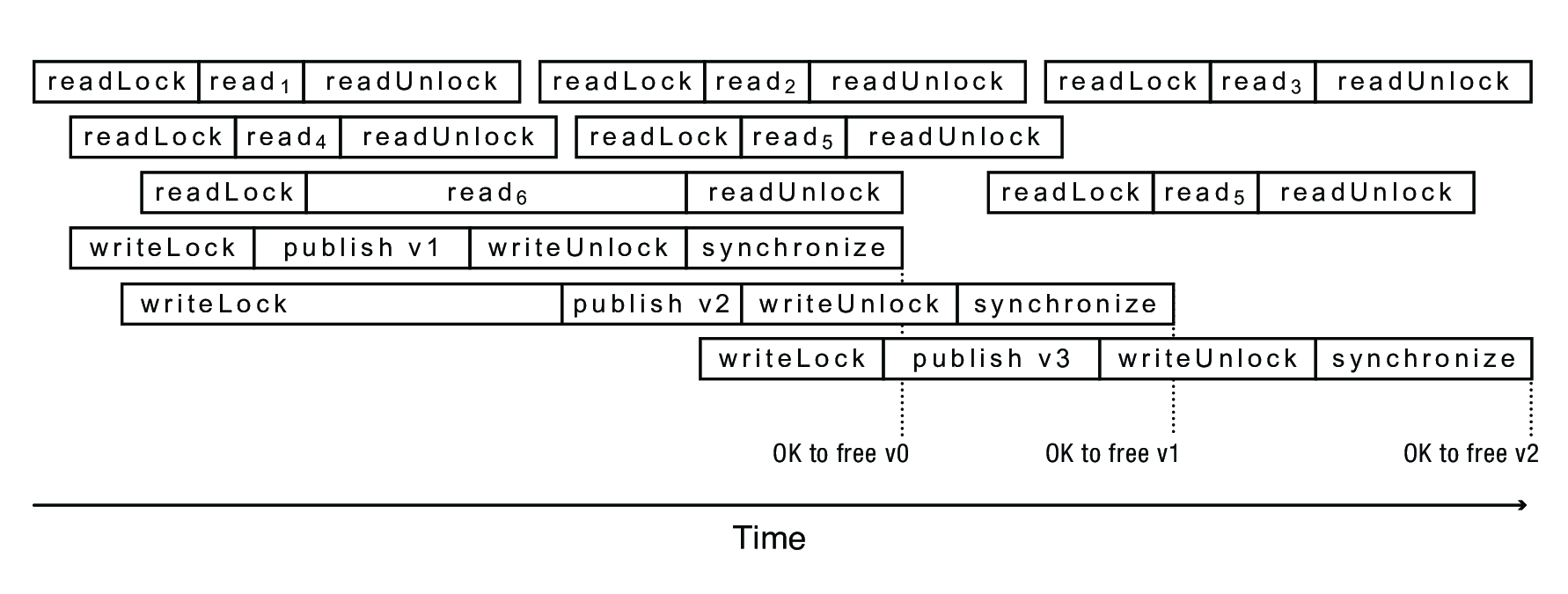

- The read-copy-update (RCU) approach optimizes the overhead for readers at the expense of writers

- Goal: very fast reads to shared data

- Reads proceed without first acquiring a lock

- Assumes write is (very) slow and infrequent

- Multiple concurrent versions of the data

- Readers may see old version for a limited time

- When might this be ok?

- Restricted update

- Writer computes new version of data structure

- Publishes new version with a single atomic instruction

- Relies on integration with thread scheduler

- Guarantee all readers complete within grace period, and then garbage collect old version
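The read and publish steps can be sketched in user space with a C11 atomic pointer. This is a deliberately incomplete illustration: it shows lock-free reads and single-instruction publication, but it does not implement the grace period, which in a real RCU system depends on the scheduler integration mentioned above. The `struct config` type and all function names are hypothetical, and writers are assumed to be serialized by the caller.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

struct config {  /* hypothetical read-mostly data */
    int timeout_ms;
    int retries;
};

static _Atomic(struct config *) current_config;

/* Install the initial version. Returns 0 on success, -1 on failure. */
int config_init(int timeout_ms, int retries) {
    struct config *c = malloc(sizeof *c);
    if (c == NULL) {
        return -1;
    }
    c->timeout_ms = timeout_ms;
    c->retries = retries;
    atomic_store(&current_config, c);
    return 0;
}

/* Reader: a single atomic load, no lock. The returned version may be
 * stale if a writer publishes afterwards. */
const struct config *config_read(void) {
    return atomic_load(&current_config);
}

/* Writer: copy the current version, update the copy, publish it with one
 * atomic exchange. Returns the old version (NULL on allocation failure);
 * the caller may free it only once no reader can still be using it,
 * i.e., after the grace period, which this sketch does not track. */
struct config *config_set_timeout(int timeout_ms) {
    struct config *old = atomic_load(&current_config);
    struct config *fresh = malloc(sizeof *fresh);
    if (fresh == NULL) {
        return NULL;
    }
    *fresh = *old;                   /* read-copy */
    fresh->timeout_ms = timeout_ms;  /* update */
    return atomic_exchange(&current_config, fresh);  /* publish */
}
```

A reader that obtained its pointer before the exchange keeps seeing a consistent old version, which is the "readers may see old version for a limited time" behavior described above.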