CS 332 w22 — Caches and Address Translation

1 Caching

1.1 Definitions

- Cache

- Copy of data that is faster to access than the original

- Hit: if cache has copy

- Desired data is resident in the cache

- Miss: if cache does not have copy

- Load missing data into the cache

- Overwrite (evict) resident data to make room, if necessary

- Cache block

- Unit of cache storage (range of memory locations)

- Temporal locality

- Programs tend to reference the same memory locations multiple times

- Example: instructions in a loop

- Spatial locality

- Programs tend to reference nearby locations

- Example: data in a loop
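To make the two kinds of locality concrete, here is a minimal sketch that replays address traces through a tiny simulated cache. The block size, cache size, LRU eviction policy, and the three traces are all assumptions chosen for illustration, not properties of any real processor.

```python
from collections import OrderedDict

BLOCK_SIZE = 8   # addresses per cache block (hypothetical)
NUM_BLOCKS = 4   # blocks the cache can hold (hypothetical)

def hit_rate(addresses):
    """Replay an address trace through a tiny LRU cache; return the fraction of hits."""
    cache = OrderedDict()  # block number -> None, ordered from least to most recently used
    hits = 0
    for addr in addresses:
        block = addr // BLOCK_SIZE          # which cache block holds this address
        if block in cache:
            hits += 1
            cache.move_to_end(block)        # mark as most recently used
        else:
            if len(cache) == NUM_BLOCKS:
                cache.popitem(last=False)   # evict the least recently used block
            cache[block] = None
    return hits / len(addresses)

sequential = list(range(64))              # spatial locality: neighboring addresses
repeated = [0, 100, 200] * 20             # temporal locality: the same few addresses
scattered = [i * 64 for i in range(64)]   # neither: every access touches a new block

print(hit_rate(sequential), hit_rate(repeated), hit_rate(scattered))
```

In this sketch the sequential trace misses once per block and then hits on the rest of that block, the repeated trace misses only on its first pass, and the scattered trace never hits.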

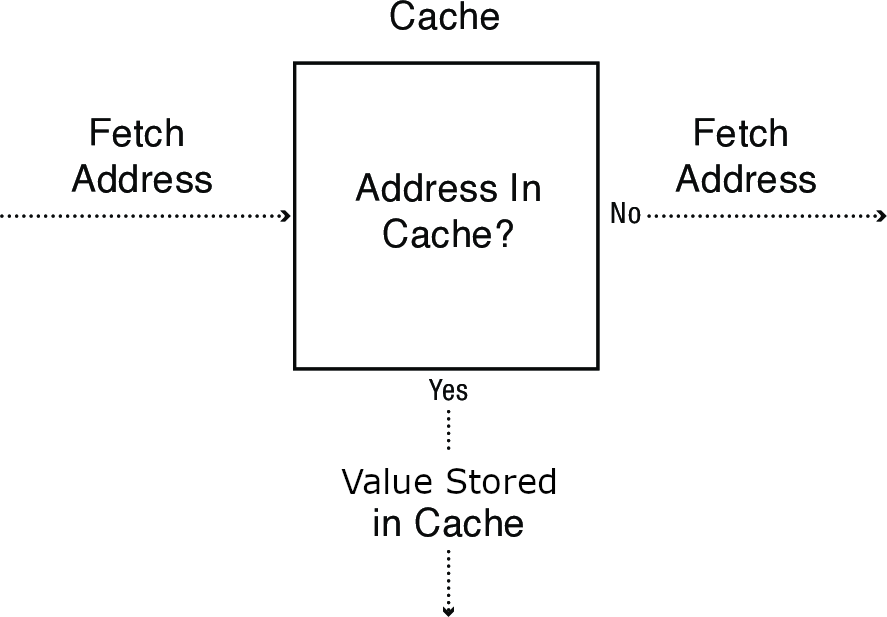

1.2 Reading from a Cache

- Cache logic implemented in hardware

- Completely transparent to the program

- But performance impact can be huge!
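One common way to quantify that impact is the average access time: hit time plus miss rate times miss penalty. The sketch below plugs in hypothetical latencies (1 ns for a hit, 100 ns extra for a miss); these numbers are assumptions for illustration only, not measurements of any particular machine.

```python
def average_access_time(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average time per access: every access pays the hit time, misses pay the penalty too."""
    return hit_time_ns + miss_rate * miss_penalty_ns

HIT_NS = 1.0             # hypothetical cache hit latency
MISS_PENALTY_NS = 100.0  # hypothetical extra cost of going to the next level

for miss_rate in (0.01, 0.10, 0.50):
    t = average_access_time(HIT_NS, miss_rate, MISS_PENALTY_NS)
    print(f"miss rate {miss_rate:.0%}: {t:.1f} ns per access")
```

Under these assumed latencies, moving from a 1% to a 10% miss rate makes the average access more than five times slower, even though the program itself is unchanged.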

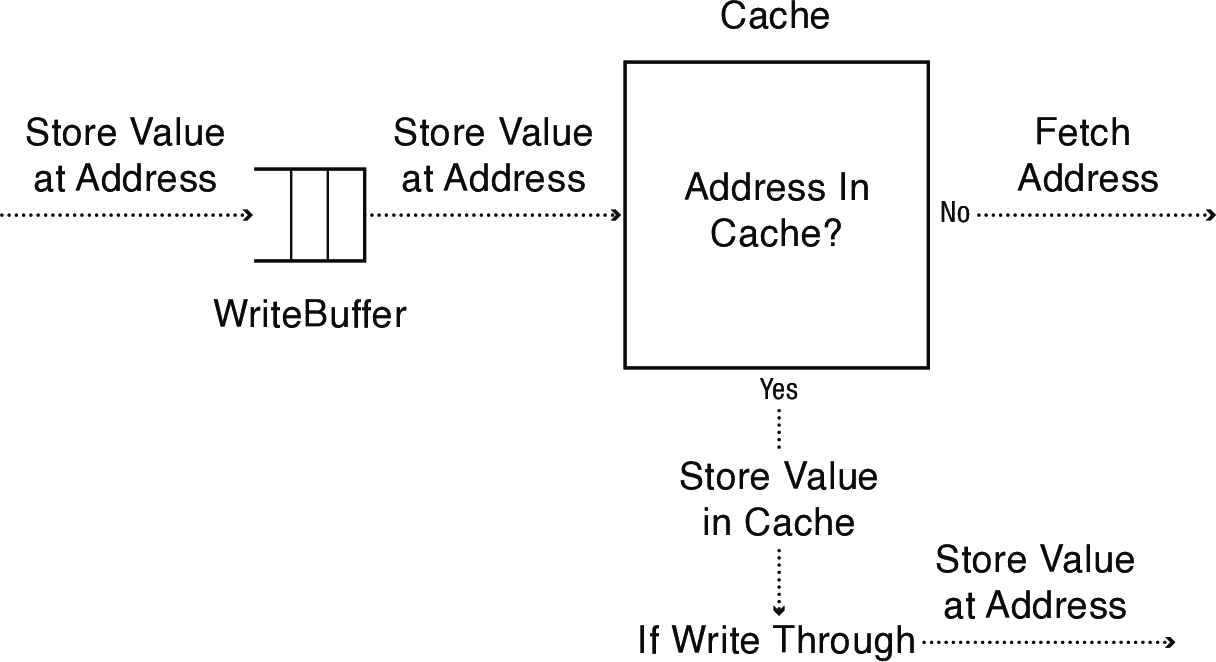

1.3 Writing to a Cache

- Two kinds of cache write behavior

- Write through: changes sent immediately to next level of storage

- Write back: changes stored in cache until cache block is replaced
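The difference between the two policies shows up in how often the next level of storage is written. The following is a minimal sketch, assuming a hypothetical two-block cache with FIFO eviction; the class and method names are made up for illustration and do not model any specific hardware.

```python
class WriteCache:
    """Toy cache that counts how many writes reach the next level of storage."""

    def __init__(self, capacity, write_back):
        self.capacity = capacity
        self.write_back = write_back
        self.blocks = {}            # block -> [value, dirty]; dict keeps insertion order
        self.next_level = {}        # stands in for the slower storage below the cache
        self.next_level_writes = 0

    def _write_next_level(self, block, value):
        self.next_level[block] = value
        self.next_level_writes += 1

    def _evict(self):
        victim = next(iter(self.blocks))        # oldest inserted block (FIFO, an assumption)
        value, dirty = self.blocks.pop(victim)
        if dirty:                               # only write-back caches hold dirty blocks
            self._write_next_level(victim, value)

    def write(self, block, value):
        if block not in self.blocks and len(self.blocks) == self.capacity:
            self._evict()
        if self.write_back:
            self.blocks[block] = [value, True]      # defer the write until eviction
        else:
            self.blocks[block] = [value, False]
            self._write_next_level(block, value)    # write through immediately

def run_trace(cache):
    for i in range(10):
        cache.write(0, i)    # ten writes to the same block
    cache.write(1, 100)
    cache.write(2, 200)      # cache is full, so block 0 is evicted
    return cache.next_level_writes

through = WriteCache(capacity=2, write_back=False)
back = WriteCache(capacity=2, write_back=True)
print(run_trace(through), run_trace(back))
```

In this trace the write-through cache sends every write to the next level, while the write-back cache sends only the final value of block 0 when it is evicted. The trade-off is that blocks 1 and 2 in the write-back cache have not reached the next level yet.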

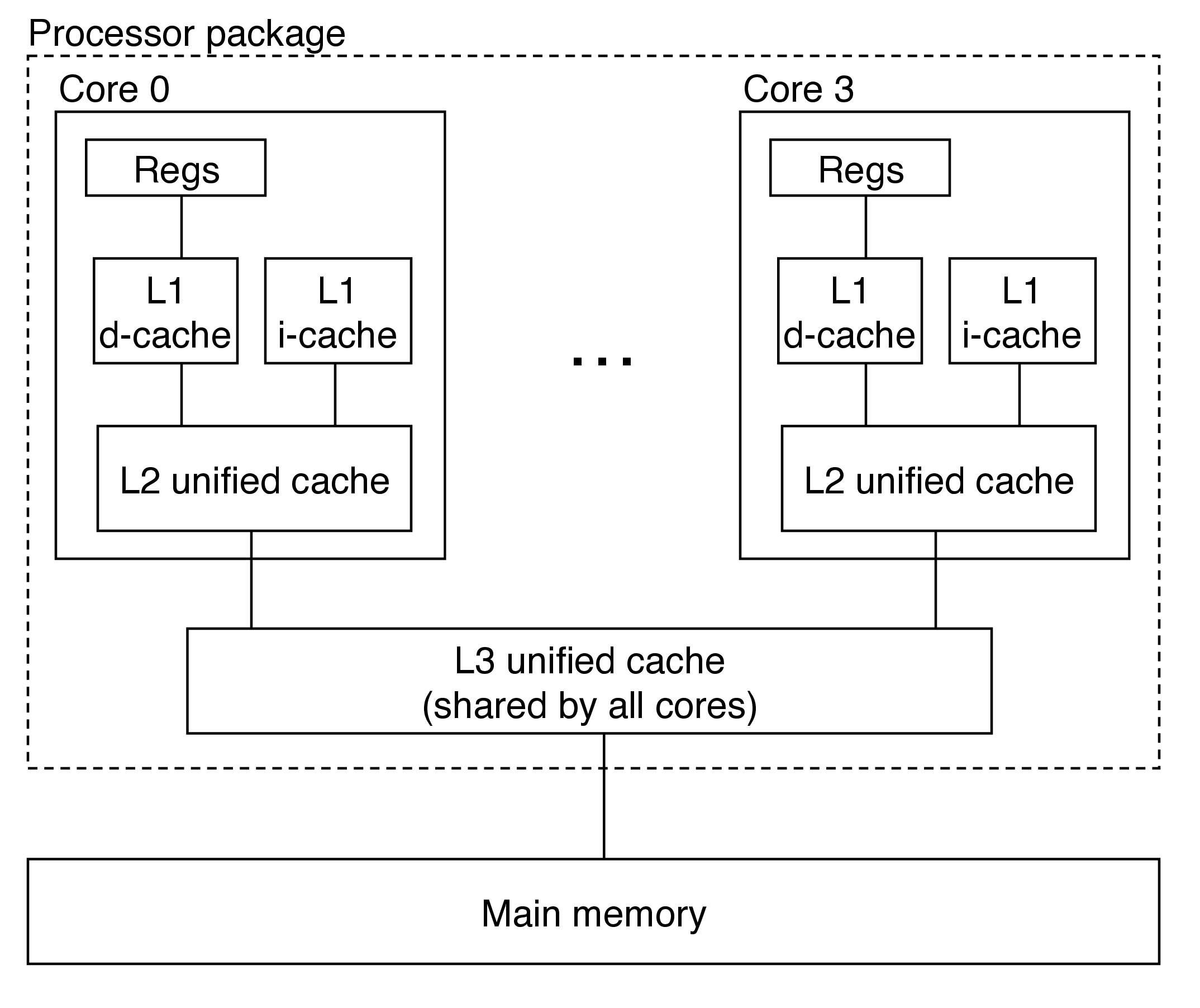

1.4 Example Cache Architectures

- Intel Core i7 (pictured above)

- L1 caches are 32 or 48 KB

- L2 caches range from 256 KB to 2 MB depending on specific model

- L3 caches range from 4 MB to 24 MB

- Apple M1 chip:

- 4 high-performance cores and 4 energy-efficient cores

- M1 Pro and M1 Max have 8 high-performance and 2 energy-efficient cores

- Each performance core has a 192 KB instruction cache and 128 KB data cache (L1)

- Each efficient core has a 128 KB instruction cache and 64 KB data cache (L1)

- Performance cores share a 12 MB L2 cache, efficient cores share a 4 MB L2 cache

- The entire system on a chip (SoC) has a shared 16 MB cache

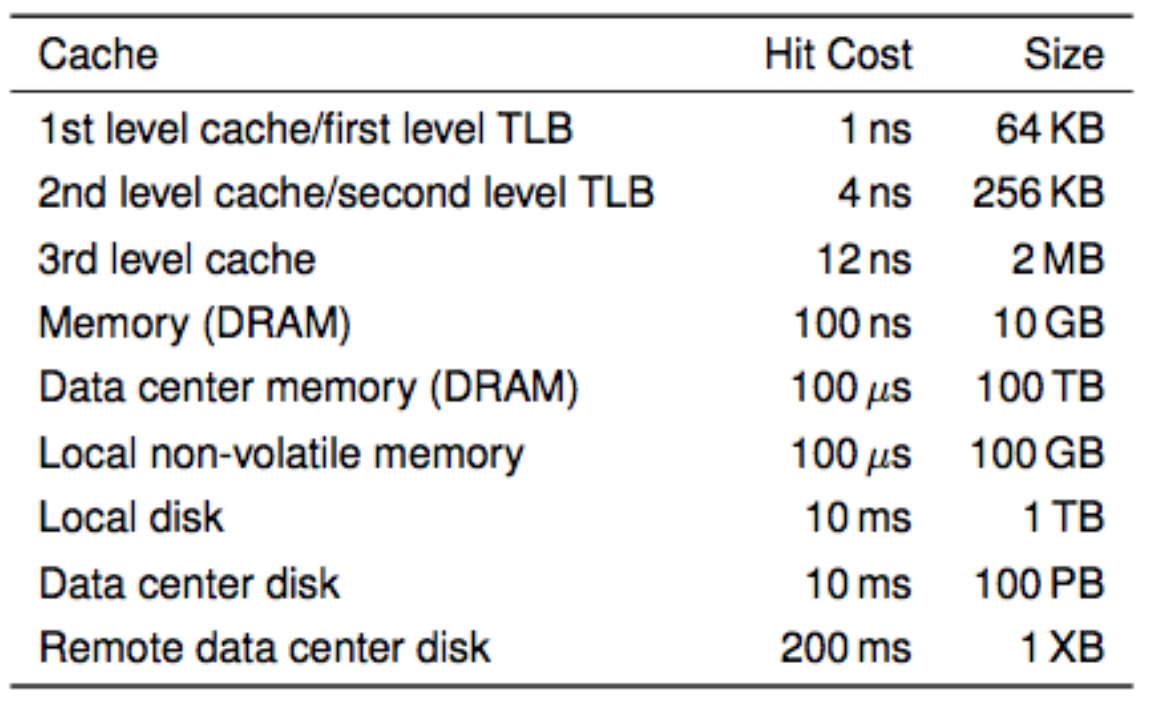

1.5 Memory Hierarchy

- Going up the hierarchy: memory gets smaller, faster, and more expensive (per byte)

- Going down the hierarchy: memory gets bigger, slower, and cheaper (per byte)

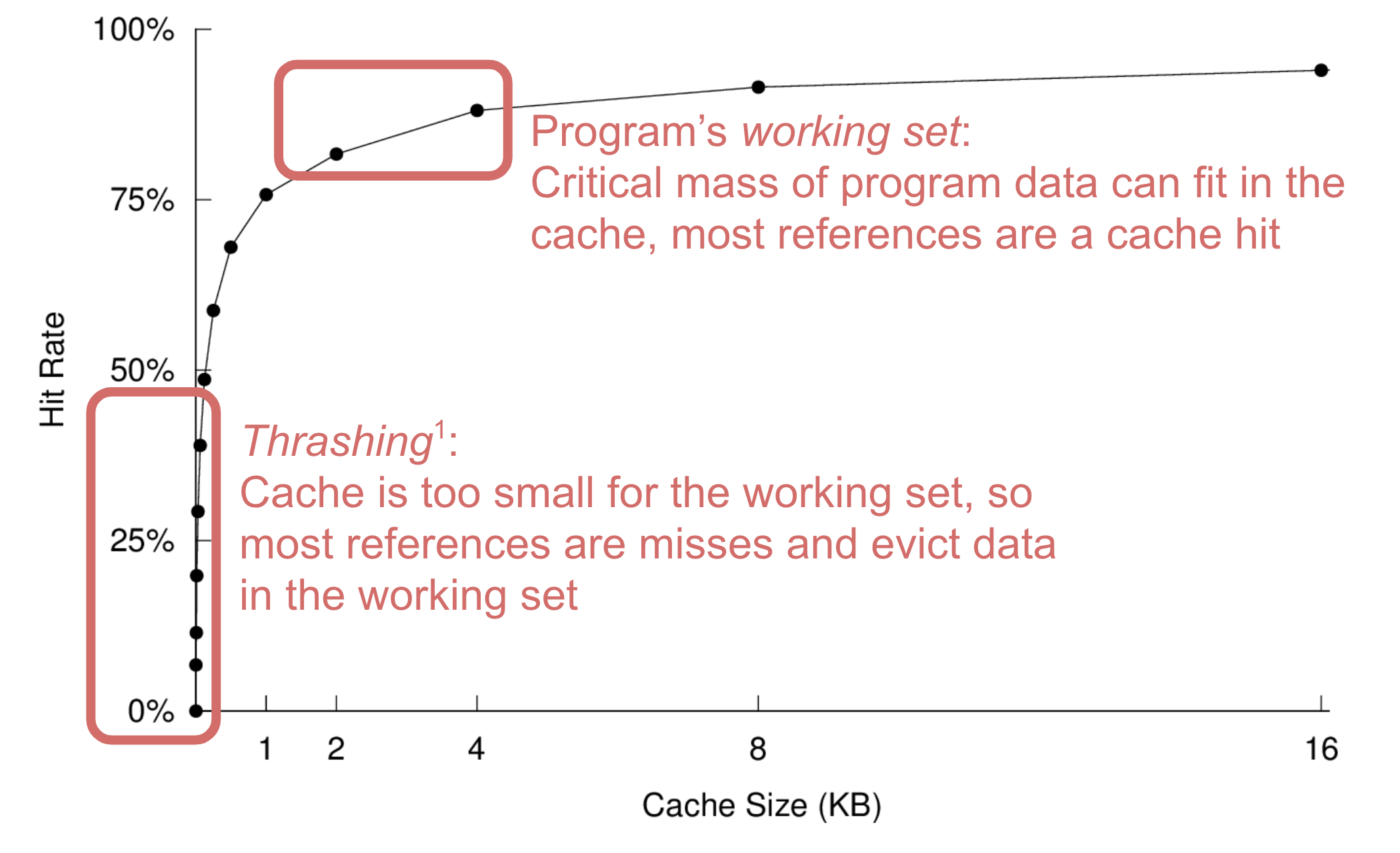

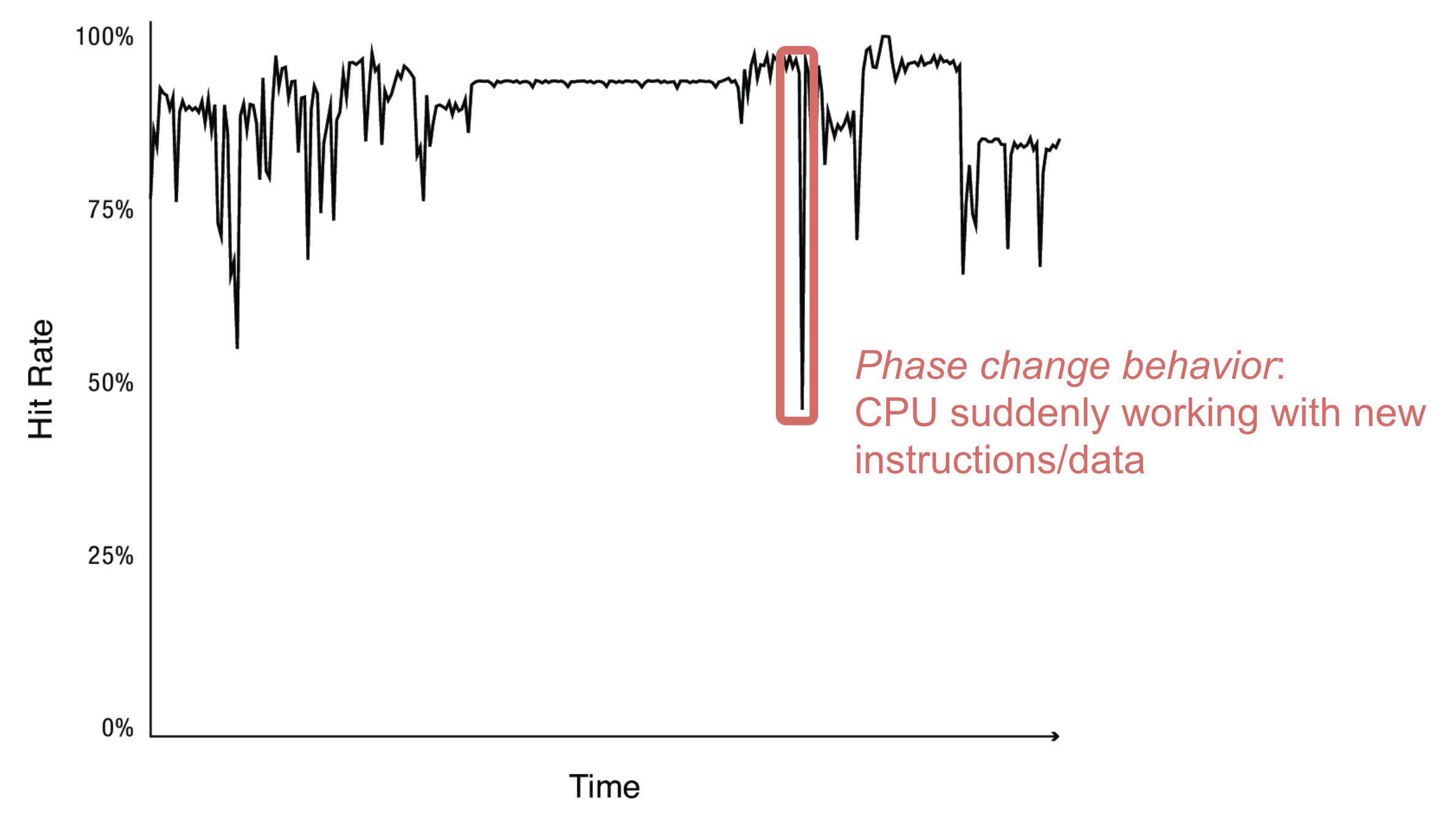

1.6 When Caches Work and When They Do Not

Events like context switches can cause a burst of misses, because the newly scheduled process's data is not yet in the cache.
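A small simulation can illustrate the burst. This is a sketch under assumed conditions: a four-entry LRU cache shared by two hypothetical processes, A and B, each looping over its own four blocks. The names and sizes are illustrative only.

```python
from collections import OrderedDict

CAPACITY = 4  # cache entries (hypothetical size)

def count_misses(cache, accesses):
    """Replay accesses through an LRU cache and return the number of misses."""
    misses = 0
    for key in accesses:
        if key in cache:
            cache.move_to_end(key)          # hit: mark as most recently used
        else:
            misses += 1
            if len(cache) == CAPACITY:
                cache.popitem(last=False)   # evict the least recently used entry
            cache[key] = None
    return misses

def working_set(process):
    """Twenty accesses looping over four blocks owned by one process."""
    return [(process, block) for block in range(4)] * 5

cache = OrderedDict()
schedule = ["A", "A", "B", "A"]   # time slices; the cache persists across them
misses = [count_misses(cache, working_set(p)) for p in schedule]
print(misses)
```

In this sketch the second consecutive slice of A runs without misses, but each switch (A to B, then B back to A) starts with a burst of misses while the incoming process reloads its working set.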

1.7 Types of Caches

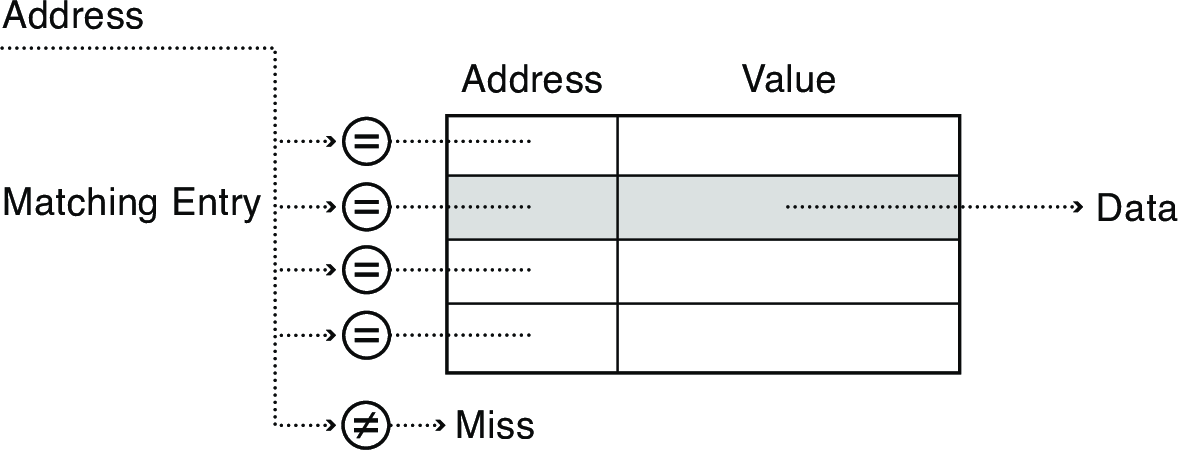

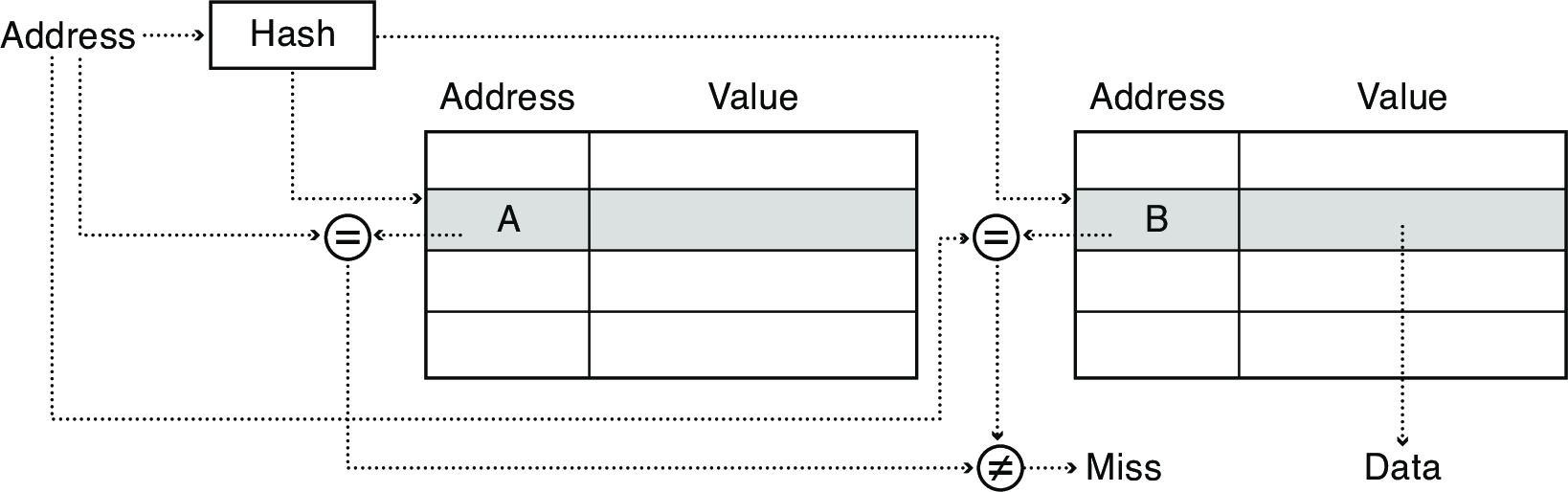

- Fully Associative cache

Cache checks address against every entry and returns matching value, if any

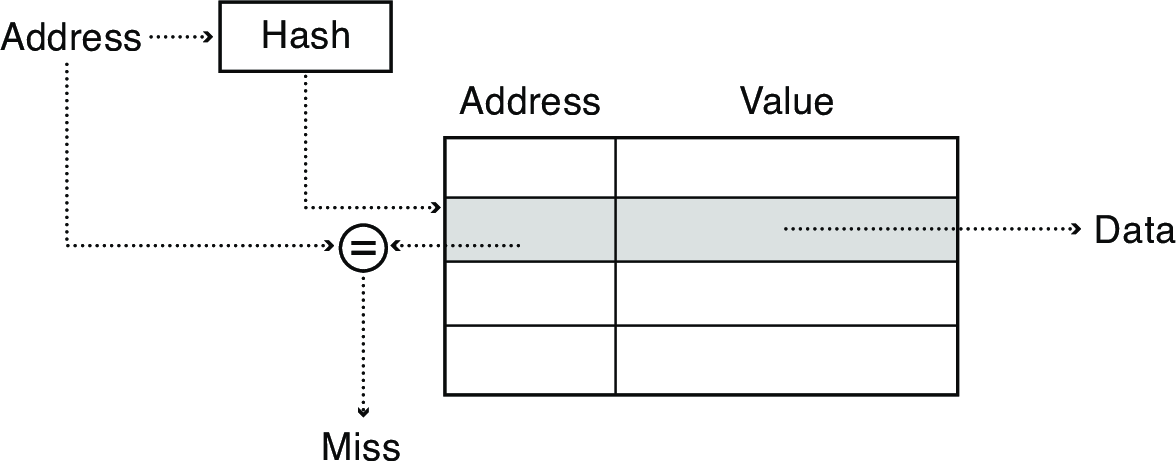

- Direct Mapped cache

Cache hashes address to determine which location to check for a match

- Set Associative cache

- Cache hashes address to determine which location to check

Checks each set in parallel for a match
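The three designs can be viewed as one structure with a different number of entries checked per lookup. The sketch below assumes a simple modulo as the hash and LRU replacement among the candidate entries; the class name, the `ways` parameter, and the trace are hypothetical choices for illustration. Real hardware compares the candidates in parallel, which this sequential code only imitates.

```python
class SimpleCache:
    """Toy cache with num_slots entries, where each address has `ways` candidate slots.

    ways == 1          behaves like a direct mapped cache
    ways == num_slots  behaves like a fully associative cache
    anything between   behaves like a set associative cache
    """

    def __init__(self, num_slots, ways):
        assert num_slots % ways == 0
        self.ways = ways
        self.num_indexes = num_slots // ways
        # One list per index, holding up to `ways` addresses, least recently used first.
        self.entries = [[] for _ in range(self.num_indexes)]

    def access(self, addr):
        """Return True on a hit; on a miss, load addr (evicting if needed) and return False."""
        candidates = self.entries[addr % self.num_indexes]  # modulo stands in for the hash
        if addr in candidates:
            candidates.remove(addr)
            candidates.append(addr)     # move to most recently used position
            return True
        if len(candidates) == self.ways:
            candidates.pop(0)           # evict the least recently used candidate
        candidates.append(addr)
        return False

def count_hits(cache, trace):
    return sum(cache.access(addr) for addr in trace)

trace = [0, 8, 0, 8, 0, 8]   # two addresses that collide under the modulo hash
print(count_hits(SimpleCache(8, 1), trace),   # direct mapped
      count_hits(SimpleCache(8, 2), trace),   # 2-way set associative
      count_hits(SimpleCache(8, 8), trace))   # fully associative
```

With this trace the direct mapped configuration never hits, because addresses 0 and 8 keep evicting each other from the same slot, while the other two configurations can hold both at once.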

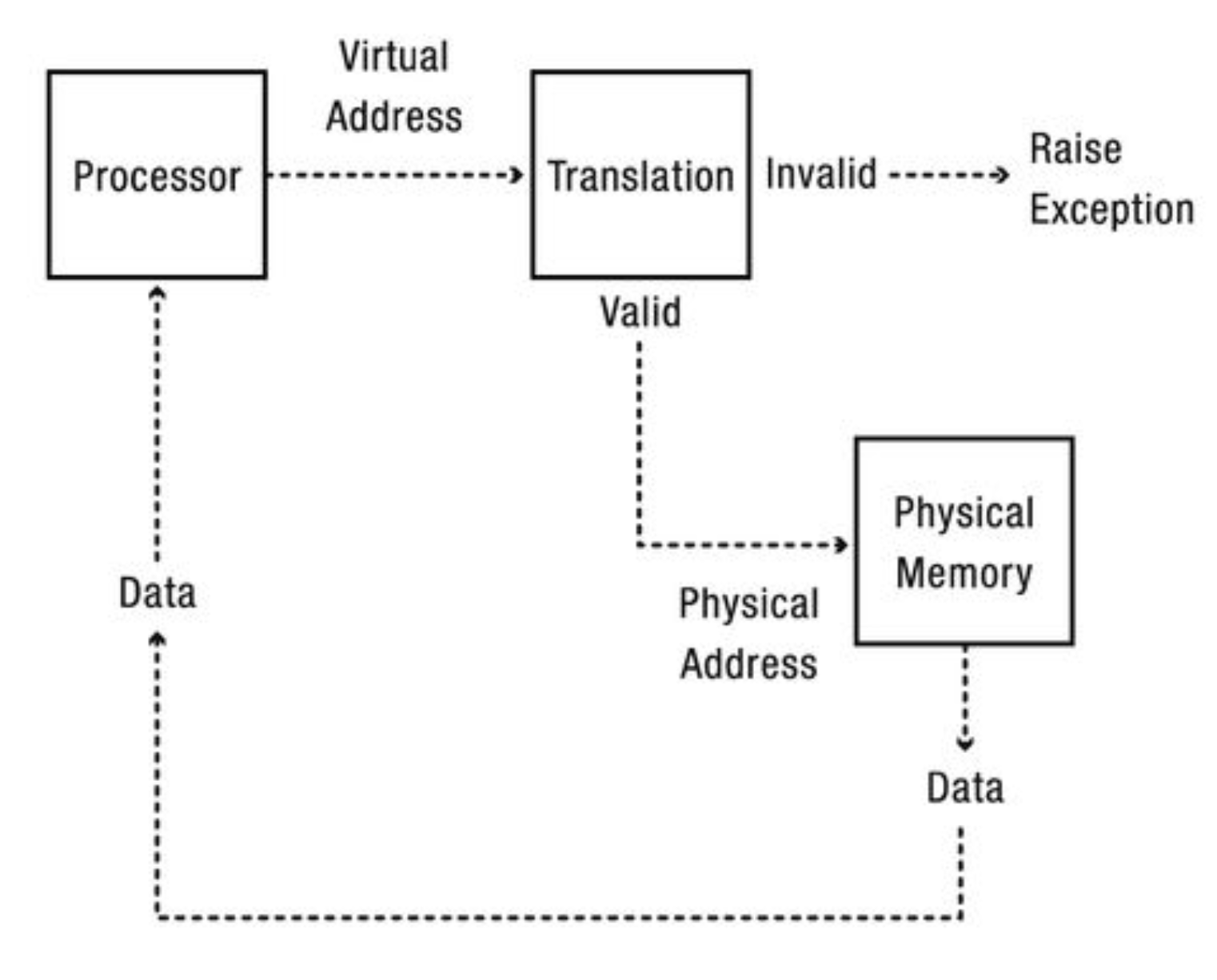

2 Address Translation

- Conversion from the memory address a program thinks it is referencing to the physical location of that memory cell

- Virtual address to physical address

- The range of memory addresses a program can use is called its address space

- Program behaves correctly despite its memory being stored somewhere completely different than where it thinks it is stored

- A useful fiction, transparent to the programmer

- Goals:

- Memory protection

- Memory sharing: shared libraries, interprocess communication

- Sparse addresses: multiple regions of dynamic allocation (stack/heap)

- Efficiency: memory placement, runtime lookup, compact translation tables

- Portability

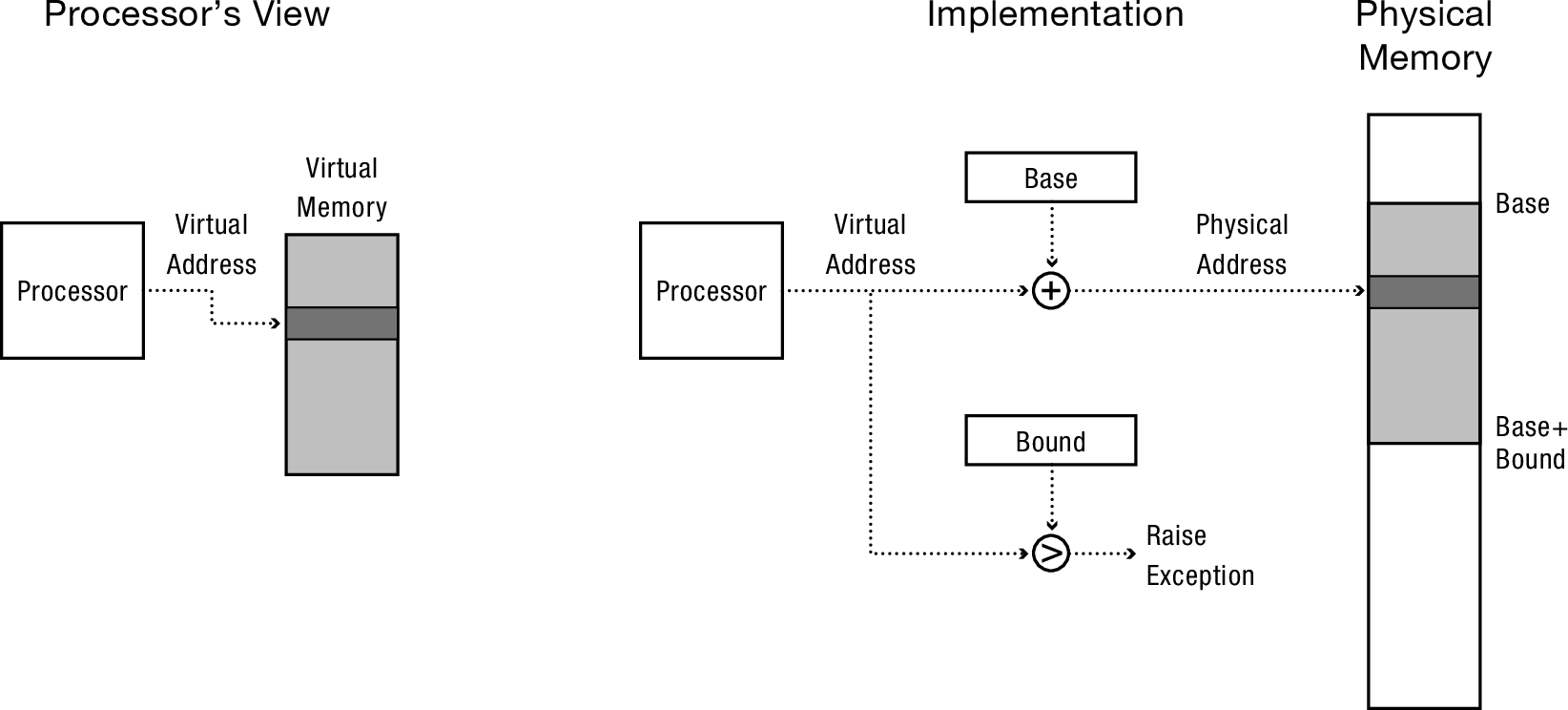

2.1 Base and Bound Hardware Translation

- Virtual addresses range from 0 to bound

- Physical addresses range from base to base + bound

- base and bound specific to each process, stored in special registers

- Saved and restored on context switch

- In a nutshell: each process reserves a range of physical memory

- Pros:

- Simple, fast, safe

- Can relocate physical memory without changing process

- Cons:

- Can't prevent process from overwriting its own code (hardware only checks whether an address exceeds the bound)

- Can't share code/data with other processes

- Can't grow stack/heap as needed
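The translation step itself is small enough to sketch in a few lines. This example assumes valid virtual addresses satisfy 0 <= address < bound, and the function name, exception name, and register values are hypothetical. In real systems the check and the addition are done by hardware on every memory reference.

```python
class BoundsError(Exception):
    """Raised when a virtual address falls outside the process's bound."""

def translate(virtual_addr, base, bound):
    """Base and bound translation: check against bound, then add base."""
    if not 0 <= virtual_addr < bound:      # assumption: bound itself is not a valid address
        raise BoundsError(f"virtual address {virtual_addr:#x} exceeds bound {bound:#x}")
    return base + virtual_addr

# Hypothetical register values for one process.
base, bound = 0x4000, 0x1000

print(hex(translate(0x10, base, bound)))     # lands inside the process's physical range

# Relocating the process only changes base; its virtual addresses stay the same.
print(hex(translate(0x10, 0x9000, bound)))

try:
    translate(0x1000, base, bound)           # one past the last valid virtual address
except BoundsError as err:
    print("fault:", err)
```

Note that the check says nothing about which part of the range is code and which is data, which is why this scheme cannot stop a process from overwriting its own code.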

3 Reading: Address Translation

OSTEP Chapter 15 (pp. 151–161) provides a good walkthrough of address translation with examples and additional context.