CS 332 w22 — The Kernel Abstraction


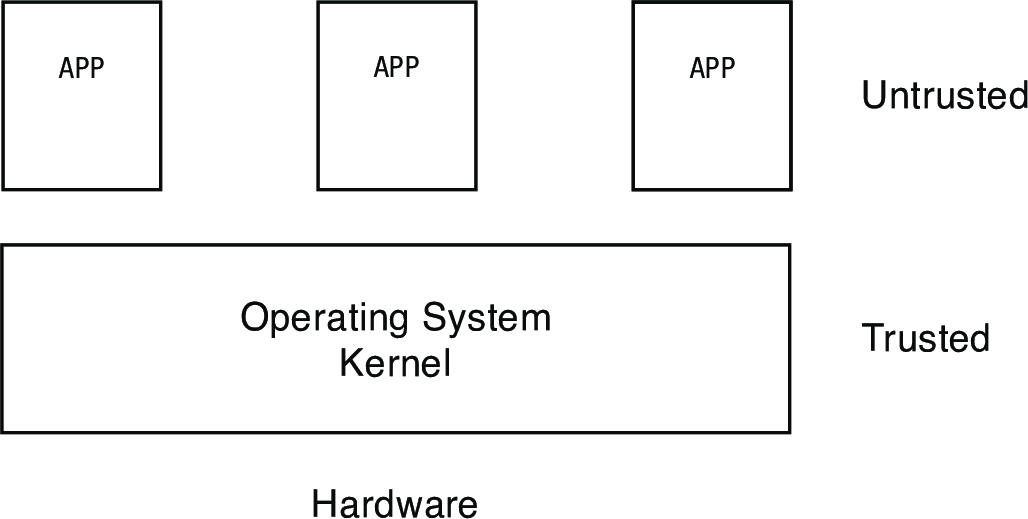

The operating system kernel has come up in nearly everything we've covered so far, so it's about time we dug into what's actually going on there. A key motivation behind the kernel is that we can never trust user applications. Whether through error, incompetence, or malign intent, user applications could cause terrible mischief if given unrestricted access to the system. The kernel serves as a vital intermediary to keep them under control.

1 Reading: Why Does an Operating System Have a Kernel?

Read the introduction (p. 1–3) of this reading from the OSPP book on the kernel abstraction.

2 Challenge: Protection

- How do we execute code with restricted privileges?

- Either because the code is buggy or because it might be malicious

- Some examples:

- A script running in a web browser

- A program you just downloaded off the Internet

- A program you just wrote that you haven't tested yet

2.1 A Problem

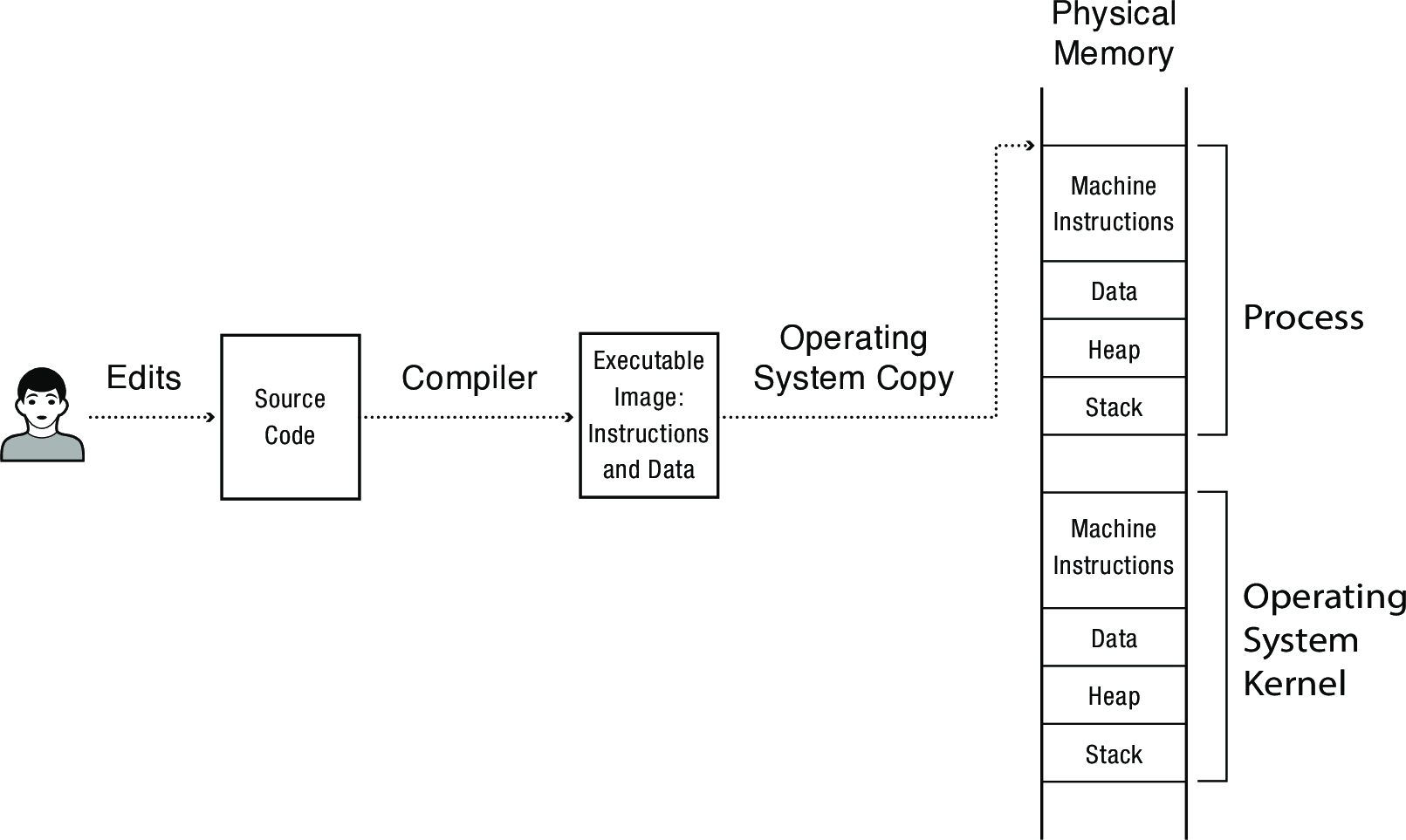

Untrusted or malicious code lives in the same memory on the same system as everything else, including the kernel:

Processes—the OS abstraction for executing a program with limited privileges—are one half of the solution for providing protection. The other half is dual-mode operation.

2.2 Solution: Dual-Mode Operation

- Kernel-mode: execute with complete privileges

- User-mode: execute with fewer privileges

- How can we implement execution with limited privilege?

- Execute each program instruction in a simulator

- If the instruction is permitted, do the instruction

- Otherwise, stop the process

- Basic model in JavaScript and other interpreted languages

- How do we go faster?

- Run the unprivileged code directly on the CPU!
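The simulator approach above can be sketched as a tiny interpreter loop that checks every instruction before running it. This is a toy model, not a real instruction set: the opcodes (OP_ADD, OP_OUT, OP_HALT) and the rule that OP_OUT is the only privileged instruction are hypothetical, chosen only to show the check-then-execute structure.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical toy instruction set, for illustration only. */
enum opcode { OP_ADD, OP_OUT, OP_HALT };

struct insn {
    enum opcode op;
    int operand;
};

/* Hypothetical rule: only OP_OUT (device output) is privileged. */
static bool is_privileged(enum opcode op) {
    return op == OP_OUT;
}

enum run_result { RUN_HALTED, RUN_KILLED };

/* Interpret a program one instruction at a time. Each instruction is
 * checked before it runs; a privileged instruction in user mode stops
 * the process instead of executing. */
enum run_result simulate(const struct insn *prog, size_t len,
                         bool user_mode, int *acc) {
    for (size_t pc = 0; pc < len; pc++) {
        if (user_mode && is_privileged(prog[pc].op))
            return RUN_KILLED;          /* not permitted: stop the process */
        switch (prog[pc].op) {
        case OP_ADD:  *acc += prog[pc].operand; break;
        case OP_OUT:  /* a real simulator would perform the I/O here */ break;
        case OP_HALT: return RUN_HALTED;
        }
    }
    return RUN_HALTED;
}
```

The per-instruction check in the loop is what makes this approach slow. Dual-mode hardware moves the same check into the processor, so unprivileged code can run directly on the CPU.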

2.2.1 Hardware Support

- Kernel mode

- Execution with the full privileges of the hardware

- Read/write to any memory, access any I/O device, read/write any disk sector, send/read any packet

- User mode

- Limited privileges

- Only those granted by the operating system kernel

- On the x86, the current privilege level is held in the low two bits of the CS (code segment) register

- x86 actually supports 4 privilege levels

- On the MIPS, mode in the status register

- Mode controls ability to execute privileged instructions

- Making them only available to the kernel

- What happens if code running in user mode attempts to execute a privileged instruction?

2.2.2 Crossing Protection Boundaries

- Q: So how does code running at user level (apps) do something privileged?

- e.g., how can it write to a disk if it can’t execute the I/O instructions that are needed to do I/O?

- A: Ask code that can (the OS) to do it for you.

- User programs must cause execution of a piece of OS code

- OS defines a set of system calls

- App code leaves a bunch of arguments to the call somewhere the OS can find them

- e.g., on the stack or in registers

- One of the arguments is a name for which system call is being requested

- usually a syscall number

- App somehow causes processor to elevate its privilege level to 0 (teaser for our next topic)
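On the kernel side, the syscall number typically selects an entry in a table of handler functions. Below is a minimal sketch assuming the arguments arrive in saved registers; the trap_frame layout, the syscall numbers, the handlers, and the -1 error return are all hypothetical, not any real OS's ABI.

```c
#include <stdint.h>

/* Hypothetical register state saved when the app traps into the kernel:
 * regs[0] holds the syscall number, regs[1..3] hold its arguments. */
struct trap_frame {
    int64_t regs[4];
};

typedef int64_t (*syscall_fn)(int64_t a, int64_t b, int64_t c);

/* Hypothetical handlers standing in for real kernel services. */
static int64_t sys_getpid(int64_t a, int64_t b, int64_t c) {
    (void)a; (void)b; (void)c;
    return 42;                       /* pretend process ID */
}

static int64_t sys_add(int64_t a, int64_t b, int64_t c) {
    (void)c;
    return a + b;                    /* toy call that uses two arguments */
}

/* Hypothetical syscall numbers are indices into this table. */
enum { SYS_GETPID = 0, SYS_ADD = 1, NSYSCALLS = 2 };

static const syscall_fn syscall_table[NSYSCALLS] = {
    [SYS_GETPID] = sys_getpid,
    [SYS_ADD]    = sys_add,
};

/* Runs in kernel mode after the trap. The syscall number comes from
 * untrusted user code, so it must be range-checked before indexing. */
int64_t dispatch_syscall(const struct trap_frame *tf) {
    int64_t num = tf->regs[0];
    if (num < 0 || num >= NSYSCALLS)
        return -1;                   /* hypothetical "no such syscall" error */
    return syscall_table[num](tf->regs[1], tf->regs[2], tf->regs[3]);
}
```

The range check matters because the kernel can never trust user applications: an unchecked number would let user code make the kernel jump through an arbitrary table entry.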

3 Reading: Dual-Mode Operation

Read section 2.2 (p. 4–17) of this reading from the OSPP book on the kernel abstraction.

- You can skim 2.2.2; we will discuss issues of memory in much greater depth starting in week 7.

- Don't worry about the details of figures 2.3 and 2.4; the key takeaway is that dual-mode operation requires hardware support.

Key questions to consider while you do the reading:

- What is the operating system kernel?

- Why do we want some instructions to be privileged even if this creates overhead for user processes?

- What is the role of a hardware timer and timer interrupts?


4 Other Hardware Support

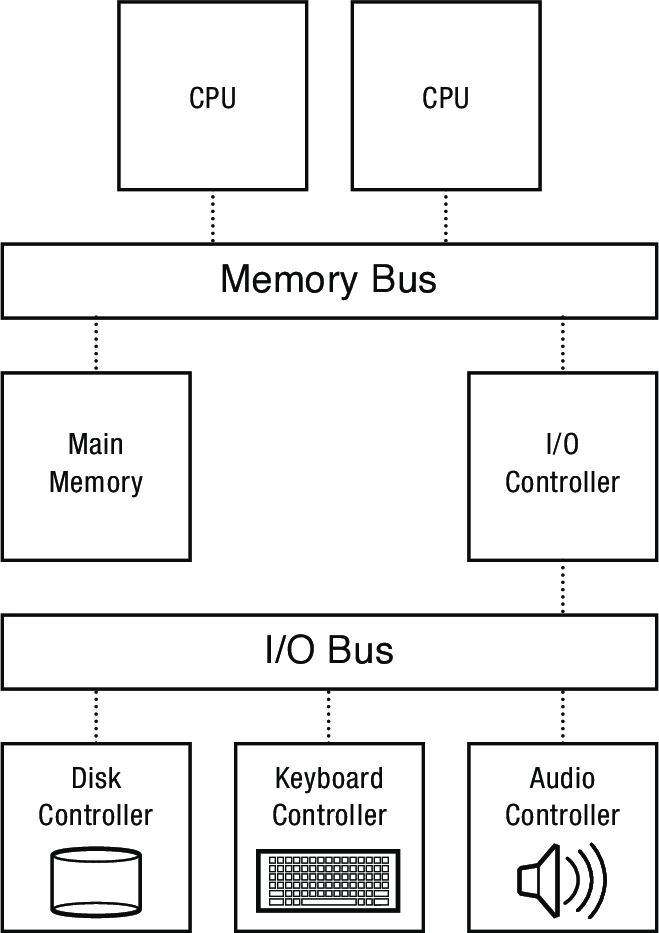

The operating system supports sharing of hardware and the protection of hardware. This includes allowing multiple applications to run concurrently and share resources, and preventing buggy or malicious applications from interfering with others. There are many mechanisms that can be used to achieve these high-level goals. The system architecture, including instruction set as well as hardware components, determines which approaches are viable (reasonably efficient, or even possible).

With dual-mode operation, we've seen one way in which hardware support helps accomplish this. Other important aspects of hardware support are summarized below.

4.1 Input/Output (I/O) control

- Issues:

- how does the OS start an I/O?

- special I/O instructions

- memory-mapped I/O


- how does the OS notice an I/O has finished?

- polling: kernel repeatedly uses memory-mapped I/O to read a status register on the device

- interrupts: device sends an interrupt to the processor when I/O is complete

- because I/O is often slow and irregular, interrupts are preferred

- how does the OS exchange data with an I/O device?

- Programmed I/O (PIO): CPU executes instructions to transfer a few bytes at a time

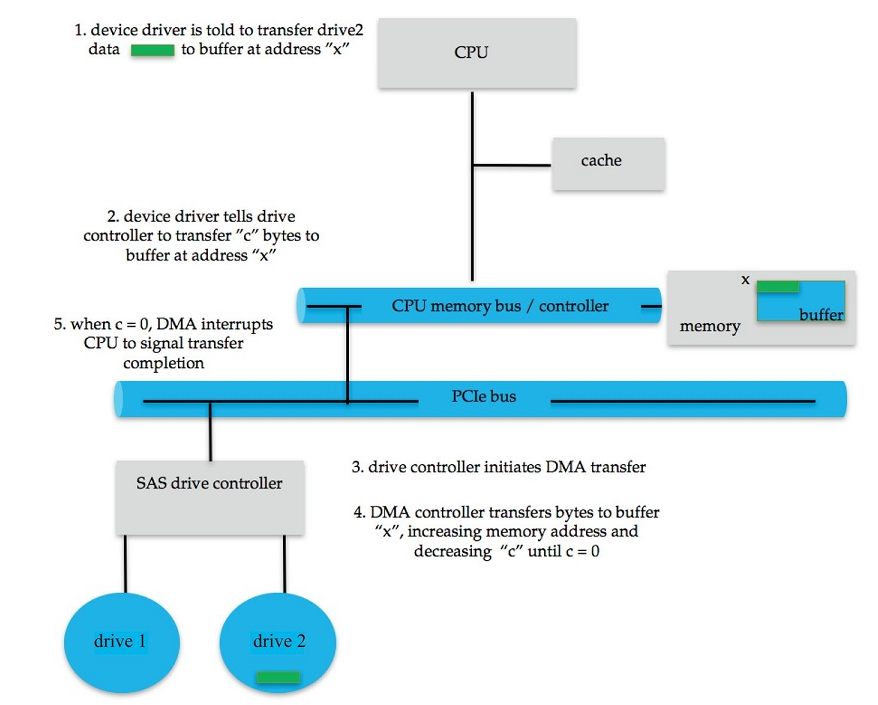

- Direct Memory Access (DMA): device copies a block of data directly from its own internal memory to system's main memory (see example diagram below)
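Polling can be sketched as a loop that keeps re-reading a device status register. Real kernel code would read a memory-mapped register through a volatile pointer; here the device is simulated in software so the sketch runs anywhere. The fake_device layout, the busy/done encoding, and read_status are hypothetical.

```c
#include <stdint.h>

/* Hypothetical device state. On real hardware, status would be a
 * memory-mapped register read through a volatile pointer. */
struct fake_device {
    uint32_t status;      /* 1 = busy, 0 = I/O complete */
    int work_left;        /* simulation only: reads until the I/O finishes */
};

/* Simulation only: each status read lets the fake device make progress. */
uint32_t read_status(struct fake_device *dev) {
    if (dev->work_left > 0 && --dev->work_left == 0)
        dev->status = 0;
    return dev->status;
}

/* Polling: spin until the device reports completion. Returns how many
 * reads came back "busy", i.e. CPU time spent doing nothing useful. */
int wait_by_polling(struct fake_device *dev) {
    int wasted = 0;
    while (read_status(dev) != 0)
        wasted++;
    return wasted;
}
```

The wasted count grows with how slow the device is, which is why interrupts are preferred when I/O is slow and irregular.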

4.1.1 Memory-Mapped I/O

- I/O devices typically connected to the system's memory bus

- Each device has a controller with a set of registers that can be written and read to transmit commands and data to and from the device

- e.g., a keyboard might have a register that can be read to get the most recent keypress

- To facilitate interacting with these registers, systems map them to a range of physical memory addresses on the memory bus

- Reads and writes to these addresses don't go to memory, they go directly to the I/O controller registers

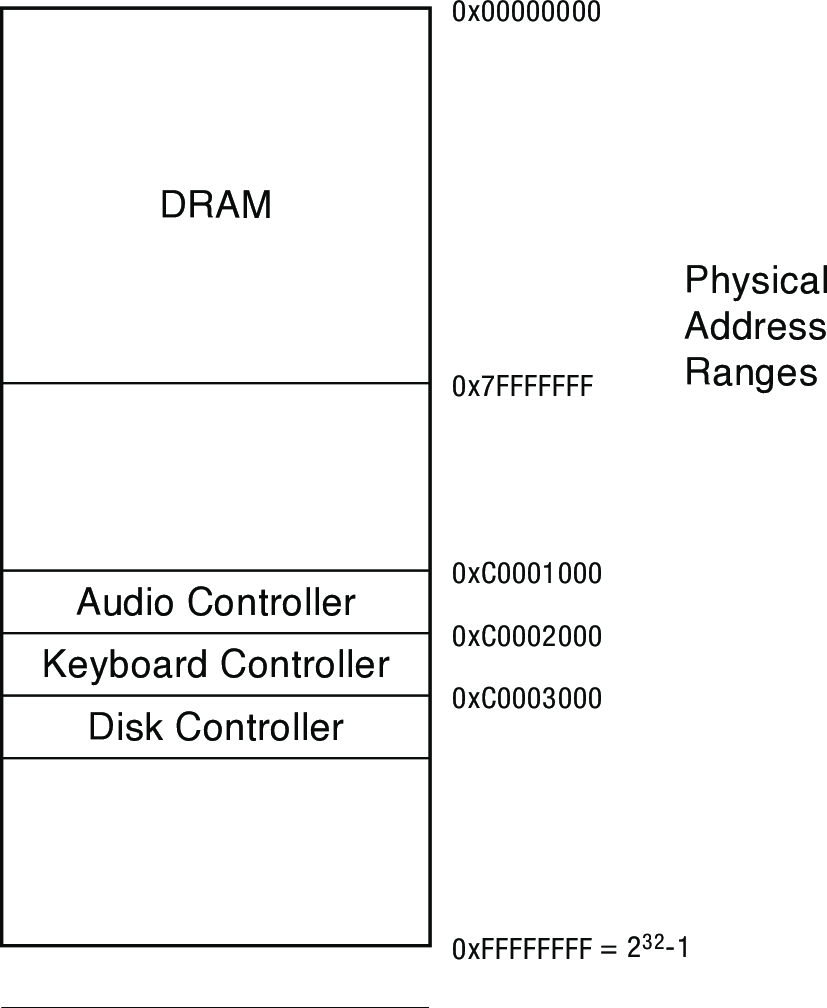

- The diagram below shows the physical address map for a system with a 32-bit physical address space (capable of addressing 4 GB of memory)

- The system's 2 GB of DRAM consume addresses

0x00000000to0x7fffffff - Controllers for each of the system's three I/O devices are mapped to addresses in the first few kilobytes above 3 GB

- The system's 2 GB of DRAM consume addresses
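Conceptually, the bus hardware routes each physical address using a map like the one described above. The sketch below expresses that routing as a C function. The DRAM range comes from the text; the three 4 KB device windows starting at 0xc0000000 (exactly 3 GB) are a hypothetical layout, since the precise device addresses appear only in the diagram.

```c
#include <stdint.h>

enum target { TARGET_DRAM, TARGET_DEVICE, TARGET_NONE };

#define DRAM_END       0x80000000u   /* DRAM: 0x00000000 to 0x7fffffff (2 GB) */
#define DEVICE_BASE    0xc0000000u   /* 3 GB */
#define DEVICE_WINDOW  0x1000u       /* hypothetical: 4 KB of registers per device */
#define NUM_DEVICES    3u

/* Decide where a 32-bit physical address is routed. For device
 * addresses, *dev receives the device index and *reg_offset the
 * offset of the register within that device's window. */
enum target decode(uint32_t paddr, unsigned *dev, uint32_t *reg_offset) {
    if (paddr < DRAM_END)
        return TARGET_DRAM;
    if (paddr >= DEVICE_BASE &&
        paddr < DEVICE_BASE + NUM_DEVICES * DEVICE_WINDOW) {
        *dev = (paddr - DEVICE_BASE) / DEVICE_WINDOW;
        *reg_offset = (paddr - DEVICE_BASE) % DEVICE_WINDOW;
        return TARGET_DEVICE;
    }
    return TARGET_NONE;              /* unmapped: nothing answers */
}
```

For example, under this assumed layout a load from 0xc0001004 would reach the register at offset 4 of the second device's controller rather than DRAM.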

4.1.2 Asynchronous I/O

- Interrupts are the basis for asynchronous I/O

- device performs an operation asynchronously to the CPU

- device sends an interrupt signal on the bus when done

- in memory, a vector table contains a list of addresses of kernel routines to handle various interrupt types

- CPU jumps to the handler address stored at the vector-table index specified by the interrupt signal

- What's the advantage of asynchronous I/O? [4]
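The vector-table dispatch described above can be modeled in C as an array of function pointers indexed by interrupt number. This only simulates in software what the CPU does in hardware; the vector numbers, table size, and handler names are hypothetical.

```c
#include <stddef.h>

#define NUM_VECTORS 8

typedef void (*handler_fn)(void);

/* Kernel-owned table: vector number -> handler address (all NULL at start). */
static handler_fn vector_table[NUM_VECTORS];

/* Hypothetical vector assignments, for illustration. */
enum { VEC_TIMER = 0, VEC_DISK = 1 };

int disk_done_count;   /* updated by the hypothetical disk handler */

void disk_handler(void) {
    disk_done_count++;   /* e.g., wake the process waiting on this I/O */
}

void register_handler(int vec, handler_fn fn) {
    vector_table[vec] = fn;
}

/* What the CPU conceptually does when a device raises interrupt `vec`:
 * look up the handler in the table and transfer control to it.
 * Returns 0 if a handler ran, -1 if the vector is invalid or unset. */
int raise_interrupt(int vec) {
    if (vec < 0 || vec >= NUM_VECTORS || vector_table[vec] == NULL)
        return -1;
    vector_table[vec]();
    return 0;
}
```

Between starting the I/O and the device raising the interrupt, nothing in this model waits on the device; the handler runs only when completion is signaled.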

4.2 Timers

- How can the OS prevent runaway user programs (e.g., infinite loops) from hogging the CPU?

- use a hardware timer that generates a periodic interrupt

- before it transfers to a user program, the OS loads the timer with a time to interrupt

- how long should this time be?

- when timer fires, an interrupt transfers control back to OS

- at which point OS must decide which program to schedule next

- very interesting policy question: we'll get into it in week 6

- Should access to the timer be privileged?

- for reading or for writing?
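The timer mechanism above can be simulated with a countdown that the kernel loads before running a program and that the hardware decrements on every tick. In this sketch both programs are runaway (they never give up the CPU voluntarily), yet each still gets a share of time. The quantum value and the round-robin choice of the next program are hypothetical placeholders for the scheduling policy discussed in week 6.

```c
#define NPROCS 2

/* Simulated per-process accounting: how many ticks each has run. */
static int ticks_run[NPROCS];

/* Simulate `total_ticks` clock ticks (quantum must be > 0). The kernel
 * loads the timer with `quantum` before each switch to user mode; the
 * running program never yields, so only the timer interrupt returns
 * control to the kernel. */
void simulate_timer(int quantum, int total_ticks) {
    int current = 0;
    int timer = quantum;                       /* kernel loads the timer */
    for (int t = 0; t < total_ticks; t++) {
        ticks_run[current]++;                  /* user program runs one tick */
        if (--timer == 0) {                    /* timer fires: trap to kernel */
            current = (current + 1) % NPROCS;  /* hypothetical policy: round robin */
            timer = quantum;                   /* reload before returning to user mode */
        }
    }
}

int get_ticks(int pid) { return ticks_run[pid]; }
```

With a quantum of 3 and 12 ticks, each program runs for 6 ticks. If user code could write the timer (say, reload it with a huge value), the kernel's reload step would be defeated.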

4.3 Synchronization

- Interrupts cause a wrinkle:

- may occur at any time, running handler code that can interfere with the code that was interrupted

- OS must be able to synchronize concurrent processes

- Synchronization:

- guarantee that short instruction sequences (e.g., read-modify-write) execute atomically (i.e., all at once with nothing happening in between)

- one method: turn off interrupts before the sequence, execute it, then re-enable interrupts

- architecture must support disabling interrupts

- should disabling some interrupts be privileged? Should the user be able to disable any?


- another method: have special complex atomic instructions

- read-modify-write

- test-and-set

- load-linked store-conditional
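Test-and-set can be used to build a simple spinlock. The sketch below uses C11's atomic_flag, whose atomic_flag_test_and_set sets the flag and returns its previous value in one atomic step; compilers typically implement it with an atomic exchange or a load-linked/store-conditional loop, depending on the architecture. The spinlock type and function names are our own.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* A minimal spinlock built on test-and-set. */
typedef struct {
    atomic_flag held;
} spinlock;

#define SPINLOCK_INIT { ATOMIC_FLAG_INIT }

/* Try once: test-and-set returns the old value, so getting `false`
 * back means the flag was clear and the caller now owns the lock. */
bool spin_trylock(spinlock *l) {
    return !atomic_flag_test_and_set(&l->held);
}

/* Keep retrying until the test-and-set finds the lock free. */
void spin_lock(spinlock *l) {
    while (atomic_flag_test_and_set(&l->held))
        ;   /* busy-wait: another thread holds the lock */
}

void spin_unlock(spinlock *l) {
    atomic_flag_clear(&l->held);
}
```

Unlike disabling interrupts, this works on a multi-core machine (disabling interrupts on one core does not stop the others) and needs no kernel privilege, at the cost of wasting CPU time while spinning.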

4.4 Concurrent programming

- Management of concurrency and asynchronous events is the biggest difference between "systems programming" and "traditional application programming"

- modern "event-oriented" application programming is a middle ground

- And in a multi-core world, more and more apps have internal concurrency

- Arises from the architecture

- That is, a much more restrictive architecture could force everything to be sequential (at great cost)

- Can be sugar-coated, but cannot be totally abstracted away

- Huge intellectual challenge

- Unlike vulnerabilities due to buffer overflows, which are just sloppy programming

4.5 Architectures are still evolving

- New features are still being introduced to meet modern demands

- Support for virtual machine monitors

- Hardware transaction support (to simplify parallel programming)

- Support for security (encryption, trusted modes)

- Increasingly sophisticated video / graphics

- Other stuff that hasn't been invented yet…

- In current technology transistors are (basically) free — CPU makers are looking for new ways to use transistors to make their chips more desirable

- Intel's big challenge: finding applications that require new hardware support, so that you will want to upgrade to a new computer to run them

Footnotes:

[1] The mode is represented by a single bit (or more, if there are more than two privilege levels) in the processor status register.

[2] This will trigger a processor exception that transfers control to an exception handler in the OS kernel. Typically, the kernel will just terminate the offending process.

[3] Hardware timer interrupts ensure that the OS can eventually regain control from any user process. If a user process could disable them, it could lock up the processor with no way for the kernel to intervene.

[4] The CPU can continue other work in the meantime.