CS 332 s20 — Paging: Faster Translations

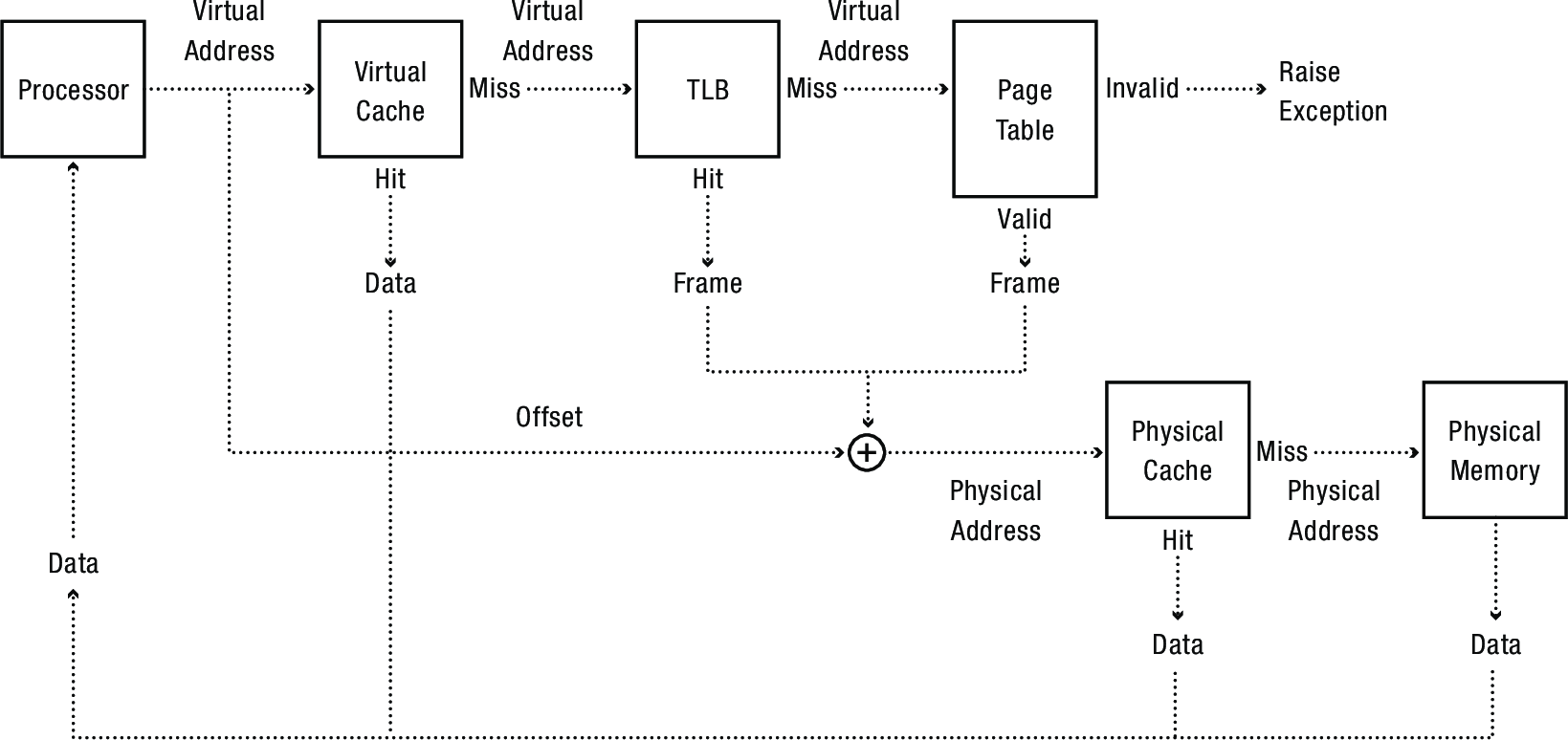

1 Memory lookups got you down? Use caches!

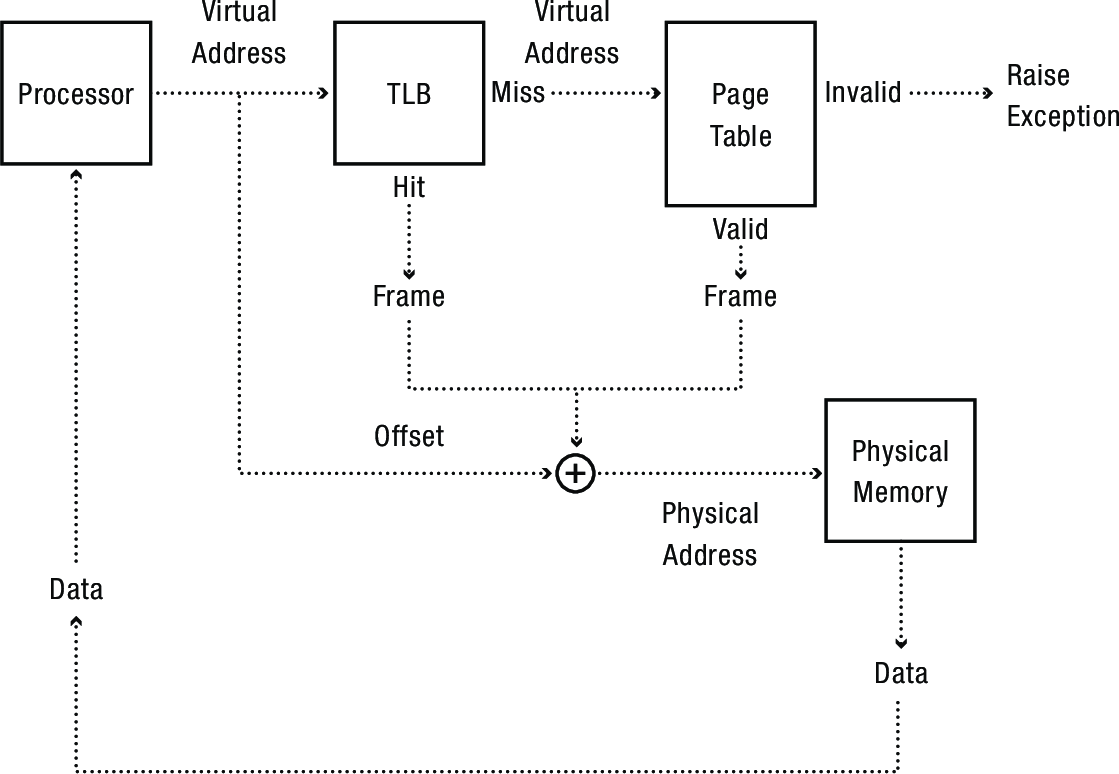

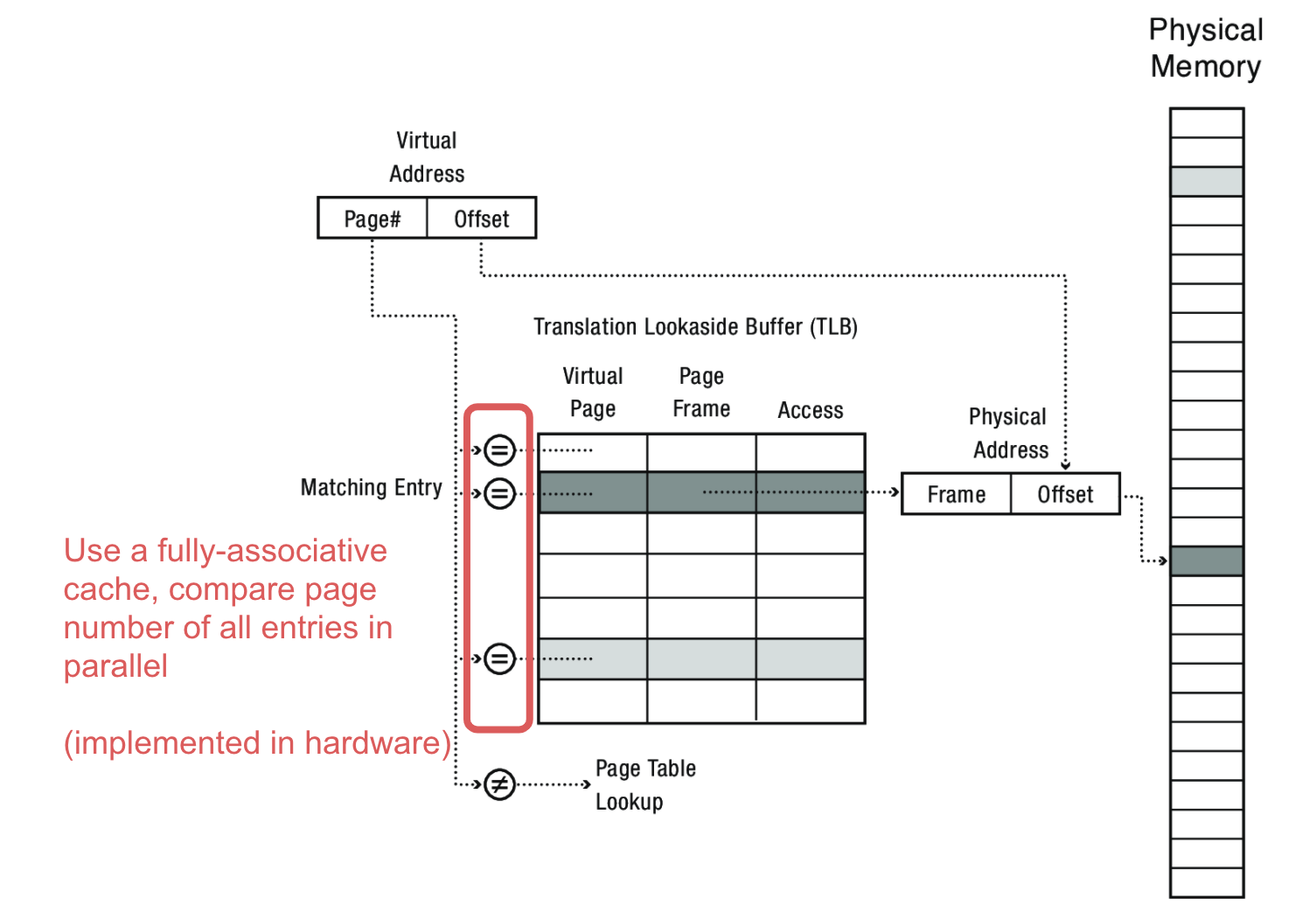

- Translation lookaside buffer (TLB)

- Cache of recent virtual page → physical page translations (a page table entry)

- If cache hit, use translation

- If cache miss, traverse the multi-level page table to perform the address translation

- Cost of translation =

- Cost of TLB lookup +

- Probability(TLB miss) * cost of page table lookup

- So if the cost of a TLB lookup is much less than the cost of a page table lookup and the probability of a miss is low, big performance win

- As with all caching, depends on locality

- If a program’s memory references are scattered across more pages than there are entries in the TLB, lots of misses
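
The cost formula and the locality point above can be tried out with a small simulation. This is a minimal sketch, not a model of real hardware: the `TLB` class, its LRU replacement policy, the dict standing in for the page table, and the helper names are all assumptions made for illustration.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed 4KB pages


class TLB:
    """Tiny fully associative TLB with LRU replacement (illustrative only)."""

    def __init__(self, num_entries):
        self.num_entries = num_entries
        self.entries = OrderedDict()  # virtual page number -> physical page number
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr, page_table):
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.entries:
            self.hits += 1
            self.entries.move_to_end(vpn)  # mark as most recently used
        else:
            self.misses += 1
            # A dict lookup stands in for the multi-level page table walk.
            self.entries[vpn] = page_table[vpn]
            if len(self.entries) > self.num_entries:
                self.entries.popitem(last=False)  # evict least recently used
        return self.entries[vpn] * PAGE_SIZE + offset

    def miss_rate(self):
        return self.misses / (self.hits + self.misses)


def translation_cost(tlb_cost, miss_rate, walk_cost):
    """Cost of TLB lookup + Probability(TLB miss) * cost of page table lookup."""
    return tlb_cost + miss_rate * walk_cost


def miss_rate_for(addresses, num_entries, page_table):
    """Run a sequence of virtual addresses through a fresh TLB."""
    tlb = TLB(num_entries)
    for vaddr in addresses:
        tlb.translate(vaddr, page_table)
    return tlb.miss_rate()
```

With a 4-entry TLB and 8 pages, reading each page start to finish misses only once per page, while cycling round-robin across all 8 pages misses on every access, because each page is evicted before it is touched again.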

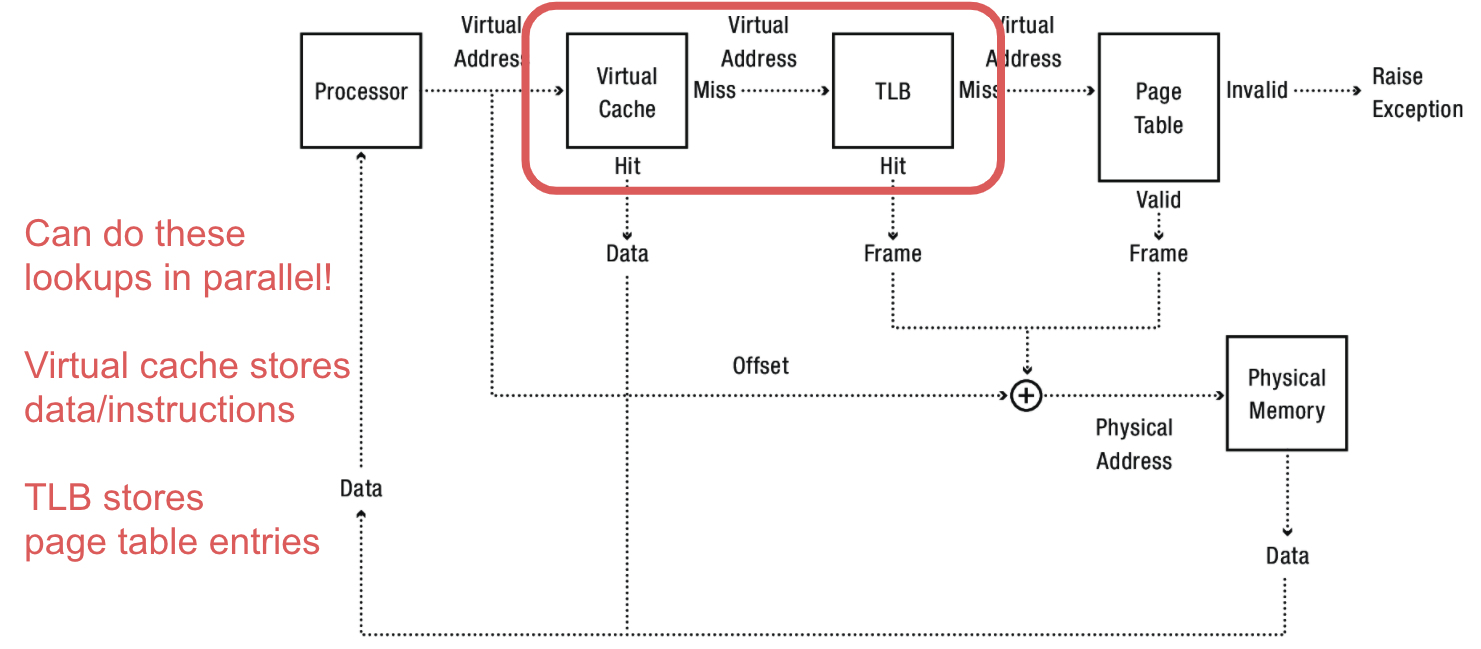

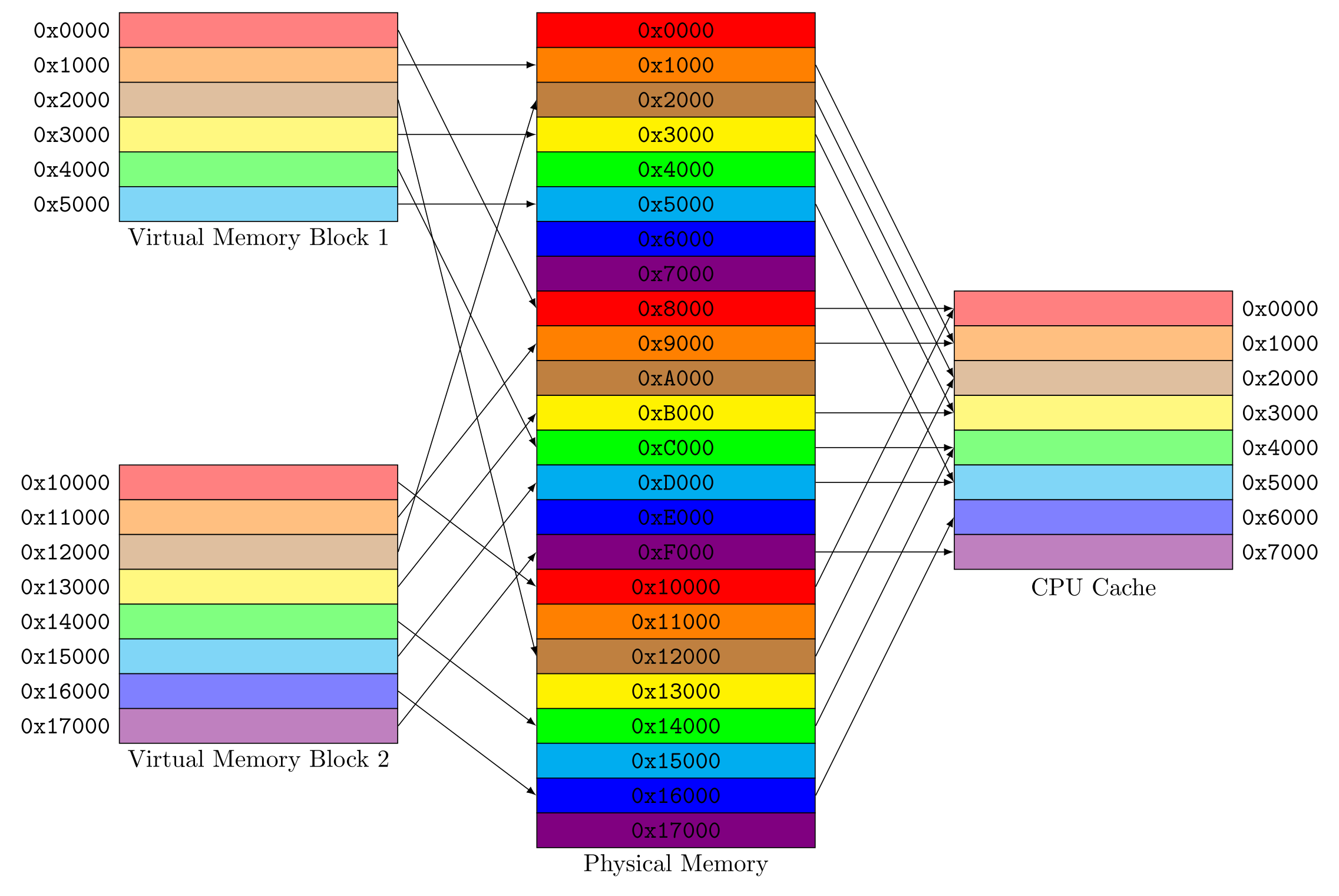

1.1 Still too slow? MORE CACHING

- Too slow to first access TLB to find physical address, then look up address in physical memory

- Instead, first level cache memory is virtually addressed

- That is, the data stored in the cache closest to the CPU is stored by its virtual address, not its physical address

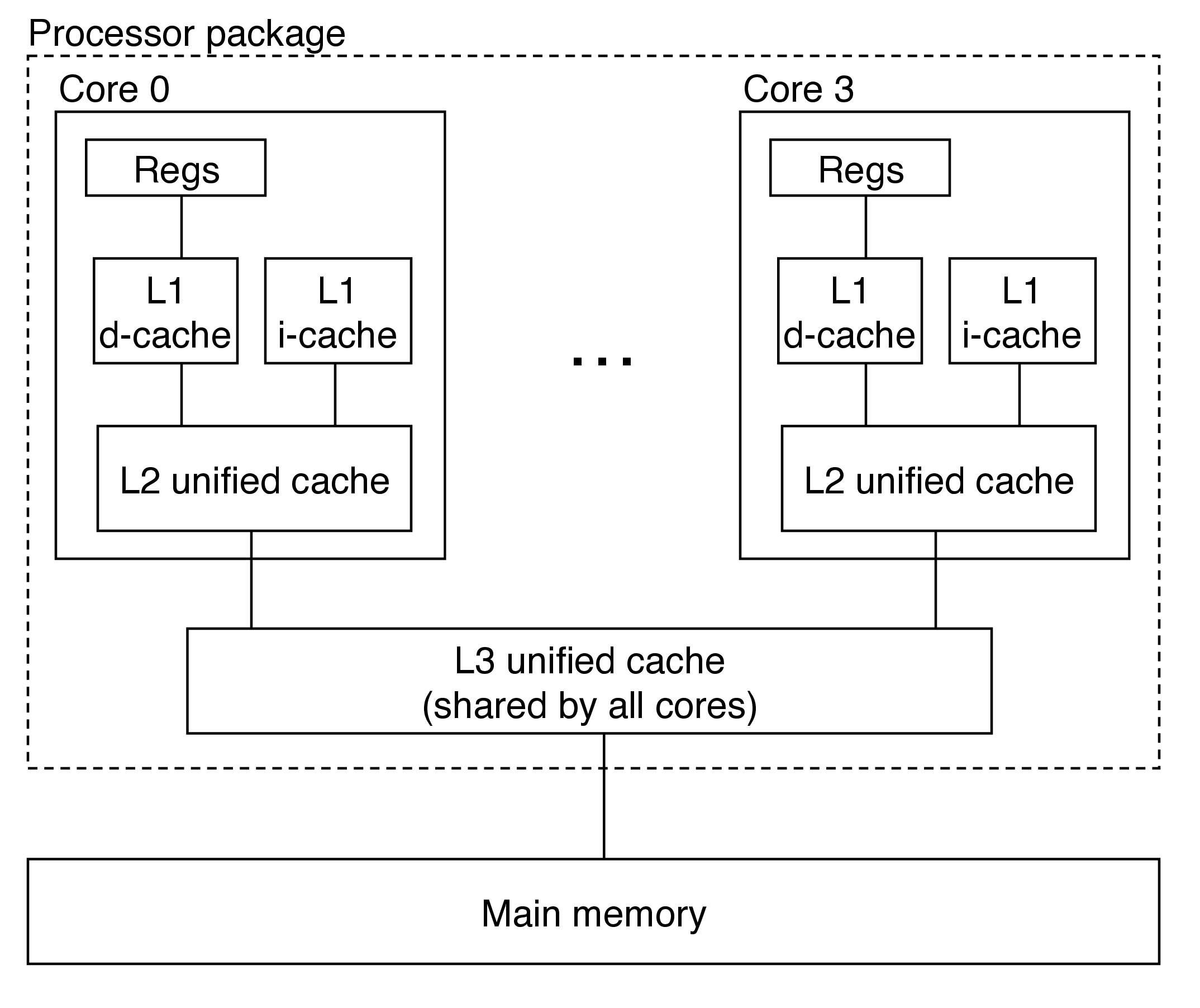

On the Intel Core i7, L1 caches are virtually indexed (though physically tagged), while L2 and L3 caches are physically addressed

Putting it all together:

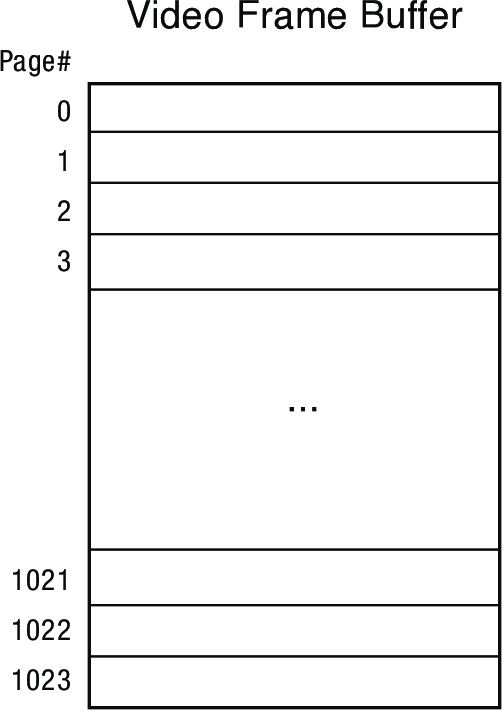

1.2 What if a common operation has bad locality?

- When redrawing the screen, CPU may touch every pixel

- HD display: 32 bits x 2K x 1K = 8MB = 2K pages

- Even a large TLB (256 entries) covers only 1MB with 4KB pages

- Repeated page table lookups

- Worse when drawing vertical line

- Frame buffer (2D array of pixel data) in row-major order

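- Each horizontal row of pixels is on a separate page (a 2K-pixel row at 4 bytes per pixel is 8KB, i.e., two 4KB pages), so every pixel of a vertical line touches a different page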
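
The arithmetic above can be checked directly. The sketch below assumes the frame buffer starts on a page boundary; `pixel_page` and the constant names are made up for this example.

```python
PAGE_SIZE = 4 * 1024        # 4KB pages
BYTES_PER_PIXEL = 4         # 32 bits per pixel
WIDTH, HEIGHT = 2048, 1024  # the "2K x 1K" display from the notes
TLB_ENTRIES = 256


def pixel_page(x, y):
    """Page number (relative to the start of the frame buffer) holding pixel (x, y)."""
    offset = (y * WIDTH + x) * BYTES_PER_PIXEL  # row-major layout
    return offset // PAGE_SIZE


frame_buffer_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
frame_buffer_pages = frame_buffer_bytes // PAGE_SIZE
tlb_reach_bytes = TLB_ENTRIES * PAGE_SIZE

# Distinct pages touched when drawing one full horizontal or vertical line.
horizontal_pages = {pixel_page(x, 0) for x in range(WIDTH)}
vertical_pages = {pixel_page(0, y) for y in range(HEIGHT)}
```

Under these assumptions a full horizontal line touches 2 pages, while a full vertical line touches 1024 pages, four times as many as a 256-entry TLB can hold.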

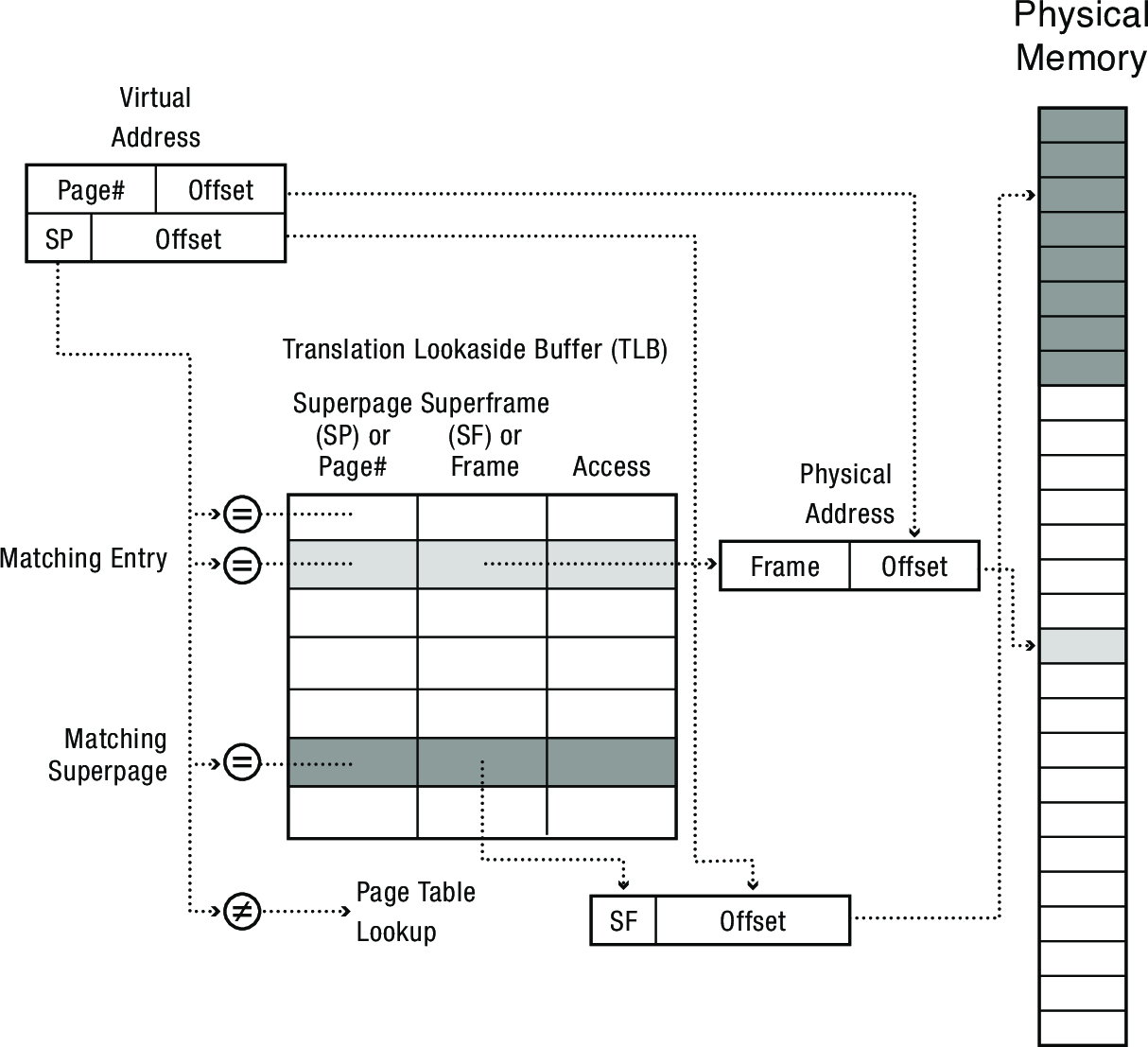

Solution: superpages

- On many systems, TLB entry can be

- A page

- A superpage: a set of contiguous pages

- x86: superpage is set of pages in one page table

- x86 TLB entries

- One page: 4KB

- One page table: 2MB

- One page table of page tables: 1GB

(not shown in diagram: each TLB entry has a flag indicating whether it is a regular page or a superpage)

- Pros:

- Vastly fewer TLB entries for large contiguous regions of memory

- Cons:

- Back to more complex memory management due to variable-sized units

- Somewhat more complex TLB lookups/design
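
To see how much superpages help, the sketch below counts the TLB entries needed to cover a region and models a lookup over entries of mixed sizes. It is only an illustration: `SuperpageTLB` and `entries_needed` are hypothetical names, and real hardware does not search the sizes one after another in a loop like this.

```python
KB, MB, GB = 1024, 1024**2, 1024**3
PAGE_SIZES = (4 * KB, 2 * MB, 1 * GB)  # x86 page and superpage sizes from the notes


def entries_needed(region_bytes, page_size):
    """Number of TLB entries required to cover a contiguous region."""
    return -(-region_bytes // page_size)  # ceiling division


class SuperpageTLB:
    """Toy TLB whose entries are tagged with their page size."""

    def __init__(self):
        self.entries = {}  # (page size, virtual page number) -> physical base address

    def insert(self, vaddr, paddr, page_size):
        # A page or superpage must be aligned to its own size.
        if vaddr % page_size or paddr % page_size:
            raise ValueError("addresses must be aligned to the page size")
        self.entries[(page_size, vaddr // page_size)] = paddr

    def lookup(self, vaddr):
        # Try each size; the size decides how many low bits are the offset.
        for page_size in PAGE_SIZES:
            base = self.entries.get((page_size, vaddr // page_size))
            if base is not None:
                return base + vaddr % page_size
        return None  # TLB miss
```

For the 8MB frame buffer discussed earlier, `entries_needed(8 * MB, 4 * KB)` is 2048 while `entries_needed(8 * MB, 2 * MB)` is 4.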

1.3 Keeping caches consistent

1.3.1 OS changes page permissions

- A common and very useful tool

- For demand paging, copy on write, zero on reference, …

- TLB may contain old entry

- When would this be ok? When would it be a problem?

- If permissions are reduced (e.g., copy-on-write), a stale entry could allow a bad operation

- OS must ask hardware to purge TLB entry

- Early computers discarded the entire TLB; modern architectures support removing individual entries
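
The stale-entry problem can be reproduced in a few lines. This is a toy model under stated assumptions: the `MMU` class, its method names, and the `(ppn, writable)` entry layout are invented for the example and do not correspond to any real hardware interface.

```python
class MMU:
    """Toy MMU whose TLB caches copies of page table entries."""

    def __init__(self, page_table):
        self.page_table = page_table  # vpn -> (ppn, writable), maintained by the OS
        self.tlb = {}                 # cached copies; can go stale

    def can_write(self, vpn):
        if vpn not in self.tlb:
            self.tlb[vpn] = self.page_table[vpn]  # fill on a miss
        _ppn, writable = self.tlb[vpn]
        return writable

    def invalidate(self, vpn):
        """Purge a single entry (what modern architectures support)."""
        self.tlb.pop(vpn, None)

    def flush(self):
        """Discard the entire TLB (what early computers did)."""
        self.tlb.clear()
```

If the OS marks page 7 read-only for copy-on-write after the TLB has already cached it as writable, `can_write(7)` keeps returning `True` until `invalidate(7)` or `flush()` is called.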

1.3.2 Multiple CPUs

- With multiple CPUs, there is an additional complication: each CPU has its own TLB

- Thus, old entries must be removed from every CPU

- CPU making the change must initiate TLB shootdown

- Send interrupt to each other processor

- Each has to stop, clean TLB, then resume

- Original CPU has to wait until all others have handled the interrupt

- TLB shootdown can have high overhead on systems with many CPUs, so OS may batch TLB shootdown requests to reduce number of interrupts
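
The shootdown steps above can be sketched as a single-threaded simulation. A direct method call stands in for the inter-processor interrupt and its acknowledgement, and `CPU` and `tlb_shootdown` are hypothetical names, so this shows the bookkeeping rather than the real concurrency.

```python
class CPU:
    def __init__(self, cpu_id):
        self.cpu_id = cpu_id
        self.tlb = {}        # vpn -> ppn, private to this CPU
        self.interrupts = 0  # shootdown interrupts this CPU has handled

    def handle_shootdown(self, vpns):
        """Interrupt handler: stop, clean the TLB, acknowledge, resume."""
        self.interrupts += 1
        for vpn in vpns:
            self.tlb.pop(vpn, None)
        return True  # acknowledgement back to the initiator


def tlb_shootdown(initiator, cpus, vpns):
    """Remove vpns from every CPU's TLB; return the number of interrupts sent."""
    for vpn in vpns:
        initiator.tlb.pop(vpn, None)  # the initiator cleans its own TLB directly
    # One interrupt per other CPU, no matter how many pages are in the batch.
    acks = [cpu.handle_shootdown(vpns) for cpu in cpus if cpu is not initiator]
    if not all(acks):
        raise RuntimeError("a CPU did not acknowledge the shootdown")
    return len(acks)
```

Passing several pages in one call models batching: on a 4-CPU system, shooting down two pages together costs 3 interrupts, versus 6 if each page were handled separately.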

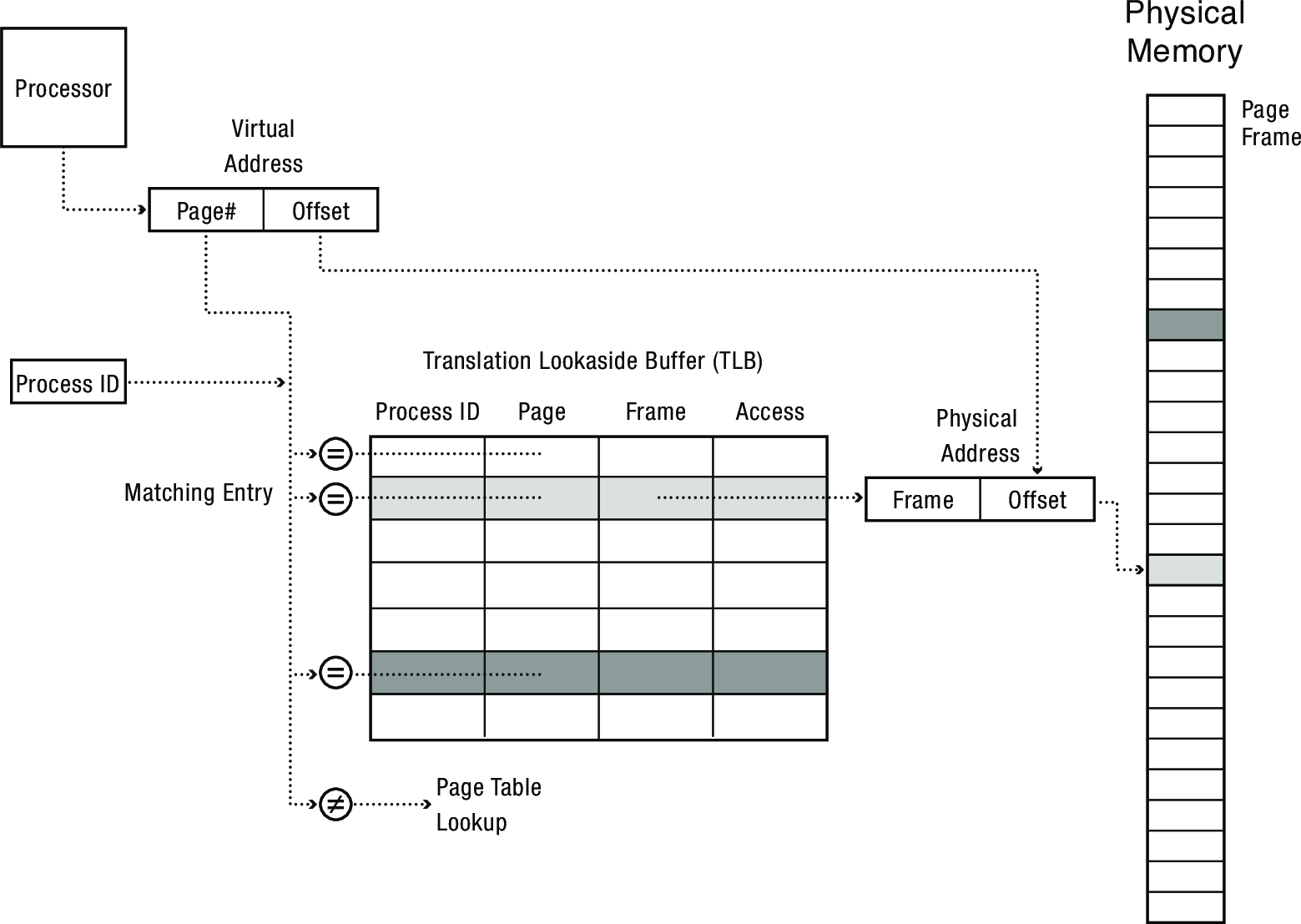

1.3.3 Context switch

- Reuse TLB?

- New process would use the old process's translations, and so read the old process's data (bad)

- Discard TLB?

- Flush the TLB on every switch

- Performance suffers

- Solution: Tagged TLB

- Each TLB entry has process ID

- TLB hit only if process ID matches current process
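
A tagged TLB can be sketched by making the process ID part of the lookup key. The `TaggedTLB` class below is a hypothetical illustration; it ignores capacity, replacement, and how process IDs are assigned or recycled.

```python
class TaggedTLB:
    """Toy TLB where every entry carries the ID of the process that owns it."""

    def __init__(self):
        self.entries = {}        # (process ID, vpn) -> ppn
        self.current_pid = None

    def context_switch(self, pid):
        self.current_pid = pid   # no flush: old entries simply stop matching

    def insert(self, vpn, ppn):
        self.entries[(self.current_pid, vpn)] = ppn

    def lookup(self, vpn):
        # Hit only if both the page number and the process ID match.
        return self.entries.get((self.current_pid, vpn))
```

After a switch from process 1 to process 2, a lookup of a page that only process 1 has cached misses; switching back to process 1 finds its entries still in place.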

2 Software-managed TLB

Could we handle a TLB miss in software instead of hardware?

- MIPS software-managed TLB

- MIPS is an instruction set architecture like Intel's x86 or ARM

- Software defined translation tables

- If translation is in TLB, cache hit as normal

- If translation is not in TLB, trap to kernel

- Kernel computes translation and loads TLB

- Using privileged instructions to manage the TLB directly

- Pros/cons?

- Pro: flexibility, OS can use whatever data structure it wants to handle address translation

- Pro: TLB hardware can be simpler, just has to trigger an exception on a miss

- Con: adds cost of kernel trap to a TLB miss (potentially significant overhead)
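
The control flow of a software-managed TLB can be sketched as follows. This is not MIPS code: a Python exception stands in for the trap, `tlb_write` stands in for a privileged TLB-write instruction, and all class and function names are assumptions for illustration.

```python
PAGE_SIZE = 4096  # assumed 4KB pages


class TLBMiss(Exception):
    """Stand-in for the hardware exception raised on a TLB miss."""

    def __init__(self, vpn):
        super().__init__(f"TLB miss on virtual page {vpn}")
        self.vpn = vpn


class Hardware:
    """The hardware only searches the TLB; it never walks a page table."""

    def __init__(self, num_entries):
        self.num_entries = num_entries
        self.tlb = {}  # virtual page number -> physical page number

    def translate(self, vaddr):
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.tlb:
            raise TLBMiss(vpn)  # trap to the kernel
        return self.tlb[vpn] * PAGE_SIZE + offset

    def tlb_write(self, vpn, ppn):
        """Stand-in for a privileged 'write TLB entry' instruction."""
        if vpn not in self.tlb and len(self.tlb) >= self.num_entries:
            self.tlb.pop(next(iter(self.tlb)))  # evict the oldest entry
        self.tlb[vpn] = ppn


class Kernel:
    def __init__(self, hardware, translations):
        self.hardware = hardware
        self.translations = translations  # any structure the OS likes; a dict here
        self.traps = 0

    def handle_tlb_miss(self, vpn):
        self.traps += 1  # every miss pays for a kernel trap
        self.hardware.tlb_write(vpn, self.translations[vpn])


def access(hardware, kernel, vaddr):
    """Translate vaddr, retrying the access after the kernel handles a miss."""
    try:
        return hardware.translate(vaddr)
    except TLBMiss as miss:
        kernel.handle_tlb_miss(miss.vpn)
        return hardware.translate(vaddr)
```

Because the kernel owns `translations`, it could replace the dict with a hash table, a tree, or anything else without the hardware changing; the `traps` counter shows where the extra overhead comes from.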

3 Address Translation: the gift that keeps on giving

- Process isolation

- Keep a process from touching anyone else's memory, or the kernel's

- Efficient interprocess communication

- Shared regions of memory between processes

- Shared code segments

- e.g., common libraries used by many different programs

- Program initialization

- Start running a program before it is entirely in memory

- Dynamic memory allocation

- Allocate and initialize stack/heap pages on demand

- Cache management

- Page coloring

- Program debugging

- Data breakpoints when address is accessed

- Zero-copy I/O

- Directly from I/O device into/out of user memory

- Memory mapped files

- Access file data using load/store instructions

- Demand-paged virtual memory

- Illusion of near-infinite memory, backed by disk or memory on other machines

- Checkpointing/restart

- Transparently save a copy of a process, without stopping the program while the save happens

- Process migration

- Transparently move processes between machines

- Information flow control

- Track what data is being shared externally

- Distributed shared memory

- Illusion of memory that is shared between machines
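
As one concrete instance from the list above, memory-mapped files are available from user code through Python's standard `mmap` module. In the sketch below the function name and its behavior (uppercasing a prefix of the file) are made up for illustration; slice reads and writes on the mapping take the place of explicit `read` and `write` calls.

```python
import mmap


def uppercase_prefix(path, count):
    """Uppercase the first `count` bytes of a non-empty file through a mapping."""
    with open(path, "r+b") as f:
        # Length 0 maps the whole file into this process's address space.
        with mmap.mmap(f.fileno(), 0) as mapped:
            # Slice assignment stores into the mapped pages, not into a copy.
            mapped[:count] = mapped[:count].upper()
            mapped.flush()  # write modified pages back to the file
```

The mapping must be given a non-empty file, and the slice assigned must have the same length as the slice it replaces.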

4 Reading: Paging: Faster Translations (TLBs)

Read OSTEP chapter 19 covering TLBs. It provides a good overview with examples and goes into detail on what exactly is stored in a TLB entry.