Brief Introduction to Computer Systems


1 Computer Memory

1.1 Bits & Bytes

- In hardware, data is represented as binary bits (1 or 0)

- A bit is the fundamental unit of data storage

- These are grouped into 8-bit chunks called bytes

- Naturally, 4-bit chunks (half a byte) are called nybbles

- Memory can be viewed as a big array of bytes

- Each byte has a unique numerical index (its memory address)

- A pointer is just a memory address referring to the first byte of some quantity stored in memory

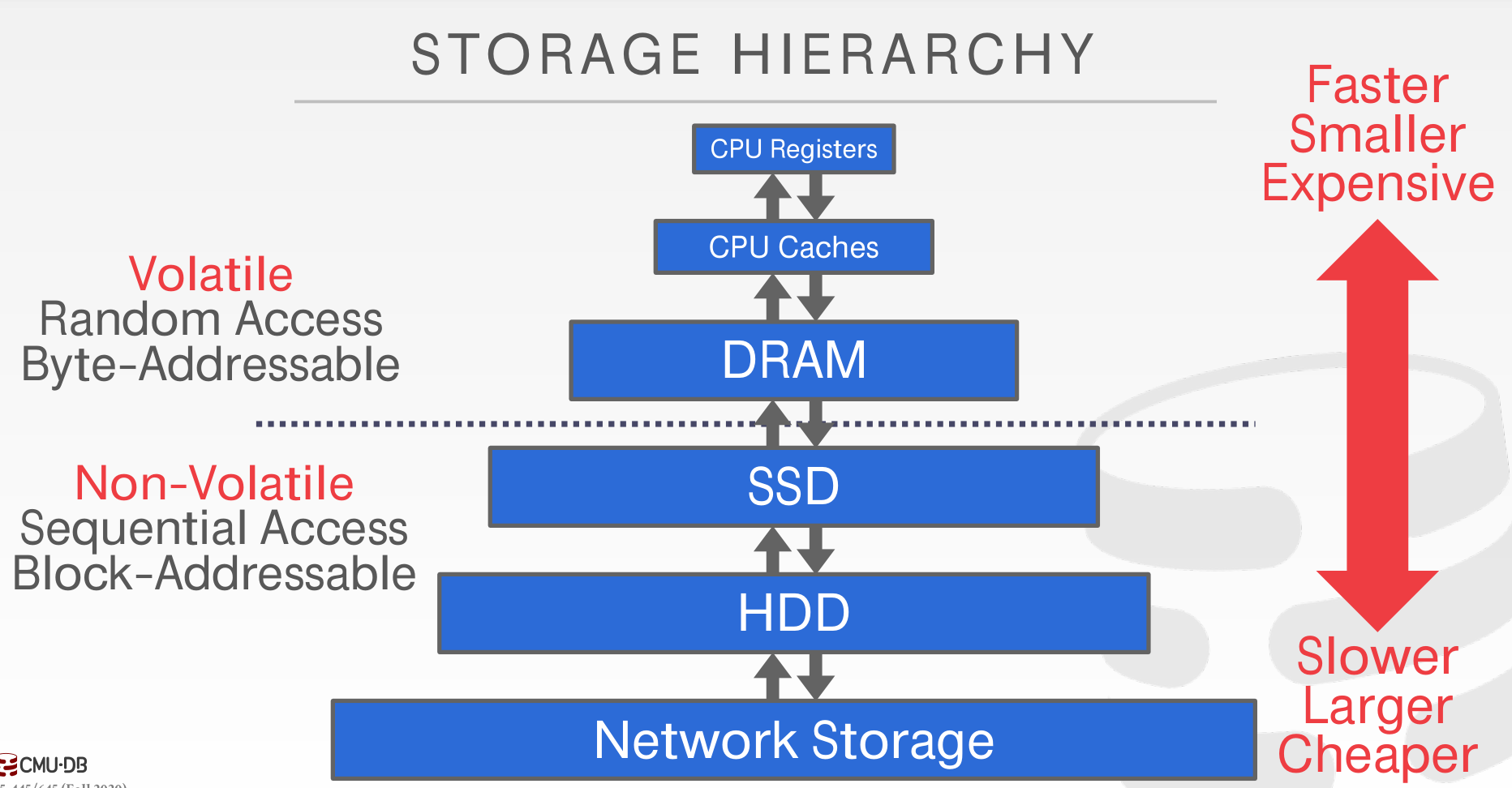
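The byte-level view above can be explored directly in C++. The sketch below splits a byte into its two nybbles and walks the bytes of a 32-bit integer through an `unsigned char` pointer that starts at the integer's first byte. The function names `high_nybble`, `low_nybble`, and `bytes_of` are hypothetical. The byte order it reports depends on the machine's endianness, so treat the exact order as platform-specific.

```cpp
#include <cstddef>
#include <cstdint>

// Split one byte (8 bits) into its high and low nybbles (4 bits each).
std::uint8_t high_nybble(std::uint8_t byte) { return static_cast<std::uint8_t>(byte >> 4); }
std::uint8_t low_nybble(std::uint8_t byte) { return static_cast<std::uint8_t>(byte & 0x0F); }

// Copy the bytes of `value` into `out` in the order they are laid out in memory.
// `first` points at the lowest-addressed byte of `value`; byte i lives at address first + i.
// On a little-endian machine out[0] is the least significant byte; on big-endian, the most.
void bytes_of(std::uint32_t value, unsigned char out[sizeof(std::uint32_t)]) {
    const unsigned char* first = reinterpret_cast<const unsigned char*>(&value);
    for (std::size_t i = 0; i < sizeof(value); ++i) {
        out[i] = first[i];
    }
}
```

For example, `high_nybble(0xAB)` is `0xA` and `low_nybble(0xAB)` is `0xB`.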

1.2 Storage Hierarchy

| Operation | Latency |
|---|---|
| Cache reference | 1-7 ns |
| Main memory reference | 100 ns |
| Compress 1K bytes with Zippy | 3,000 ns = 3 µs |
| Send 2K bytes over 1 Gbps network | 20,000 ns = 20 µs |
| SSD random read | 150,000 ns = 150 µs |
| Read 1 MB sequentially from memory | 250,000 ns = 250 µs |
| Round trip within same datacenter | 500,000 ns = 0.5 ms |
| Read 1 MB sequentially from SSD* | 1,000,000 ns = 1 ms |
| HDD seek | 10,000,000 ns = 10 ms |
| Read 1 MB sequentially from HDD | 20,000,000 ns = 20 ms |
| Send packet CA->Netherlands->CA | 150,000,000 ns = 150 ms |

*Assuming ~1 GB/sec SSD

Multiply these times by 1 billion to put them in human terms

| Scale | Operation | Scaled time | Human analogy |
|---|---|---|---|
| Minute | L1 cache reference | 0.5 s | One heart beat (0.5 s) |
| | Branch mispredict | 5 s | Yawn |
| | L2 cache reference | 7 s | Long yawn |
| | Mutex lock/unlock | 25 s | Making a coffee |
| Hour | Main memory reference | 100 s | Brushing your teeth |
| | Compress 1K bytes with Zippy | 50 min | One episode of a TV show (including ad breaks) |
| Day | Send 2K bytes over 1 Gbps network | 5.5 hr | From lunch to end of work day |
| Week | SSD random read | 1.7 days | A normal weekend |
| | Read 1 MB sequentially from memory | 2.9 days | A long weekend |
| | Round trip within same datacenter | 5.8 days | A medium vacation |
| | Read 1 MB sequentially from SSD | 11.6 days | Waiting for almost 2 weeks for a delivery |
| Year | Disk seek | 16.5 weeks | A semester in university |
| | Read 1 MB sequentially from disk | 7.8 months | Almost producing a new human being |
| | The above 2 together | 1 year | |
| Decade | Send packet CA->Netherlands->CA | 4.8 years | Average time it takes to complete a bachelor's degree |
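As a worked instance of the scaling rule, here is the HDD seek from the first table (10 ms) multiplied by one billion:

$$
10\ \text{ms} \times 10^{9} = 10^{-2}\ \text{s} \times 10^{9} = 10^{7}\ \text{s} \approx \frac{10^{7}}{86\,400}\ \text{days} \approx 115.7\ \text{days} \approx 16.5\ \text{weeks}
$$

This matches the 16.5-week figure given above for a disk seek.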

The takeaways from this are:

- Reading/writing to disk is expensive, so it must be managed carefully to avoid large stalls and performance degradation.

- Random access on disk is usually much slower than sequential access, so the database management system (DBMS) will want to maximize sequential access.
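The following back-of-the-envelope sketch shows why sequential access matters so much. It uses only the HDD numbers from the table above: 10 ms per seek and 20 ms to stream 1 MB. The model is deliberately simplified. Every scattered page is assumed to cost one full seek plus its share of transfer time, pages are assumed to be 4 KB, and 1 MB is taken as 1024 KB. The constant and function names are hypothetical.

```cpp
// HDD latency figures from the table, in nanoseconds.
constexpr double kHddSeekNs = 10'000'000.0;      // one seek: 10 ms
constexpr double kHddSeqNsPerMB = 20'000'000.0;  // stream 1 MB sequentially: 20 ms

// Data laid out contiguously: one seek, then stream all of it.
double sequential_read_ns(double mb) {
    return kHddSeekNs + mb * kHddSeqNsPerMB;
}

// The same data scattered as `pages` separate pages of `page_kb` KB each:
// in this simplified model every page pays a full seek plus its transfer time.
double random_read_ns(int pages, double page_kb) {
    const double mb_per_page = page_kb / 1024.0;  // assumes 1 MB = 1024 KB
    return pages * (kHddSeekNs + mb_per_page * kHddSeqNsPerMB);
}
```

Under these assumptions, reading 1 MB sequentially takes about 30 ms. Reading the same 1 MB as 256 scattered 4 KB pages takes about 2.58 s, which is roughly 86 times slower.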

1.3 Caching

- Within the memory hierarchy, the idea of a cache is to store part or all of the data a program is currently using in a place with faster access.

- Caches generally operate on the principle of locality: when a particular piece of data is accessed, it's likely that same data (or data stored nearby) will be accessed again soon.

- Thus, by caching a chunk of data in a place with faster access (i.e., copying it from a lower level in the hierarchy to a higher level), we speed up future operations

- Faster storage is more expensive, however, so systems typically won't have enough to store all the data for a given program

- When new data is brought into a full cache, the system must select some existing piece of data to remove or evict to make room

- There are many different algorithms for deciding what data to evict; a common, simple approach is to evict the least-recently-used (LRU) data

- This is called an LRU policy
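An LRU policy is commonly implemented with two structures: a doubly linked list ordered by recency, and a hash map from each key to its list node. Together they make lookups and evictions take constant time. The sketch below is a minimal version of that idea with `int` keys and values. The class name and interface are illustrative and not taken from any particular system. It needs C++17 for `std::optional`.

```cpp
#include <cstddef>
#include <list>
#include <optional>
#include <unordered_map>
#include <utility>

// Minimal LRU cache sketch: int keys -> int values, fixed capacity.
class LRUCache {
public:
    explicit LRUCache(std::size_t capacity) : capacity_(capacity) {}

    // Look up a key; on a hit, move it to the front (most recently used).
    std::optional<int> get(int key) {
        auto it = index_.find(key);
        if (it == index_.end()) return std::nullopt;  // cache miss
        order_.splice(order_.begin(), order_, it->second);
        return it->second->second;
    }

    // Insert or update a key; if the cache is full, evict the LRU entry first.
    void put(int key, int value) {
        auto it = index_.find(key);
        if (it != index_.end()) {
            it->second->second = value;
            order_.splice(order_.begin(), order_, it->second);
            return;
        }
        if (capacity_ == 0) return;
        if (order_.size() == capacity_) {
            // The back of the list is the least recently used entry.
            index_.erase(order_.back().first);
            order_.pop_back();
        }
        order_.emplace_front(key, value);
        index_[key] = order_.begin();
    }

private:
    std::size_t capacity_;
    // Front = most recently used, back = least recently used.
    std::list<std::pair<int, int>> order_;
    std::unordered_map<int, std::list<std::pair<int, int>>::iterator> index_;
};
```

Consider a cache with capacity 2. Insert keys 1 and 2, read key 1, then insert key 3. The insert of key 3 evicts key 2, because key 1 was used more recently.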

2 Role of the Operating System

- The operating system (OS) acts as an intermediary between programs and the underlying hardware

- Windows, macOS, and Linux are examples of operating systems

- The OS also coordinates access to shared resources such as memory

- The OS does not trust user applications to always behave correctly and non-maliciously, so it does not let them access hardware or shared resources directly

- Instead, the OS provides an interface (a.k.a. an API) that programs use to ask the OS to perform operations/provide resources

- These OS functions are called system calls

- Programming language libraries typically implement functions/classes on top of the system calls
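The sketch below illustrates that layering on a POSIX system such as Linux or macOS by printing text in two ways. The first calls `write()` from `<unistd.h>`, a thin wrapper around the `write` system call. The second uses C's `fputs`, a library function that buffers output in user space and, on such systems, eventually hands it to the OS through that same system call. The two function names are hypothetical. This code assumes a POSIX environment and will not compile on Windows.

```cpp
#include <cstdio>
#include <cstring>
#include <unistd.h>  // POSIX: write(), STDOUT_FILENO

// Ask the OS directly, via the write() system call wrapper.
// Returns the number of bytes the OS reports as written, or -1 on error.
long print_with_syscall(const char* msg) {
    return static_cast<long>(write(STDOUT_FILENO, msg, std::strlen(msg)));
}

// Go through the C library instead: fputs buffers the text in user space,
// and fflush forces the library to pass the buffered bytes to the OS.
// Returns a non-negative value on success, EOF on error.
int print_with_library(const char* msg) {
    int result = std::fputs(msg, stdout);
    std::fflush(stdout);
    return result;
}
```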

2.1 Memory Management

- There is a similar relationship when it comes to allocating memory

- The OS manages the system's memory, controlling which programs can use which chunks of memory

- User applications that need to dynamically allocate memory (like creating a data structure for a matrix whose size is not known at compile-time) have to ask the OS

- In C++ we use the `new` operator to allocate memory for a new object/array/etc., which underneath typically calls C's `malloc` function, which itself uses the `sbrk` system call (or `mmap`, for large allocations) on Linux

- We tell the system we are done with that memory (i.e., it can be reused for other things) with the `delete` operator (`delete[]` for arrays), which typically calls C's `free` function

- Something that database systems often need to do is load the contents of a file on disk into memory

- Since there's often more data in the database than fits in memory at one time, the DBMS moves chunks of data to and from disk as needed

- These fixed-size chunks are called pages

- For project 1 you will implement the component of the database that manages this
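The dynamic allocation and page bullets above fit together in a short sketch. It obtains a buffer for one page with `new[]`, fills it from the byte offset where that page lives in a file, and releases it with `delete[]` on failure. The sketch assumes a 4 KB page size and that page *i* starts at byte *i* × 4096. The names `kPageSize` and `load_page` are hypothetical, and this is not the interface project 1 asks for.

```cpp
#include <cstddef>
#include <fstream>
#include <string>

constexpr std::size_t kPageSize = 4096;  // assumed page size in bytes

// Read page `page_id` of the file at `path` into a buffer allocated with new[].
// Assumes page i starts at byte offset i * kPageSize.
// Returns nullptr if the file can't be opened or the page is incomplete.
// On success the caller owns the buffer and must release it with delete[].
char* load_page(const std::string& path, std::size_t page_id) {
    std::ifstream file(path, std::ios::binary);
    if (!file) return nullptr;

    char* buffer = new char[kPageSize];  // dynamic allocation for one page
    file.seekg(static_cast<std::streamoff>(page_id * kPageSize));
    file.read(buffer, static_cast<std::streamsize>(kPageSize));

    if (file.gcount() != static_cast<std::streamsize>(kPageSize)) {
        delete[] buffer;  // give the memory back before reporting failure
        return nullptr;
    }
    return buffer;
}
```

The raw owning pointer keeps the `new[]`/`delete[]` pairing visible. In everyday C++, a `std::vector<char>` or `std::unique_ptr<char[]>` would release the memory automatically.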

3 Concurrency

3.1 Threads

- a thread is a single execution sequence that represents the minimal unit of scheduling

- four reasons to use threads:
  - program structure: expressing logically concurrent tasks
    - each thing a process is doing gets its own thread (e.g., drawing the UI, handling user input, fetching network data)
  - responsiveness: shifting work to run in the background
    - we want an application to continue responding to input in the middle of an operation
    - when saving a file, write contents to a buffer and return control, leaving a background thread to actually write it to disk
  - performance: exploiting multiple processors (also called cores or CPUs)
  - performance: managing I/O devices (while one thread waits on a slow device, others can keep working)

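For the "exploiting multiple processors" case, a common pattern is to split work into independent chunks and run each chunk on its own `std::thread`. In the sketch below, each thread writes only its own slot of `partial`, so no two threads write the same memory and no locking is needed. The main thread `join()`s every worker before combining the partial sums. The function name `parallel_sum` is hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Sum `values` using `num_threads` threads, one contiguous chunk per thread.
long long parallel_sum(const std::vector<int>& values, std::size_t num_threads) {
    if (num_threads == 0) num_threads = 1;
    std::vector<long long> partial(num_threads, 0);  // one slot per thread
    std::vector<std::thread> workers;
    const std::size_t chunk = (values.size() + num_threads - 1) / num_threads;

    for (std::size_t t = 0; t < num_threads; ++t) {
        workers.emplace_back([&values, &partial, t, chunk] {
            const std::size_t begin = t * chunk;
            const std::size_t end = std::min(values.size(), begin + chunk);
            for (std::size_t i = begin; i < end; ++i) partial[t] += values[i];
        });
    }
    for (auto& w : workers) w.join();  // wait for every thread to finish
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

On Linux with GCC or Clang, building code that uses `std::thread` may require the `-pthread` flag.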

3.2 What's the Problem?

- a multi-threaded program's execution depends on how instructions are interleaved

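- threads sharing data may be affected by race conditions, where the outcome depends on the timing of the threads' operations (a data race is the specific case where threads access the same memory without synchronization and at least one access is a write)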

| Thread A | Thread B |
|---|---|
| Subtract $200 from my bank account | Add $100 to my bank account |

- Say my bank account has $500. What are the final possible balances? If everything works, it should be $400.

- But these account operations actually consist of several steps, and this makes the code vulnerable

| Thread A | Thread B |
|---|---|
| Read current balance | Read current balance |
| Subtract 200 | Add 100 |
| Update the balance | Update the balance |

- The vulnerability comes from the fact that these steps can be interleaved in ways that produce the wrong balance

| Interleaving 1 | Interleaving 2 | Interleaving 3 |
|---|---|---|
| A: Read current balance | A: Read current balance | A: Read current balance |
| A: Subtract 200 | B: Read current balance | B: Read current balance |
| A: Update the balance | A: Subtract 200 | A: Subtract 200 |
| B: Read current balance | B: Add 100 | B: Add 100 |
| B: Add 100 | A: Update the balance | B: Update the balance |
| B: Update the balance | B: Update the balance | A: Update the balance |

- interleaving 1 results in a balance of $400

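- interleaving 2 results in a balance of $600, because B writes its stale $500 + $100 over A's update (yay, bank error in my favor!)

- interleaving 3 results in a balance of $300, because A writes its stale $500 - $200 over B's update (I am sad)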
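The three interleavings can be replayed deterministically without real threads. The sketch below gives each thread a private copy of the balance it read, as a CPU register would hold it. It then applies the steps in exactly the order a schedule lists them. The names `Op`, `Step`, and `run_schedule` are hypothetical. A simulation is used instead of real threads because unsynchronized threads updating a plain `int` would be a data race, which C++ treats as undefined behavior.

```cpp
#include <vector>

// One step of one thread: which thread ('A' or 'B') and what it does.
enum class Op { Read, Compute, Update };
struct Step {
    char thread;
    Op op;
};

// Replay `schedule` against a shared balance, one step at a time.
// Thread A withdraws 200 and thread B deposits 100; each keeps a private
// copy of the balance it read until it writes the result back.
int run_schedule(int balance, const std::vector<Step>& schedule) {
    int local_a = 0;
    int local_b = 0;
    for (const Step& s : schedule) {
        int& local = (s.thread == 'A') ? local_a : local_b;
        const int delta = (s.thread == 'A') ? -200 : 100;
        switch (s.op) {
            case Op::Read:    local = balance; break;  // read current balance
            case Op::Compute: local += delta;  break;  // subtract 200 / add 100
            case Op::Update:  balance = local; break;  // update the balance
        }
    }
    return balance;
}
```

Starting from a balance of 500, the three columns of the interleaving table produce 400, 600, and 300 respectively.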

4 Synchronization

- prevent bad interleavings of read/write operations by forcing threads to coordinate their accesses

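- identify the critical section of code, where only one thread should operate at a time, and protect it with a lock

- we need hardware support to make checking and acquiring the lock an atomic operation (a single indivisible step, so no other thread can act on a half-finished check-and-acquire)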

- fortunately, C++ provides `std::mutex`
  - when a thread calls the `lock()` method, it either
    - acquires the mutex and locks it (preventing other threads from acquiring it)
    - or waits until it becomes unlocked
  - a thread calls the `unlock()` method when it's done with the critical section

With a mutex object shared between our two threads, we can do:

| Thread A | Thread B |
|---|---|
| Acquire lock | Acquire lock |
| Read current balance | Read current balance |
| Subtract 200 | Add 100 |
| Update the balance | Update the balance |
| Release lock | Release lock |


This prevents the bad interleavings shown above by forcing all of thread A's critical section to happen before thread B's, or vice versa
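A minimal C++ version of the locked protocol might look like the following. It uses `std::lock_guard`, which calls the mutex's `lock()` in its constructor and `unlock()` in its destructor, so the lock is released even if the critical section exits early. The `Account` struct and `run_transactions` function are hypothetical names.

```cpp
#include <mutex>
#include <thread>

// A shared bank balance protected by a mutex.
struct Account {
    int balance = 0;
    std::mutex m;

    // Critical section: read, modify, and write back while holding the lock.
    void add(int amount) {
        std::lock_guard<std::mutex> guard(m);  // lock() now, unlock() at end of scope
        int current = balance;  // read current balance
        current += amount;      // subtract 200 / add 100
        balance = current;      // update the balance
    }
};

// Run the two transactions from the example on separate threads.
int run_transactions(int starting_balance) {
    Account account;
    account.balance = starting_balance;
    std::thread a([&account] { account.add(-200); });  // thread A
    std::thread b([&account] { account.add(100); });   // thread B
    a.join();
    b.join();
    return account.balance;
}
```

Whichever thread acquires the lock first, a starting balance of $500 ends at $400.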