Lab 2021-04-16: Concurrency

1 Project 1 questions

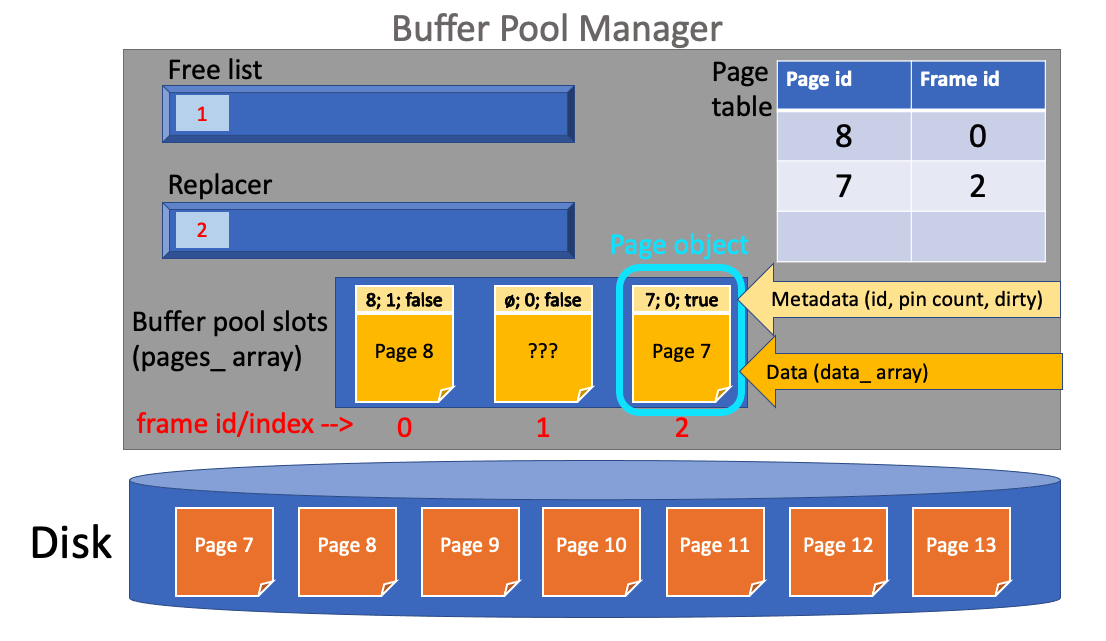

Visual example

2 Concurrency

2.1 Threads

- C++ provides `std::thread` as a way of creating a new thread that will run in parallel

- A new thread requires (a) a program to run and (b) any inputs that program requires

  - That is, we'll provide a function to execute and any arguments to that function

#include <iostream>  // std::cout
#include <thread>    // std::thread

void hello() { std::cout << "hello\n"; }
void goodbye() { std::cout << "goodbye\n"; }

int main() {
  std::thread t1(hello);
  std::thread t2(goodbye);
  // Deliberately broken: t1 and t2 are never joined
  return 0;
}

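- Most of the time nothing gets printed by the above program

  - We reach the end of `main` before the threads ever get a chance to run

  - More precisely, `t1` and `t2` are destroyed at the end of `main` while they are still joinable, and destroying a joinable `std::thread` calls `std::terminate`, so the program aborts instead of exiting normally

- We use `.join()` to wait for a thread to finish (joining it back together with the original thread)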

#include <iostream>  // std::cout
#include <thread>    // std::thread

void hello() { std::cout << "hello\n"; }
void goodbye() { std::cout << "goodbye\n"; }

int main() {
  std::thread t1(hello);
  std::thread t2(goodbye);
  t1.join();  // wait for t1 to finish
  t2.join();  // wait for t2 to finish
  return 0;
}

- Note that hello and goodbye may be printed in either order

- The operating system controls the scheduling of threads (i.e., when they run and for how long)

- Thus, our code can't make any assumptions about one thread getting to run before another without some kind of explicit coordination

- Also crucial to keep in mind: the system can switch from one thread to another at any point

- And when threads compete for access to a shared resource (like printing to the terminal) which one "wins" may be unpredictable

#include <iostream>  // std::cout
#include <list>      // std::list
#include <thread>    // std::thread

void print_thread_id(int id) {
  // Three separate << calls: output from different threads can interleave
  std::cout << "thread #" << id << "\n";
}

int main() {
  std::list<std::thread> threads;
  for (int i = 0; i < 10; i++) {
    threads.emplace_back(std::thread(print_thread_id, i));
  }
  for (auto &t : threads) {
    t.join();
  }
  return 0;
}

2.2 Using a mutex

- So we need some mechanism to coordinate between threads

- There are many types of coordination we might want

- Today we'll focus on mutual exclusion

- Making it so only one thread can be executing a given region of code at one time

- C++ provides `std::mutex` for this purpose

- A thread can attempt to lock (a.k.a. acquire) a mutex

- If the mutex is free (i.e., not currently held by some other thread), the thread acquires it and proceeds normally

- If the mutex is held, the thread blocks, waiting until the mutex becomes available

- Once it is finished, a thread will unlock (a.k.a. release) the mutex

- The region of code where we only want there to be one thread at a time is called the critical section

- In general, we want the critical section to be as short as possible

- To minimize the amount of time threads spend waiting

#include <iostream>  // std::cout
#include <list>      // std::list
#include <mutex>     // std::mutex
#include <thread>    // std::thread

std::mutex latch_;  // shared by all threads

void print_thread_id(int id) {
  latch_.lock();  // blocks until no other thread holds latch_
  std::cout << "thread #" << id << "\n";  // critical section
  latch_.unlock();
}

int main() {
  std::list<std::thread> threads;
  for (int i = 0; i < 10; i++) {
    threads.emplace_back(std::thread(print_thread_id, i));
  }
  for (auto &t : threads) {
    t.join();
  }
  return 0;
}

2.3 Writing a multi-threaded test case

void ConcurrentSimplePage() {
  // scenario: 10 concurrent SimplePageTests sharing one buffer pool
  const std::string db_name = "test.db";
  const size_t buffer_pool_size = 10;
  auto *disk_manager = new DiskManager(db_name);
  auto *bpm = new BufferPoolManager(buffer_pool_size, disk_manager);

  std::vector<std::thread> threads;
  threads.reserve(10);
  for (int i = 0; i < 10; i++) {
    // SimplePage is defined elsewhere (not shown here)
    threads.emplace_back(std::thread(SimplePage, bpm, i));
  }
  for (auto &th : threads) {
    th.join();
  }

  EXPECT_EQ(10, disk_manager->GetNumWrites());

  // Shut down the disk manager and remove the temporary file we created.
  disk_manager->ShutDown();
  remove("test.db");

  delete bpm;
  delete disk_manager;
}

TEST(BufferPoolManagerTest, ConcurrentSimplePageTest) {
  // Repeat the scenario many times: a buggy interleaving may only occur occasionally
  for (int i = 0; i < 100; i++) {
    ConcurrentSimplePage();
  }
}

2.4 Concurrency bugs

Inconsistent internal data

[==========] Running 1 test from 1 test suite.
[----------] Global test environment set-up.
[----------] 1 test from BufferPoolManagerTest
[ RUN      ] BufferPoolManagerTest.ConcurrentSimplePageTest
/Users/awb/Documents/teaching/cs334/bustub-cs334/test/buffer/buffer_pool_manager_test.cpp:481: Failure
Value of: bpm->UnpinPage(page_id, true)
  Actual: false
Expected: true
/Users/awb/Documents/teaching/cs334/bustub-cs334/test/buffer/buffer_pool_manager_test.cpp:482: Failure
Expected equality of these values:
  0
  page->GetPinCount()
    Which is: 1
/Users/awb/Documents/teaching/cs334/bustub-cs334/test/buffer/buffer_pool_manager_test.cpp:533: Failure
Expected equality of these values:
  10
  disk_manager->GetNumWrites()
    Which is: 9

Segmentation faults

[==========] Running 1 test from 1 test suite.
[----------] Global test environment set-up.
[----------] 1 test from BufferPoolManagerTest
[ RUN      ] BufferPoolManagerTest.ConcurrentSimplePageTest
[1] 94800 segmentation fault ./test/buffer_pool_manager_test

Memory errors

[==========] Running 1 test from 1 test suite.
[----------] Global test environment set-up.
[----------] 1 test from BufferPoolManagerTest
[ RUN      ] BufferPoolManagerTest.ConcurrentSimplePageTest
buffer_pool_manager_test(94839,0x700001a10000) malloc: Double free of object 0x7fe504e040a0
buffer_pool_manager_test(94839,0x700001a10000) malloc: *** set a breakpoint in malloc_error_break to debug
[1] 94839 abort ./test/buffer_pool_manager_test

2.5 Thread-safe made simple

- A simple way to protect an object's internal data is to acquire a latch (i.e., a mutex) at the start of every method

- That way only one thread can be interacting with the object at a time

- This is terrible for parallelism, and so undesirable in real-world software

- For project 1, you are only evaluated on correctness

- In future projects you will be expected to make use of latches efficiently

We can use `std::lock_guard` to hold a mutex for the duration of a function:

#include <iostream>  // std::cout
#include <list>      // std::list
#include <mutex>     // std::mutex, std::lock_guard
#include <thread>    // std::thread

std::mutex latch;

void print_thread_id(int id) {
  std::lock_guard<std::mutex> guard(latch);  // locks latch
  std::cout << "thread #" << id << "\n";
}  // guard goes out of scope here and unlocks latch

int main() {
  std::list<std::thread> threads;
  for (int i = 0; i < 10; i++) {
    threads.emplace_back(std::thread(print_thread_id, i));
  }
  for (auto &t : threads) {
    t.join();
  }
  return 0;
}

- The mutex will be released when the lock guard goes out of scope (i.e., when the function returns)

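- Important note: `std::mutex` does not support a thread acquiring a mutex it already holds. Calling `lock()` on a `std::mutex` that the calling thread already owns is undefined behavior (in practice, often a deadlock). See the documentation for details; this applies when using a lock guard as well.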