CS 111 w20 lecture 24 outline

1 Merge Sort

An example of a divide and conquer approach: recursively split the problem into smaller pieces, solve those, and combine the results

1.1 merge Function

Take two sorted lists, combine them into a single sorted list. Pseudocode:

    Given two sorted lists, left and right, and an empty list, merged
    while elements remain in both left and right
        compare the first element in left to the first element in right
        remove the smaller one from its list and append it to merged
    append any remaining elements from left or right to merged

    def merge(left, right):
        """Assume left and right are already sorted."""
        left_finger = 0
        right_finger = 0
        merged = []
        while left_finger < len(left) and right_finger < len(right):
            # <= takes from left on ties, which keeps equal elements in order
            if left[left_finger] <= right[right_finger]:
                merged.append(left[left_finger])
                left_finger += 1
            else:
                merged.append(right[right_finger])
                right_finger += 1
        # we've gone through all the items from one list;
        # just append everything from the remaining list
        if left_finger == len(left):
            # append the rest of right; equivalent alternatives:
            # for i in range(right_finger, len(right)):
            #     merged.append(right[i])
            # merged.extend(right[right_finger:])
            merged += right[right_finger:]
        else:
            # append the rest of left
            merged += left[left_finger:]
        return merged

1.2 Recursive Sorting

    def merge_sort(arr):
        # note: no base case yet, so this recursion never terminates
        # find an index to split arr in half
        midpoint = len(arr) // 2
        left = merge_sort(arr[:midpoint])
        right = merge_sort(arr[midpoint:])
        return merge(left, right)

1.3 Base Case

    def merge_sort(arr):
        # base case: nothing to sort for a list of 1 or 0 elements
        if len(arr) <= 1:
            return arr
        # find an index to split arr in half
        midpoint = len(arr) // 2
        left = merge_sort(arr[:midpoint])
        right = merge_sort(arr[midpoint:])
        return merge(left, right)

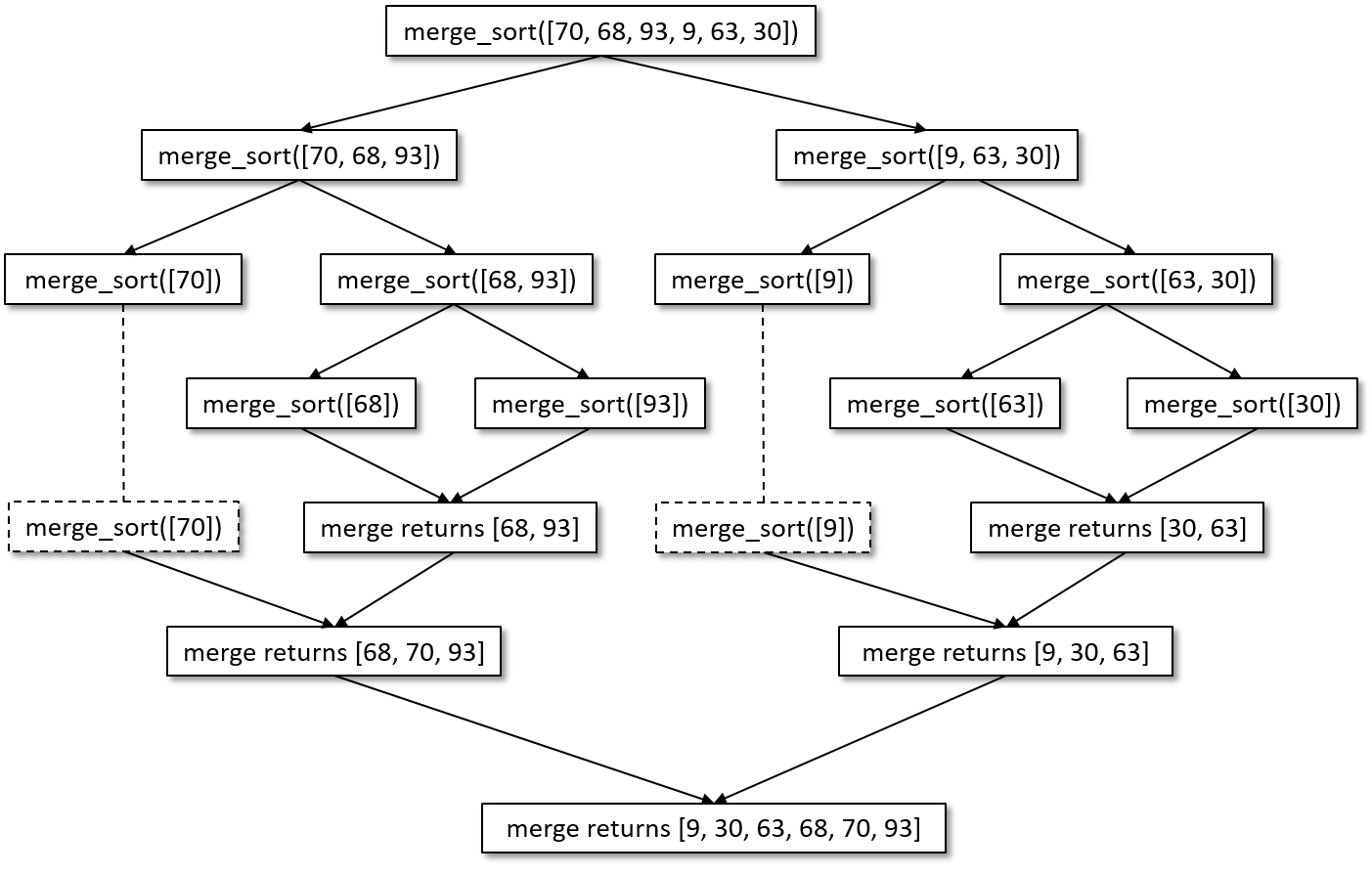

1.4 Diagram
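The recursion can also be traced in text form. The sketch below is an illustration only: `traced_merge_sort` and its `depth` parameter are hypothetical names, and the standard library's `heapq.merge` stands in for the `merge` function from section 1.1 so that the block is self-contained.

```python
from heapq import merge as merge_iter  # stand-in for the merge function in 1.1


def traced_merge_sort(arr, depth=0):
    """Merge sort that prints each call, indented by recursion depth."""
    indent = "  " * depth
    print(f"{indent}sort {arr}")
    # base case: nothing to sort for a list of 1 or 0 elements
    if len(arr) <= 1:
        return arr
    midpoint = len(arr) // 2
    left = traced_merge_sort(arr[:midpoint], depth + 1)
    right = traced_merge_sort(arr[midpoint:], depth + 1)
    merged = list(merge_iter(left, right))
    print(f"{indent}merge {left} + {right} -> {merged}")
    return merged


result = traced_merge_sort([5, 2, 4, 1])
```

Each extra level of indentation corresponds to one level of splitting; the "merge" lines appear as the recursion unwinds.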

1.5 Analysis

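- merge is \(O(n)\), where \(n\) is the total number of elements being merged

- how many levels of merging are there?

- number of times \(n\) can be divided by 2 before we reach the base case: \(O(\log_2 n)\)

- each level merges \(n\) elements in total, so overall, merge sort is \(O(n\log_2 n)\)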

- seems like it should be better than insertion sort's \(O(n^2)\)
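As a quick check on the halving argument, the sketch below counts how many times a list of size n is split before reaching the base case and compares that with \(\lceil \log_2 n \rceil\). The helper name `levels` is hypothetical; it assumes the same split as `merge_sort` above, where the larger half has `n - n // 2` elements.

```python
import math


def levels(n):
    """Count how many times a list of n items is halved before its size is <= 1."""
    count = 0
    while n > 1:
        n = n - n // 2  # size of the larger half, arr[midpoint:]
        count += 1
    return count


for n in [8, 1000, 1_000_000]:
    print(n, levels(n), math.ceil(math.log2(n)))
```

With \(O(n)\) merging work at each of these levels, the total comes to \(O(n\log_2 n)\).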

2 Comparing Algorithms

2.1 Insertion Sort vs Merge Sort

- how do \(O(n^2)\) and \(O(n\log_2 n)\) compare empirically?
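One way to explore this question is to time both sorts on random data of increasing size. The sketch below is only a starting point: the `insertion_sort` shown here is an assumed textbook version (the course's own implementation is not reproduced in this outline), `merge` is a compacted form of the function in section 1.1, and `time_sorts` is a hypothetical helper. Actual timings depend on the machine, so no numbers are given.

```python
import random
import timeit


def insertion_sort(arr):
    """Assumed textbook insertion sort; sorts arr in place."""
    for i in range(1, len(arr)):
        item = arr[i]
        j = i - 1
        while j >= 0 and arr[j] > item:
            arr[j + 1] = arr[j]  # shift larger items one slot right
            j -= 1
        arr[j + 1] = item
    return arr


def merge(left, right):
    """Compact version of merge: combine two sorted lists."""
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # at most one of these two slices is non-empty
    return merged + left[i:] + right[j:]


def merge_sort(arr):
    if len(arr) <= 1:
        return arr
    midpoint = len(arr) // 2
    return merge(merge_sort(arr[:midpoint]), merge_sort(arr[midpoint:]))


def time_sorts(n, repeats=3):
    """Return (insertion sort seconds, merge sort seconds) for n random floats."""
    data = [random.random() for _ in range(n)]
    # copy data each run so insertion sort never sees already sorted input
    t_ins = min(timeit.repeat(lambda: insertion_sort(list(data)), number=1, repeat=repeats))
    t_mrg = min(timeit.repeat(lambda: merge_sort(list(data)), number=1, repeat=repeats))
    return t_ins, t_mrg


for n in [250, 500, 1000, 2000]:
    t_ins, t_mrg = time_sorts(n)
    print(f"n={n:5d}  insertion={t_ins:.4f}s  merge={t_mrg:.4f}s")
```

Doubling n should roughly quadruple the insertion sort time but only a little more than double the merge sort time, if the asymptotic analysis carries over to these sizes.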

2.2 Scenarios

2.2.1 in-place vs not

- can the algorithm sort the original list, or does it necessarily make a copy?

- insertion sort is in-place, the merge sort we implemented is not
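Python's built-ins show the same distinction at the level of the interface: `sorted()` returns a new list and leaves its argument alone, while `list.sort()` rearranges the original list and returns `None`. This is only an analogy for how the two styles look to the caller; it says nothing about how much extra memory Python's sort uses internally. The variable names are illustrative.

```python
nums = [3, 1, 2]

# not in place: a new sorted list is built, nums is untouched
new_list = sorted(nums)
unchanged = (nums == [3, 1, 2])
print(nums, new_list)

# in place: the original list itself is rearranged
nums.sort()
print(nums)
```

The `merge_sort` above behaves like `sorted()`: callers must use its return value, because the list passed in is not modified.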

2.2.2 stability

- equal elements remain in the same relative order before and after sorting

- essential if we want to sort by one attribute and then another

- e.g., sort search results by price and then by relevance (equally relevant items will remain sorted by price)

- both merge sort and insertion sort are stable, selection sort is not
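The two-pass idea can be sketched with Python's built-in `sorted`, which is documented to be stable. The records and the field names (`price`, `rank`, with rank 1 as the most relevant) are made up for illustration.

```python
# (name, price, rank) where rank 1 is the most relevant; made-up data
results = [
    ("A", 30, 1),
    ("B", 10, 2),
    ("C", 20, 1),
    ("D", 5, 2),
]

# first pass: sort by price
by_price = sorted(results, key=lambda r: r[1])

# second pass: sort by relevance; stability keeps ties in price order
by_rank_then_price = sorted(by_price, key=lambda r: r[2])

names = [r[0] for r in by_rank_then_price]
print(names)  # ['C', 'A', 'D', 'B']
```

Within each rank the items are still ordered by price (C before A, D before B), which is exactly what stability guarantees; an unstable sort could legitimately return A before C.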

2.2.3 streaming data

- insertion sort is great for sorting data as it comes in (\(O(n)\) to insert a single element into an already sorted list); with merge sort we have to run the whole sort again
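A minimal sketch of the streaming case, using the standard library's `bisect.insort` to place each arriving value into a list that is kept sorted. This is one insertion sort step per item: the position is found by binary search, but shifting the later elements still makes each insert \(O(n)\). The incoming values are made up.

```python
import bisect

sorted_so_far = []

# pretend these values arrive one at a time
for value in [5, 2, 8, 1, 9, 3]:
    bisect.insort(sorted_so_far, value)  # insert while keeping the list sorted
    print(sorted_so_far)
```

The list is fully sorted after every arrival, without re-sorting what was already there.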

2.2.4 ideal algorithm

The ideal algorithm would have the following properties:

- Stable: Equal keys aren't reordered.

- Operates in place, requiring \(O(1)\) extra space.

- Worst-case \(O(n\log n)\) comparisons.

- Worst-case \(O(n)\) swaps.

- Adaptive: Speeds up to \(O(n)\) when data is nearly sorted or when there are lots of duplicates.

There is no algorithm that has all of these properties, and so the choice of sorting algorithm depends on the application.
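The "adaptive" property can be seen by counting comparisons. The sketch below uses an assumed textbook insertion sort, instrumented with a counter (the function name `insertion_sort_comparisons` is hypothetical), and runs it on already sorted and on reversed input of the same size.

```python
def insertion_sort_comparisons(arr):
    """Sort a copy of arr with insertion sort; return the number of comparisons."""
    arr = list(arr)
    comparisons = 0
    for i in range(1, len(arr)):
        item = arr[i]
        j = i - 1
        while j >= 0:
            comparisons += 1
            if arr[j] <= item:
                break  # item is already in the right place
            arr[j + 1] = arr[j]  # shift the larger element right
            j -= 1
        arr[j + 1] = item
    return comparisons


n = 100
already_sorted = insertion_sort_comparisons(range(n))
reversed_input = insertion_sort_comparisons(range(n - 1, -1, -1))
print(already_sorted, reversed_input)  # 99 4950
```

On sorted input insertion sort makes n - 1 comparisons, which is \(O(n)\); on reversed input it makes n(n - 1)/2, which is \(O(n^2)\). So it is adaptive, but it misses the worst-case \(O(n\log n)\) property from the list above.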