CS 208 s20 — The Memory Hierarchy

Table of Contents

1 Video

Here is a video lecture for the material outlined below. It covers CSPP sections 6.1.1, 6.2, and 6.3 (p. 581–588, 604–614). It contains sections on

- introduction (0:03)

- processor-memory gap (0:38)

- problem: waiting for memory (3:40)

- program's view of memory (7:41)

- locality (9:46)

- memory hierarchy (15:12)

- SRAM vs DRAM (23:32)

- each level is a cache for the one below (29:14)

- basic example of caching (31:10)

- end notes (40:54)

The Panopto viewer has table of contents entries for these sections. Link to the Panopto viewer: https://carleton.hosted.panopto.com/Panopto/Pages/Viewer.aspx?id=f16a308c-7329-4a62-9ae6-abbf0123e1b3

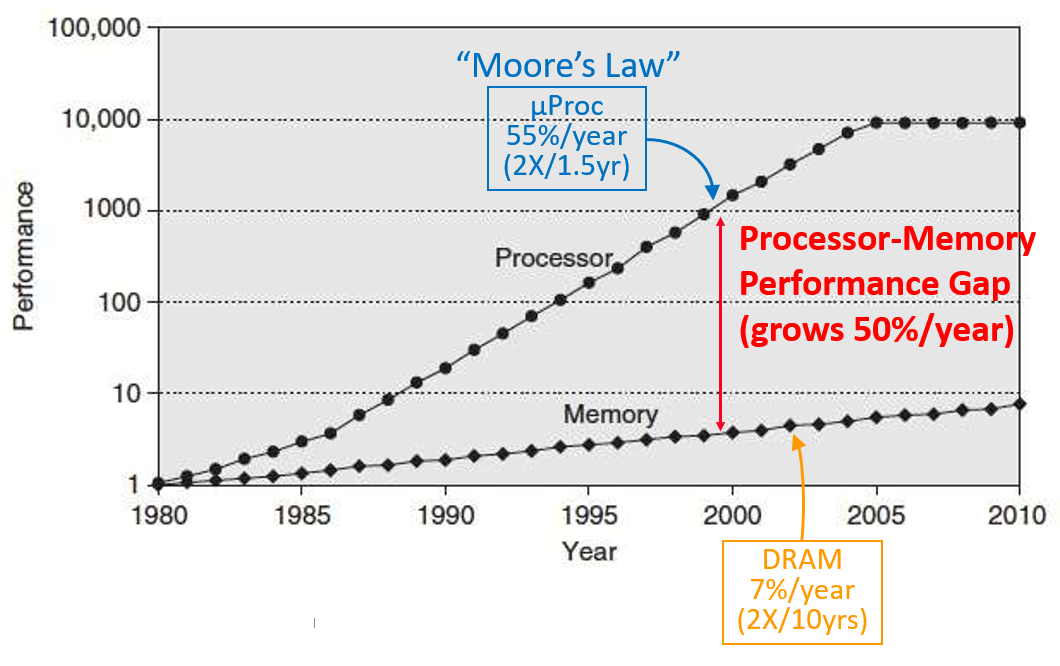

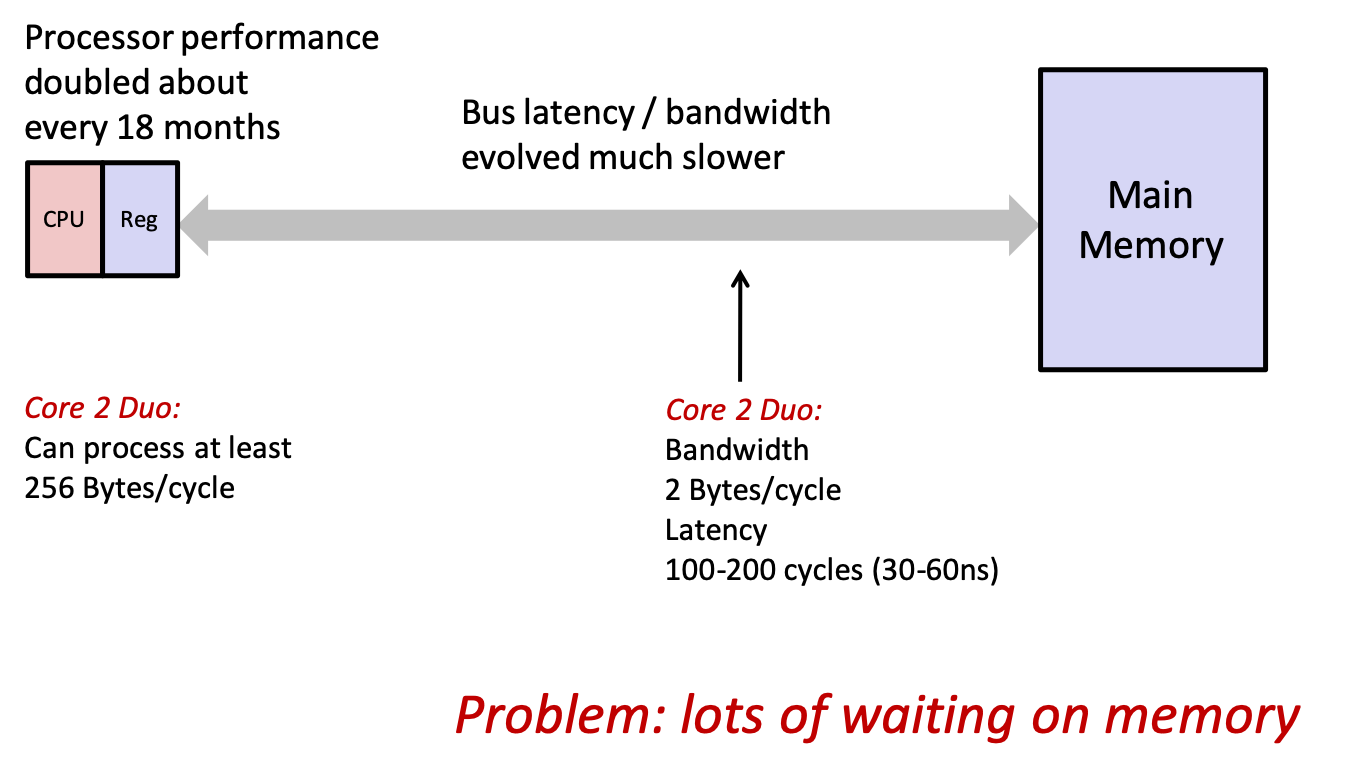

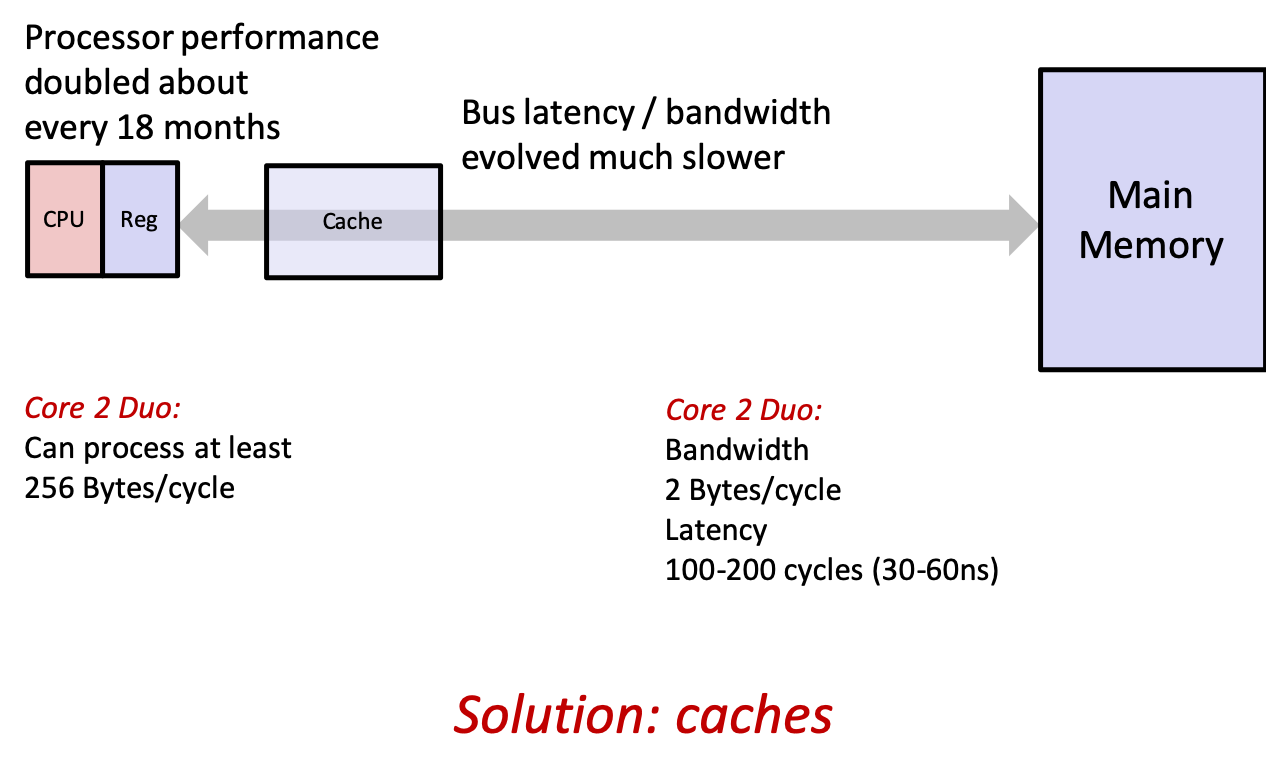

2 CPU-Memory Bottleneck

- processor speed has increased at a much faster rate than memory speed

- this creates an increasing bottleneck when the CPU needs to access memory

- the CPU wastes time waiting around

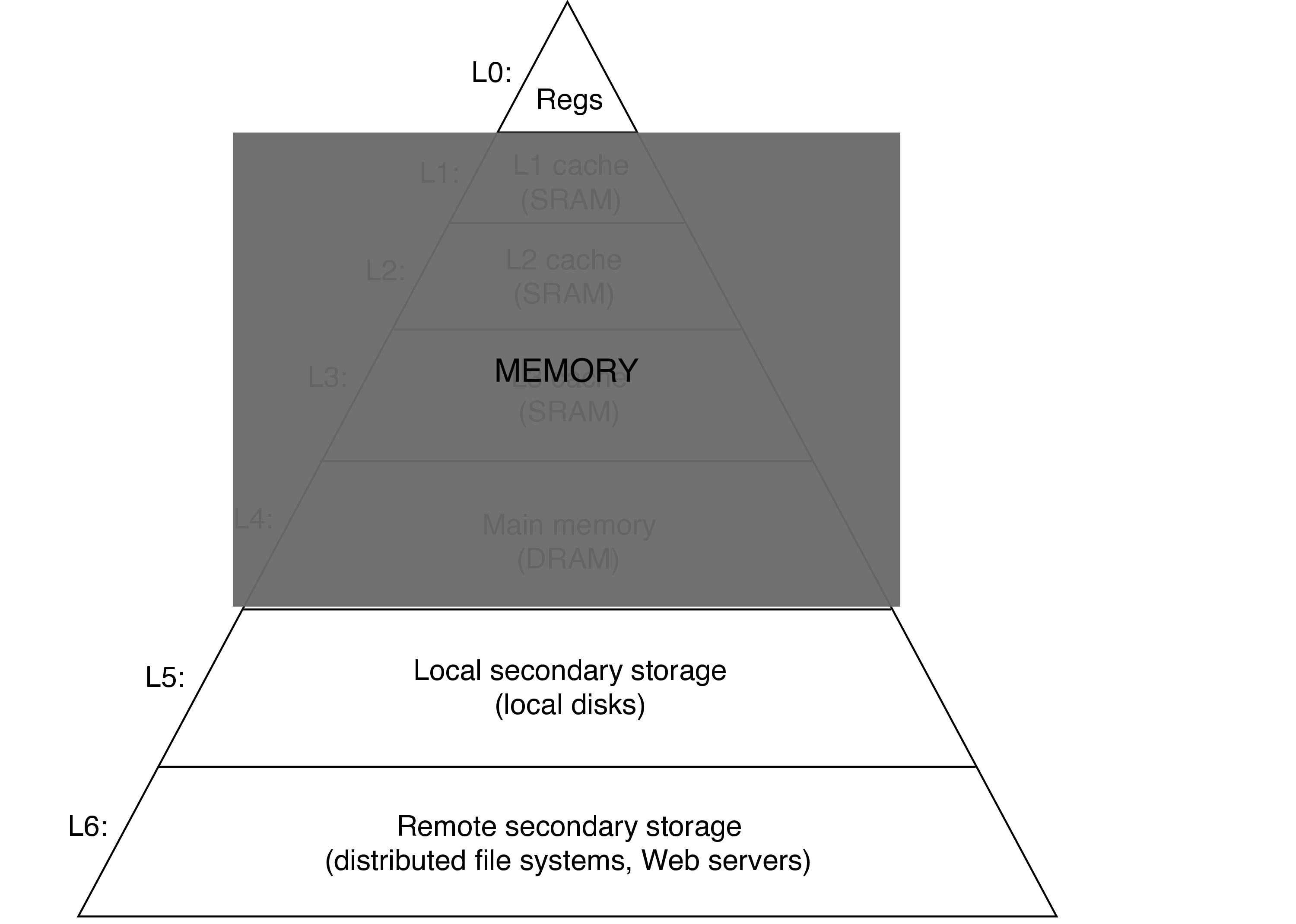

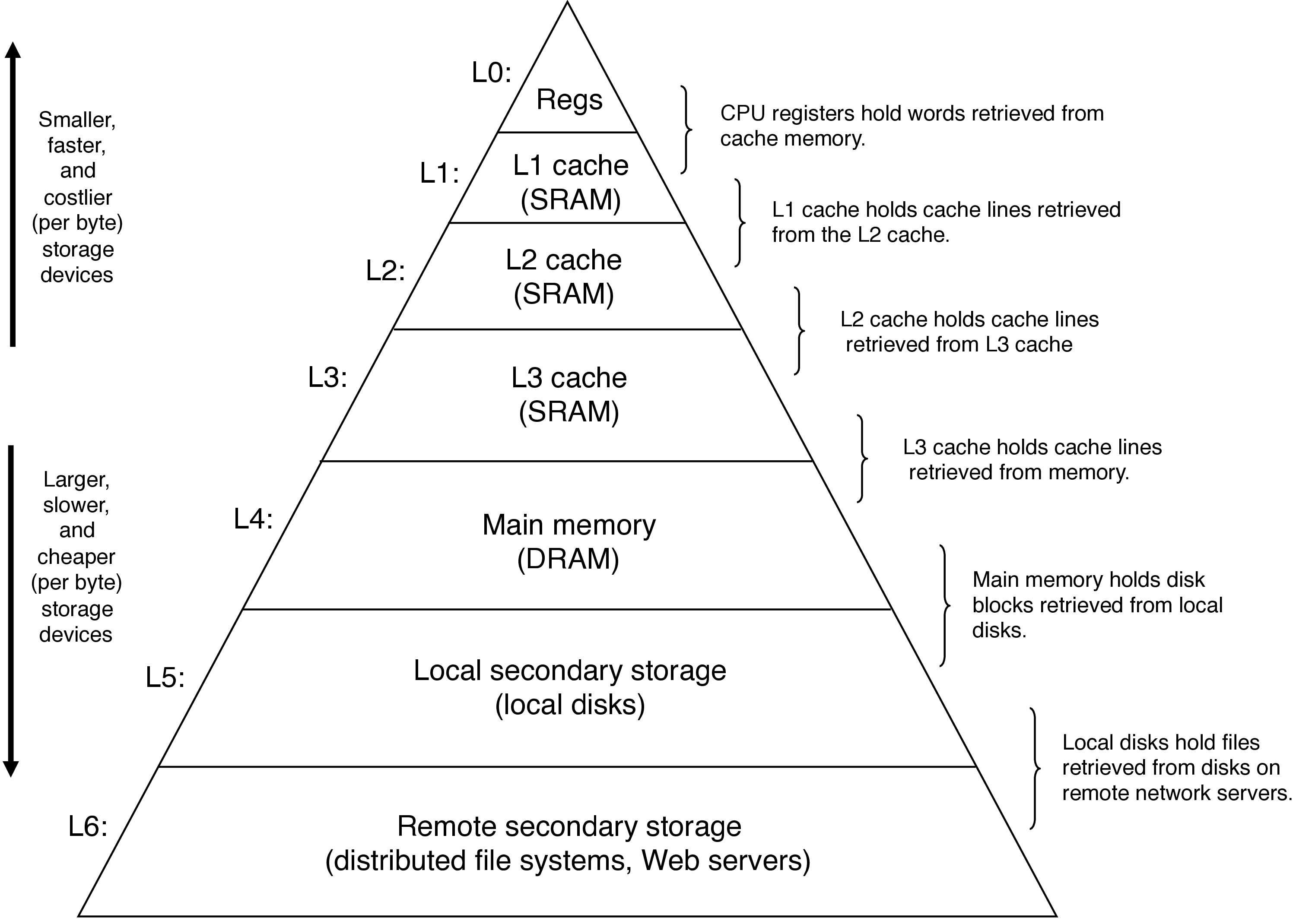

3 Program's View of Memory

- from the program's perspective, there's

- registers

- memory

- disk

- (possibly) remote storage

- however, we will add different levels of memory to make accessing some data much faster

4 Locality

- first, the principle that enables adding new levels of memory to increase performance: locality

- essentially: referencing data that was recently referenced or that is nearby recently referenced data

- if we assume recently referenced data will be referenced again, it's worth it to do some extra work after the first reference to make future references much faster

- temporal locality: a referenced memory location is likely to be referenced again in the near future

- spatial locality: if a memory location is referenced, nearby locations are likely to be referenced in the near future

- in general, programs with good locality run faster, as all levels of the system are designed to exploit locality

- hardware cache memories, OS caching recently referenced chunks of virtual address space and disk blocks, web browsers caching recent documents

5 Caches!

- 0 cycles to access a register (16×8 bytes)

- 4–75 cycles to access a cache (~10 MB)

- 100s to access main memory (GBs)

- 10s of millions to access disk (TBs)

5.1 Aside: SRAM vs DRAM

5.1.1 Static RAM (SRAM)

- bistable memory cell, 6 transitors per bit

- stable in two states, any other state quickly moves into a stable one

- resiliant to disturbance

5.1.2 Dynamic RAM (DRAM)

- single transitor and capacitor per cell, can be very dense

- sensitive to disturbance

- drains on a timescale of 10 to 100 ms, must be refreshed (read and re-written)

- error-correcting codes (extra bits associated with each word) can also be used

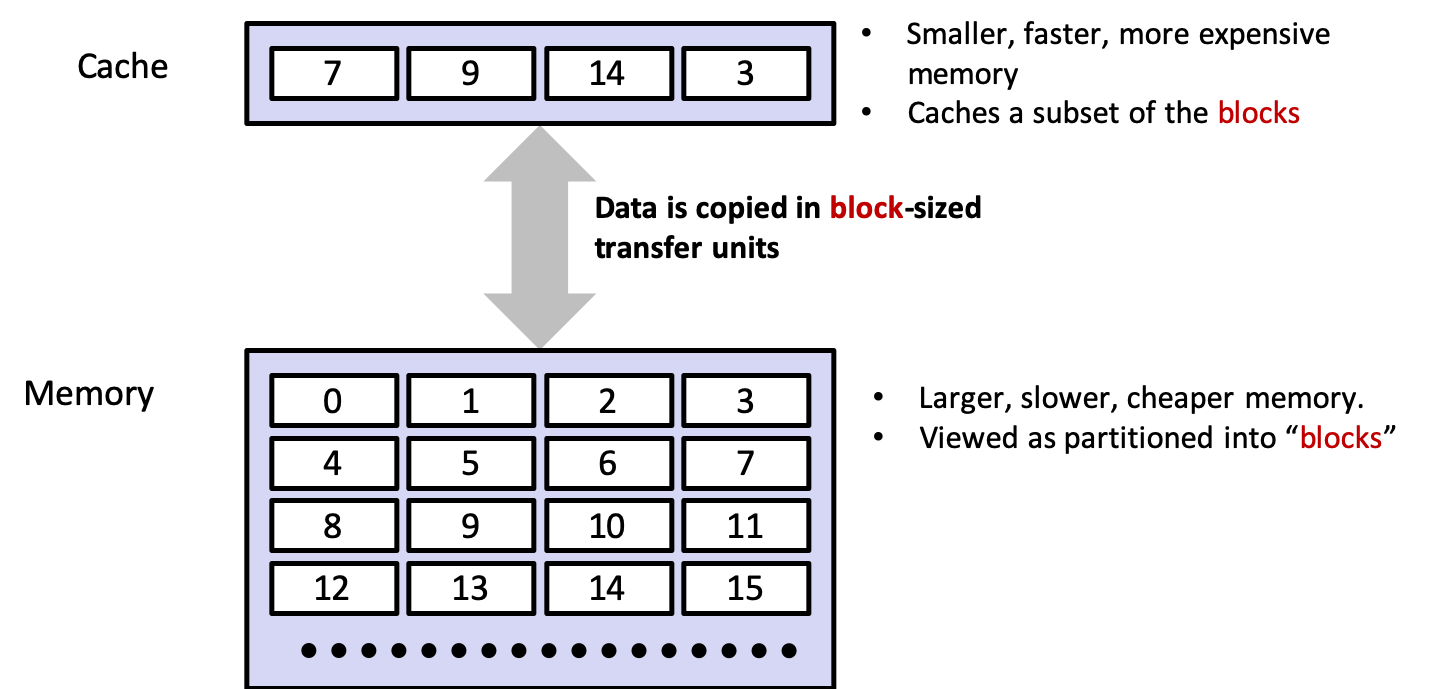

5.2 Basic Idea

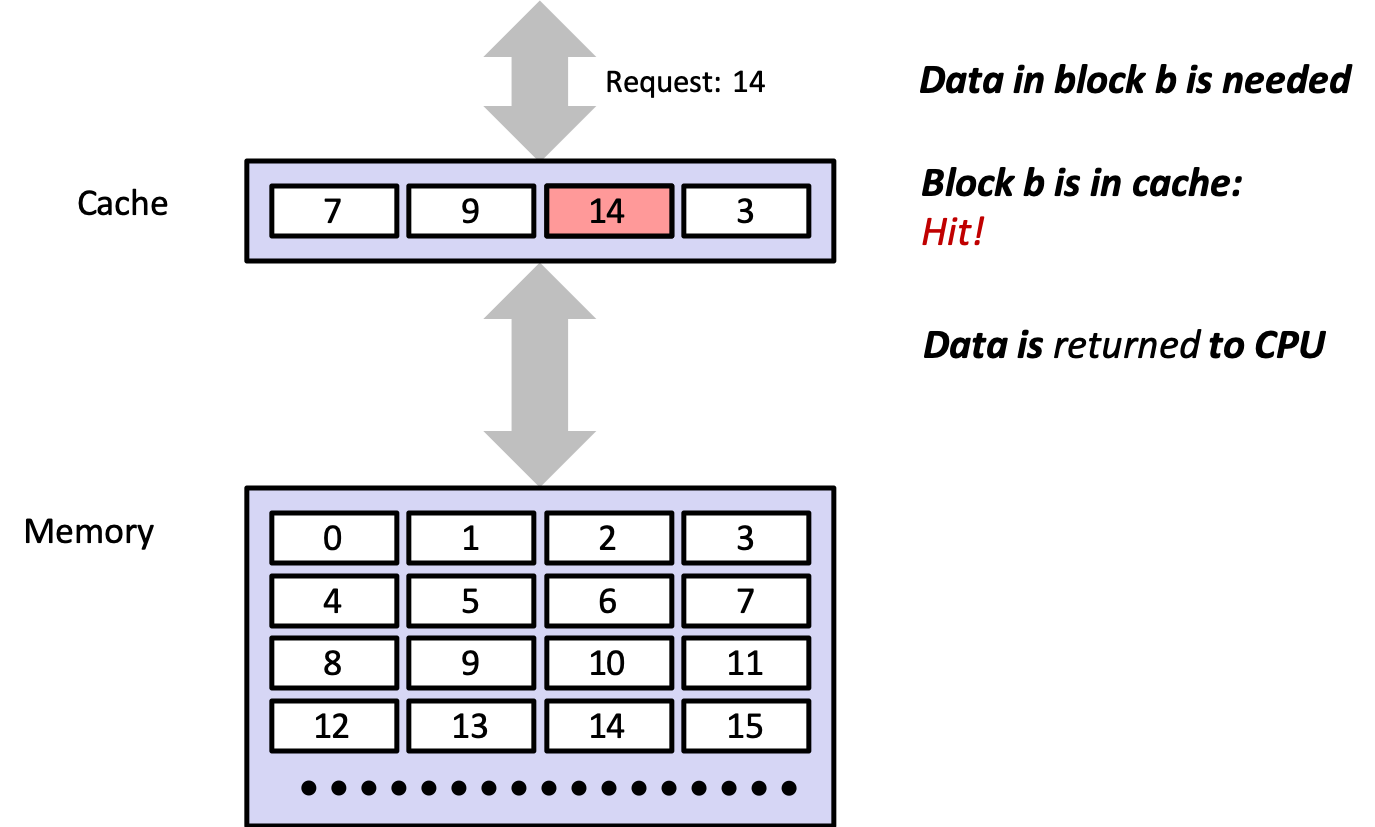

5.2.1 Cache Hit

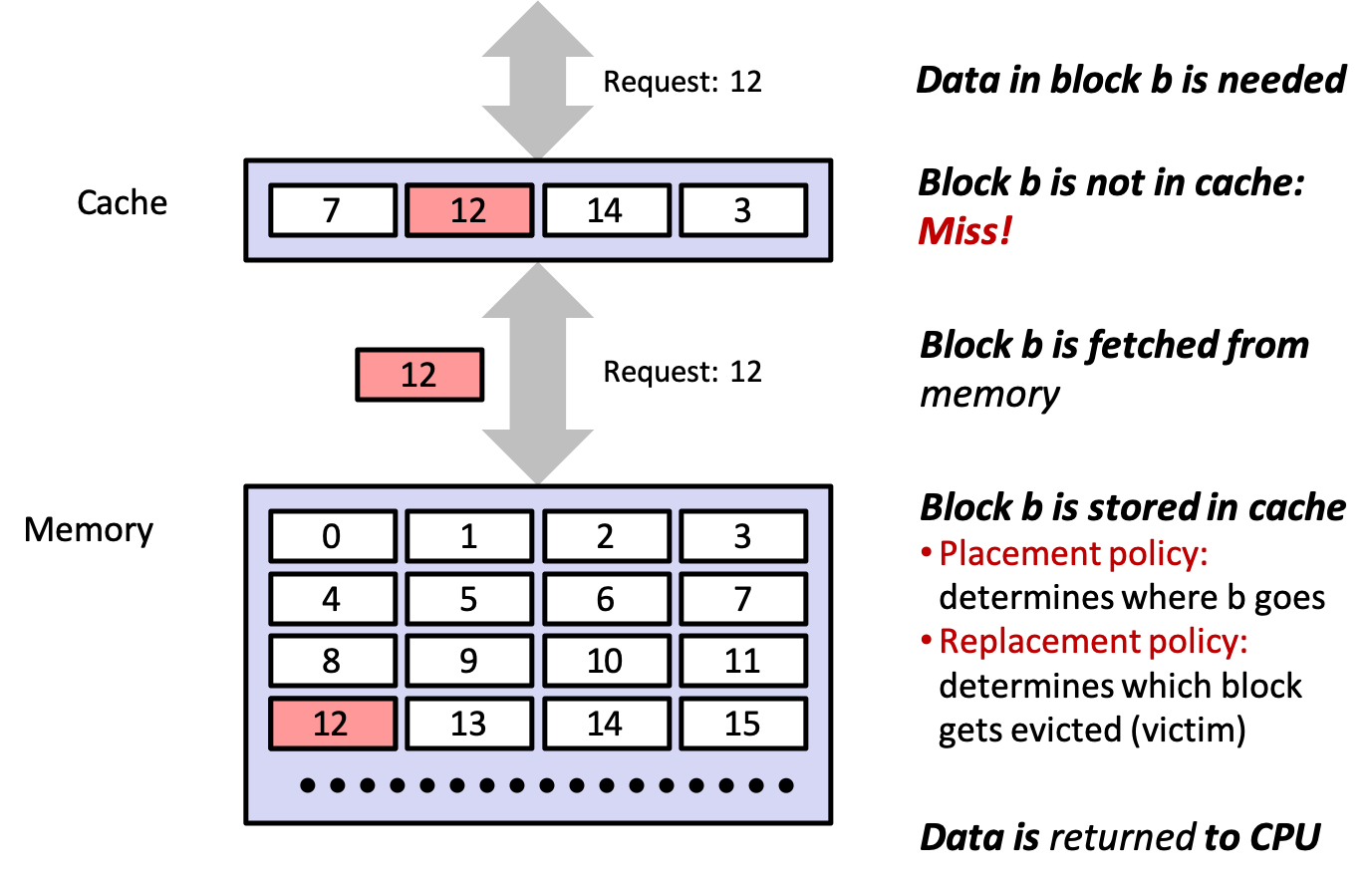

5.2.2 Cache Miss

6 Homework

- CSPP practice problems 6.8 (p. 609)

- The week 6 quiz is due 9pm Wednesday, May 20

- Make a post in the lab 3 check-in forum, due 9pm Monday, May 18