CS 208 s21 — IEEE Floating Point

1 Introduction

- We will not cover all the complexities of the 50-page IEEE floating point standard

- It saved us from the wild west in the early days of computing where every manufacturer was designing their own floating point representation

- These were typically optimized for performance at the cost of accuracy

- What do we want from a floating point standard?

- Scientists/numerical analysts want floating-point numbers to behave as much like real numbers as possible

- Engineers want them to be easy to implement and fast

- Scientists mostly won, as floating-point operations can be several times slower than integer operations

- Basic idea: represent numbers in binary scientific notation

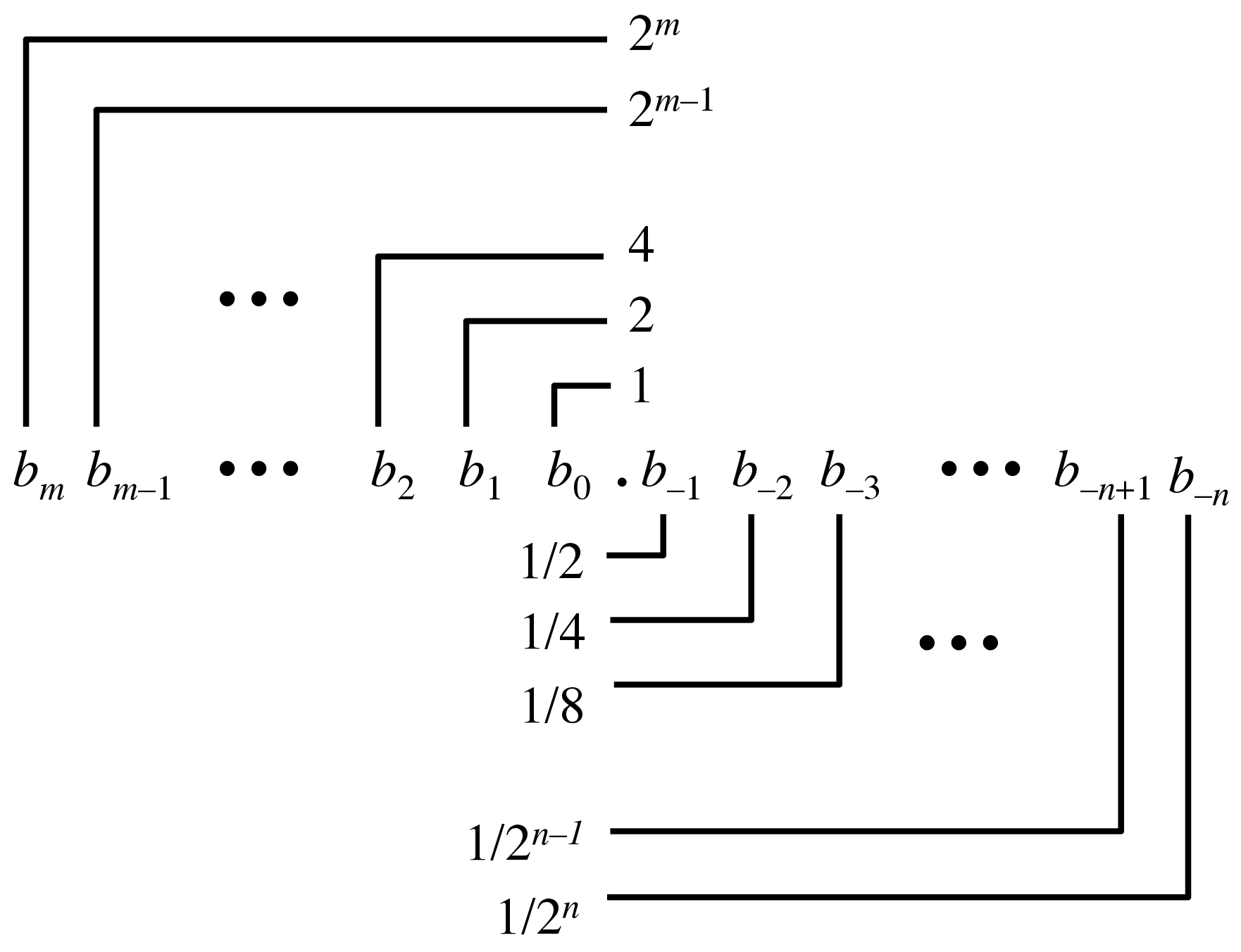

2 Fractional Binary Numbers

The idea of a binary fraction is part of the IEEE representation, so let's start with that.

- Example

0b10.1010 = \(1\times2^1 + 1\times2^{-1} + 1\times2^{-3} = 2.625\)
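The positional weights in the example can be checked mechanically. The following is a minimal sketch, not part of the original notes; the function name `binary_fraction_value` is a hypothetical helper, and it assumes the input is a string of 0s and 1s with at most one binary point.

```python
def binary_fraction_value(bits: str) -> float:
    """Evaluate a binary string such as '10.1010' as a sum of powers of 2."""
    whole, _, frac = bits.partition(".")
    value = 0.0
    # Digits left of the point weigh 2^0, 2^1, ... reading right to left.
    for i, digit in enumerate(reversed(whole)):
        value += int(digit) * 2 ** i
    # Digits right of the point weigh 2^-1, 2^-2, ... reading left to right.
    for i, digit in enumerate(frac, start=1):
        value += int(digit) * 2 ** -i
    return value


print(binary_fraction_value("10.1010"))  # 2.625
```

Every term is a power of 2, so the sum is exact here; a value such as 0.1 has no finite expansion of this form, which is why it cannot be stored exactly.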

3 IEEE floating point in 6 bits

- The value \(V\) of a floating point number is computed using the following formula: \(V = (-1)^s \times M \times 2^E\)

- sign: \(s\) indicates positive or negative (the sign bit when \(V = 0\) is a special case)

- significand: \(M\) is a fractional binary number, either between 1 and \(2 - \epsilon\) or between 0 and \(1 - \epsilon\)

- exponent: \(E\) weights the value by a power of 2 (possibly a negative power)

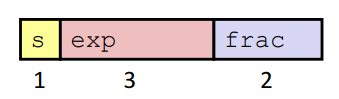

- We will choose to distribute our 6 bits to represent these quantities as follows:

- s (1 bit) encodes \(s\)

- exp (3 bits) encodes \(E\) in biased form

  - normally, \(E = exp - Bias\), where the \(k\) bits of exp are treated as an unsigned integer and \(Bias = 2^{k-1} - 1\)

- frac (2 bits) is the binary fraction \(0.f_{n-1}\cdots f_1f_0\), and the significand is \(M = 1 + f\)

3.1 Example

- Take the same six bits from before, 0b1 011 10. What value do they represent under this scheme?

  - s is 1, so the value is negative

  - exp is 3, so \(E = 3 - 3 = 0\)

  - frac is 0.5, so \(M = 1 + 0.5 = 1.5\)

  - \(V = -1.5 \times 2^0 = -1.5\)
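The same decoding steps can be written as a short sketch. The field widths (1 sign bit, 3 exp bits, 2 frac bits) are inferred from how the example groups its bits, and `decode_normalized` is a hypothetical name. The sketch only covers the normal case where \(E = exp - Bias\) and \(M = 1 + f\); it makes no attempt to handle exp patterns that are treated specially.

```python
EXP_BITS = 3   # assumed width of exp, inferred from the example
FRAC_BITS = 2  # assumed width of frac, inferred from the example
BIAS = 2 ** (EXP_BITS - 1) - 1  # 2^(k-1) - 1 = 3


def decode_normalized(bits: int) -> float:
    """Decode a 6-bit pattern laid out as s | exp | frac (normal case only)."""
    s = (bits >> (EXP_BITS + FRAC_BITS)) & 1
    exp = (bits >> FRAC_BITS) & ((1 << EXP_BITS) - 1)
    frac = bits & ((1 << FRAC_BITS) - 1)
    E = exp - BIAS                   # remove the bias
    M = 1 + frac / 2 ** FRAC_BITS    # implicit leading 1 plus the fraction
    return (-1) ** s * M * 2.0 ** E


print(decode_normalized(0b101110))  # -1.5
```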

3.2 Why the biased form for exponents?

- we want to represent very small and very large numbers, so we need the exponent to be signed

  - this suggests encoding exp as a two's complement integer

- we want floating-point operations to be fast in hardware

  - easier to compare floats if a larger unsigned value in exp means a bigger number

- clever trick: store exp as unsigned with implicit bias

  - in fact, by putting exp in between s and frac, the same hardware can do two's complement comparisons and floating-point comparisons

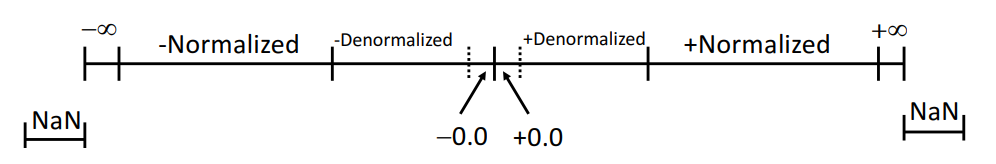
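One way to see the payoff of the biased exponent and the s, exp, frac ordering is to compare bit patterns directly. The sketch below is an illustration rather than part of the original notes: it uses real 32-bit single-precision floats (via Python's `struct` module) instead of the 6-bit format, and it only checks non-negative values. Negative floats use a sign-and-magnitude layout, so their patterns order in reverse. `float_bits` is a hypothetical helper name.

```python
import struct


def float_bits(x: float) -> int:
    """Return the 32-bit single-precision bit pattern of x as an unsigned int."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


values = [0.0, 1e-3, 0.5, 1.0, 1.5, 2.0, 1000.0]  # already in increasing order
patterns = [float_bits(v) for v in values]

# For non-negative floats, integer order of the bit patterns matches numeric order.
print(patterns == sorted(patterns))  # True
```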

3.3 Denormalized values

- When exp is 0, the representation switches from normalized to denormalized form

  - \(E = 1 - Bias\)

  - \(M = f\)

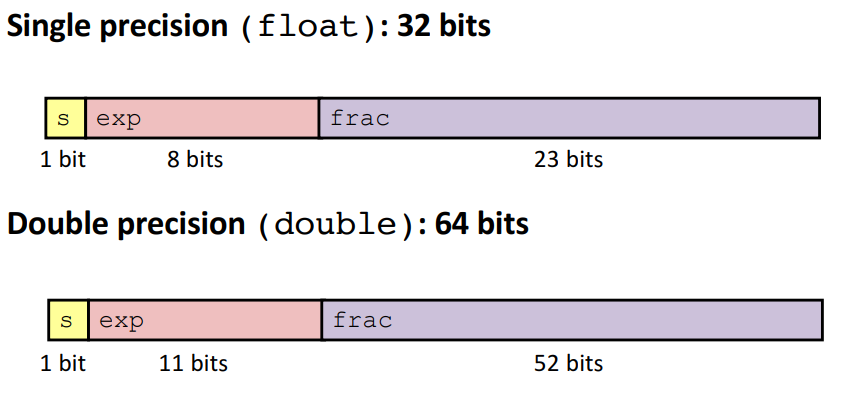
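A small decoder makes the effect of the denormalized rule visible. This is a sketch under assumptions: the 6-bit layout is taken to be 1 sign bit, 3 exp bits, and 2 frac bits, `decode6` is a hypothetical name, and the all-ones exp pattern (reserved for special values in IEEE formats) is rejected rather than decoded.

```python
EXP_BITS, FRAC_BITS = 3, 2          # assumed field widths
BIAS = 2 ** (EXP_BITS - 1) - 1      # 3


def decode6(bits: int) -> float:
    """Decode a 6-bit float (s | exp | frac), normalized or denormalized."""
    s = (bits >> (EXP_BITS + FRAC_BITS)) & 1
    exp = (bits >> FRAC_BITS) & ((1 << EXP_BITS) - 1)
    f = (bits & ((1 << FRAC_BITS) - 1)) / 2 ** FRAC_BITS
    if exp == (1 << EXP_BITS) - 1:
        # Special values are outside the scope of this sketch.
        raise ValueError("all-ones exp is not handled here")
    if exp == 0:
        E, M = 1 - BIAS, f          # denormalized: no implicit leading 1
    else:
        E, M = exp - BIAS, 1 + f    # normalized
    return (-1) ** s * M * 2.0 ** E


# The six smallest non-negative patterns: evenly spaced, no gap at exp = 1.
print([decode6(b) for b in range(6)])
# [0.0, 0.0625, 0.125, 0.1875, 0.25, 0.3125]
```

Using \(E = 1 - Bias\) rather than \(0 - Bias\) is what keeps the spacing uniform across the boundary: the largest denormalized value is 0.1875 and the smallest normalized value is 0.25, one step of 0.0625 apart.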

4 Real IEEE

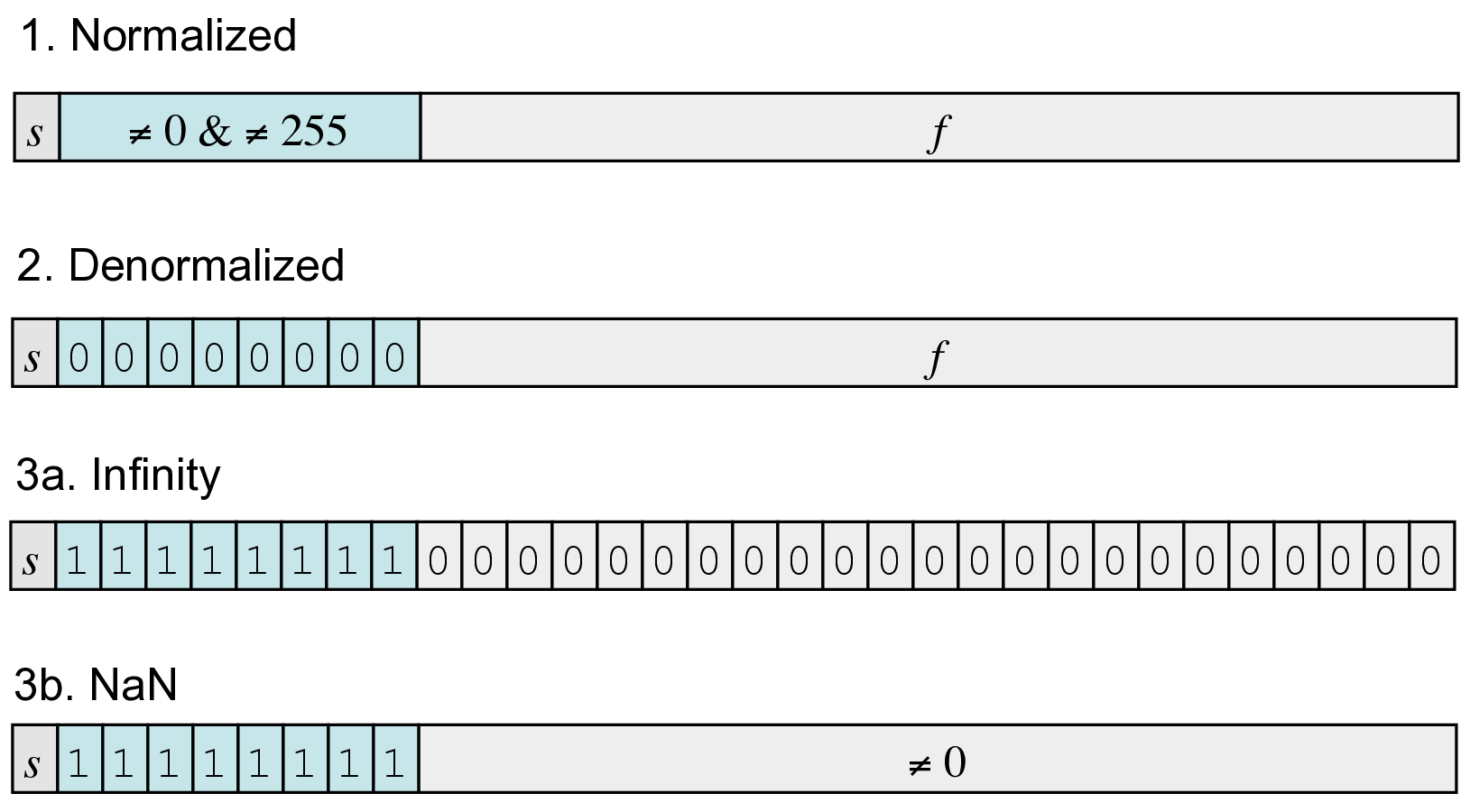

4.1 Special cases

5 Useful simulation

6 Arithmetic

- IEEE standard specifies four rounding modes

  - the typical default is round-to-even, which helps avoid statistical bias in practice by distributing ties between rounding up and rounding down

- In general, perform exact computation and then round to something representable with available bits

  - can underflow if closest representable value is 0

  - can overflow if \(E\) is too big to fit in exp (result is \(\pm\infty\))

  - rounding breaks associativity
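Each of these effects can be observed with ordinary double-precision floats. The sketch below assumes a Python implementation whose `float` is an IEEE double running in the default rounding mode, which is the usual case; the specific constants are chosen only for illustration.

```python
import math

# Round-to-even: 2^53 + 1 lies exactly halfway between two representable
# doubles, and the tie goes to the neighbor whose last significand bit is 0.
x = 2.0 ** 53
print(x + 1.0 == x)        # True  (tie rounds down to 2^53)
print(x + 3.0 == x + 4.0)  # True  (tie rounds up to 2^53 + 4)

# Underflow: half of the smallest positive double rounds to 0.
tiny = 5e-324 / 2
print(tiny == 0.0)         # True

# Overflow: the exponent no longer fits, so the result is infinity.
big = 1e308 * 10
print(math.isinf(big))     # True

# Rounding after every operation breaks associativity.
left = (0.1 + 0.2) + 0.3
right = 0.1 + (0.2 + 0.3)
print(left == right)       # False
```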