CS 208 w20 lecture 26 outline

1 Definitions

- concurrent computing: when the execution of multiple computations (or processes) overlap

- we need to correctly control access to shared resources

- parallel computing: when the execution of multiple computations (or processes) occur simultaneously

- using additional resources to produce an answer faster

- concurrency primarily about effective coordination, parallelism primarily about efficient division of labor

2 Why Parallelism?

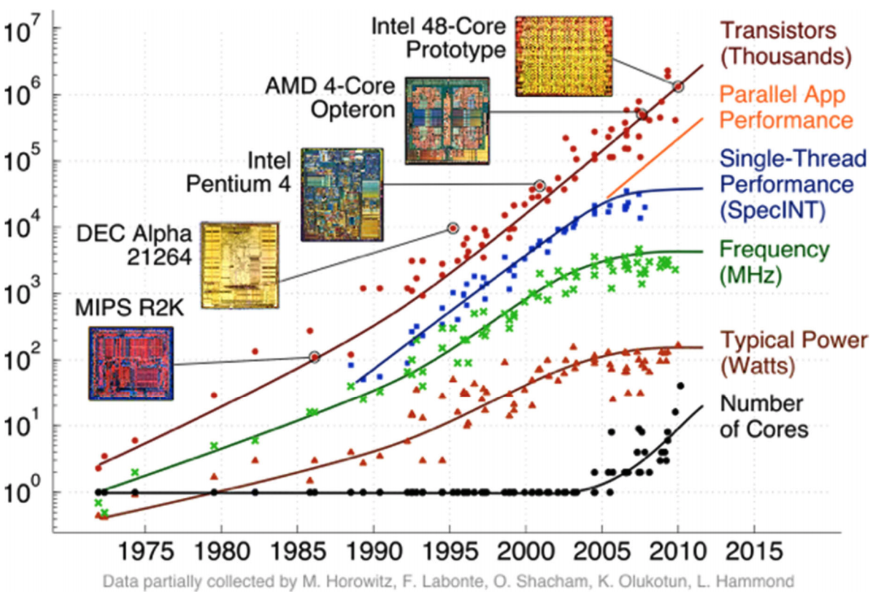

- running into physical limits:

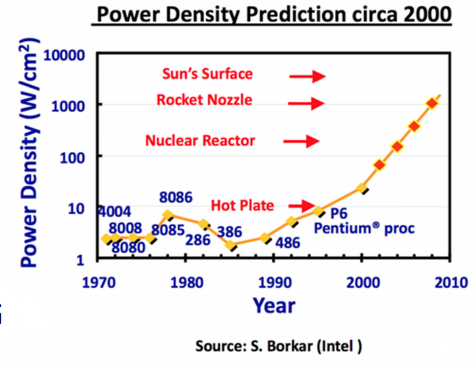

- power densities over time roughly constant from 1958 to late 1990s

- grow rapidly starting in late 1990s

- Since 2000, transistors too small to shrink further (just a few molecules thick)

- power densities over time roughly constant from 1958 to late 1990s

- heat death

- power scales with frequency

- processors stopped around 4 GHz

- solution: slap multiple processors on a single chip

- continues the trend of more and more transitors, but…

- requires new programming approach

3 Limits of Parallelism

- is it faster to pick up a deck of cards with two people instead of 1?

- how about 100 instead of 50?

- there are a finite number of independent tasks, putting a limit on how much can be parallelized

- some tasks need to wait on others, making them inherently sequential

- the amount of speedup that can be achieved through parallelism is limited by the non-parallel portion

4 Threads

- previously, we defined a process as an instance of a running program

- a thread is a single execution sequence that represents the minimal unit of scheduling

- one process may contain multiple threads

- four reasons to use threads:

- program structure: expressing logically concurrent tasks

- each thing a process is doing gets its own thread (e.g., drawing the UI, handling user input, fetching network data)

- responsiveness: shifting work to run in the background

- we want an application to continue responding to input in the middle of an operation

- when saving a file, write contents to a buffer and return control, leaving a background thread to actually write it to disk

- performance: exploiting multiple processors

- performance: managing I/O devices

- program structure: expressing logically concurrent tasks

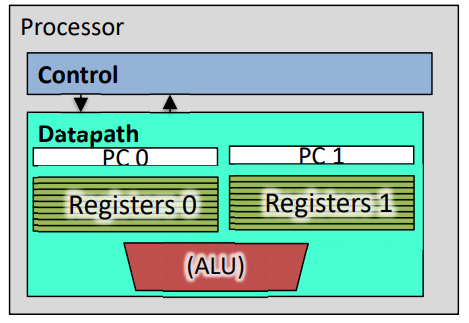

4.1 Hardware Support for Multithreading

- two copies of PC and Registers inside processor hardware:

- looks like two processors to software

- control logic decides which thread to execute an instruction from next

4.2 Threading Models

- POSIX Threads (pthread)

#include <pthread.h>, low-level interface giving fine-grained control

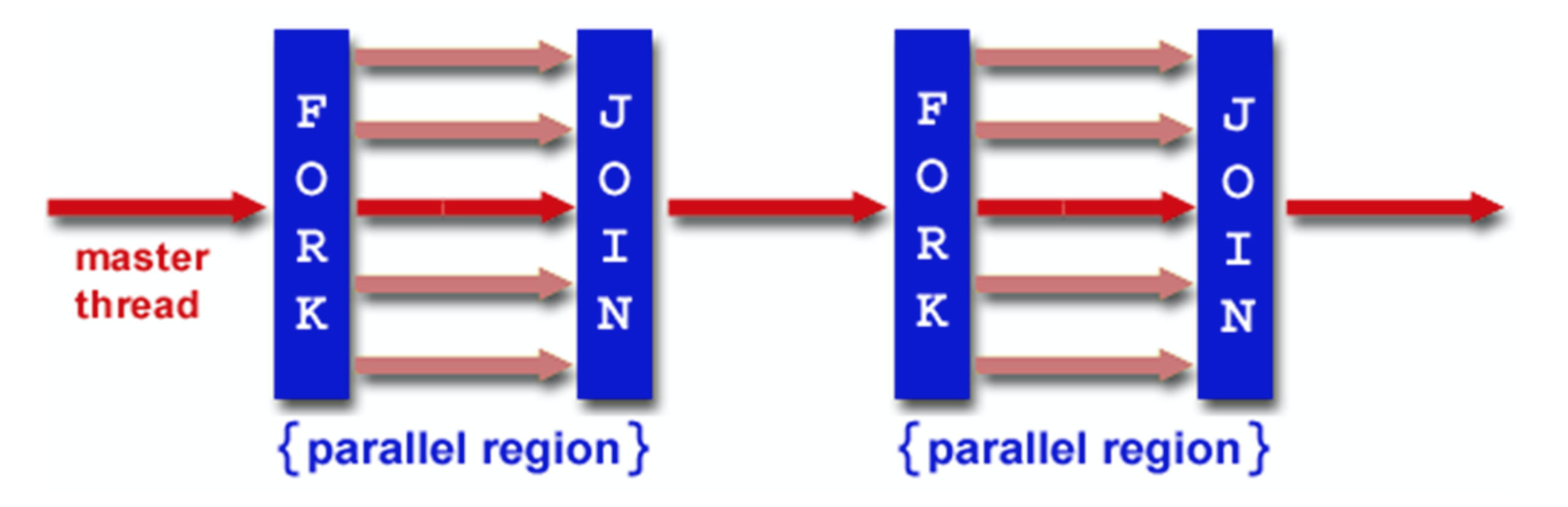

- Fork-Join model (OpenMP)

#include <omp.h>, higher-level interface for fork-join approach- pthreads can do fork-join manually

5 Instruction-Level Parallelism

- steps to execute the next x86-64 instruction:

- fetch the instruction

400540: 48 01 fe - decode the instruction

addq %rdi, %rsi - gather data values read

R[%rdi], R[%rsi] - perform operation calc

R[%rdi] + R[%rsi] - store result save into

%rsi

- fetch the instruction

- each of these steps is roughly associated with a part of the processor

- each part can work independently

5.1 By Analogy to Doing Laundry

- washer, dryer, folding, and putting away all take 30 minutes

- full task takes 2 hours

- four people doing laundry in sequence takes 8 hours

- but the washer and dryer sit idle for 1.5 hours at a time

- we can start the second load as soon as the first load is done with the washer

- pipelined laundry takes 3.5 hours for 4 loads

- does not improve the latency of a single load (still takes 2 hours), but improves throughput

- processors pipeline instruction execution steps in a similar way

6 Why Concurrency?

- a multi-threaded program's execution depends on how instructions are interleaved

- threads sharing data may be affected by race conditions (or data races)

| Thread A | Thread B |

|---|---|

x = 1 |

x = 2 |

- What are the final possible values of

x? Result depends on which thread "wins." - Suppose

y=12initially:

| Thread A | Thread B |

|---|---|

x = y + 1 |

y = y * 2 |

- What are the final possible values of

x? Either 13 or 25 depending on which thread goes first - Suppose

x = 0initially:

| Thread A | Thread B |

|---|---|

x = x + 1 |

x = x + 2 |

- What are the final possible values of

x? Remember that register values are preserved for each thread:

| Interleaving 1 | Interleaving 2 | Interleaving 3 | |||

|---|---|---|---|---|---|

load x into r1 |

load x into r1 |

load x into r1 |

|||

add 1 to r1 |

load x into r1 |

load x into r1 |

|||

store r1 in x |

add 1 to r1 |

add 1 to r1 |

|||

load x into r1 |

add 2 to r1 |

add 2 to r1 |

|||

add 2 to r1 |

store r1 in x |

store r1 in x |

|||

store r1 in x |

store r1 in x |

store r1 in x |

- interleaving 1 results in

x == 3 - interleaving 2 results in

x == 2 - interleaving 3 results in

x == 1

7 Synchronization

- prevent bad interleavings of read/write operations by forcing threads to coordinate their accesses

- identify critical section of code where only one thread should operate at a time

- pseudocode:

check lock // loop/idle here if locked acquire lock CRITICAL SECTION (e.g., change shared variables) release lock

- problem:

| Thread A | Thread B |

|---|---|

| read lock (check) | |

| read lock (check) | |

| conditional jump | |

| conditional jump | |

| write lock (acquire) | |

| write lock (acquire) | |

| CRITICAL SECTION… | |

| CRITICAL SECTION… |

- we need hardware support to make checking and acquiring the lock an atomic operation (impossible to interrupt)

- we want to identify the minimal critical section to maintain parallelism