CS 332 s20 — Thread API and Implementation

Table of Contents

1 Video Lecture

Please watch the video lecture on the material outlined below: https://carleton.hosted.panopto.com/Panopto/Pages/Viewer.aspx?id=9e07e9c3-bafc-459f-9ed0-aba7012e9c58.

2 Multi-threaded Hello, World

2.1 Simple Thread API

| call | description |

|---|---|

void thread_create(thread, func, arg) |

Create a new thread, storing information about it in thread. Concurrently with the calling thread, thread executes the function func with the argument arg |

void thread_yield() |

The call thread voluntarily gives up the processor to let some other thread(s) run. The scheduler can resume running the calling thread whenever it chooses to do so. |

int thread_join(thread) |

Wait for thread to finish if it has not already done so; then return the value passed to thread_exit by that thread. Note that thread_join may be called only once for each thread. |

void thread_exit(ret) |

Finish the current thread. Store the value ret in the current thread's data structure. If another thread is already wainting in a vall to thread_join, resume it. |

- thread API analogous to asynchronous procedure call

thread_createis similar tofork/exec,thread_joinis similar towait

2.2 Code

#include <stdio.h> #include "thread.h" static void go(int n); #define NTHREADS 10 static thread_t threads[NTHREADS]; int main(int argc, char **argv) { int i; long exitValue; for (i = 0; i < NTHREADS; i++){ thread_create(&(threads[i]), &go, i); } for (i = 0; i < NTHREADS; i++){ exitValue = thread_join(threads[i]); printf("Thread %d returned with %ld\n", i, exitValue); } printf("Main thread done.\n"); return 0; } void go(int n) { printf("Hello from thread %d\n", n); thread_exit(100 + n); // Not reached }

thread_tis a struct with thread-specific metadata- the second argument,

gois a function pointer—where the newly created thread show begin execution - the third argument,

i, is passed to the function

2.3 Output

$ ./threadHello Hello from thread 0 Hello from thread 1 Thread 0 returned 100 Hello from thread 3 Hello from thread 4 Thread 1 returned 101 Hello from thread 5 Hello from thread 2 Hello from thread 6 Hello from thread 8 Hello from thread 7 Hello from thread 9 Thread 2 returned 102 Thread 3 returned 103 Thread 4 returned 104 Thread 5 returned 105 Thread 6 returned 106 Thread 7 returned 107 Thread 8 returned 108 Thread 9 returned 109

- why might the "Hello" message from thread 2 print after the "Hello" message from thread 5, even though thread 2 was created before thread 5?

- creating and scheduling are separate operations: only assumption is that threads run on their own virtual processor with unpredictable speed, any interleaving is possible

- why must the "Thread returned" message from thread 2 print before the Thread returned message from thread 5?

- the threads can finish in any order, but the main thread waits for them in the order they were created

- what is the minimum and maximum number of threads that could exist when thread 5 prints "Hello"?

- minimum is 2 threads: thread 5 and the main thread

- maximum is 11 threads: all 10 could have been created, while 5 is the first to run

2.4 Links to source code

3 Reading: Thread API

Read Chapter 27 (p. 319–327) of the OSTEP book. It goes over the full-fledged POSIX thread API and introduces two synchronization primitives that will be central to our discussion of concurrency: locks and condition variables.

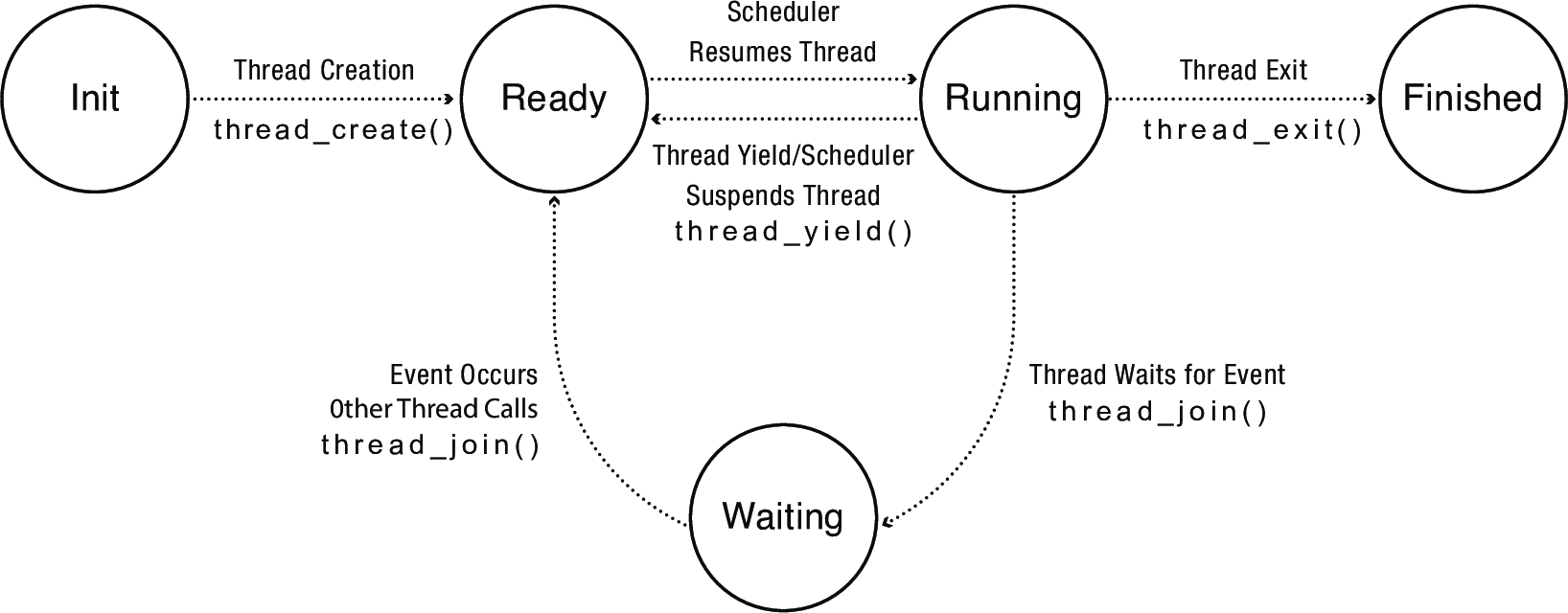

4 Thread Life Cycle

| State of Thread | Location of Thread Control Block (TCB) | Location of Registers |

|---|---|---|

| INIT | Being created | TCB |

| READY | Ready list | TCB |

| RUNNING | Running list | Processor |

| WAITING | Sychronization variable's waiting list | TCB |

| FINISHED | Finished list, then deleted | TCB or deleted |

Note: not all operating systems keep a separate ready and running lists. In Linux, the RUNNING thread is whichever thread is at the front of the ready list.

5 Where Do Threads Come From?

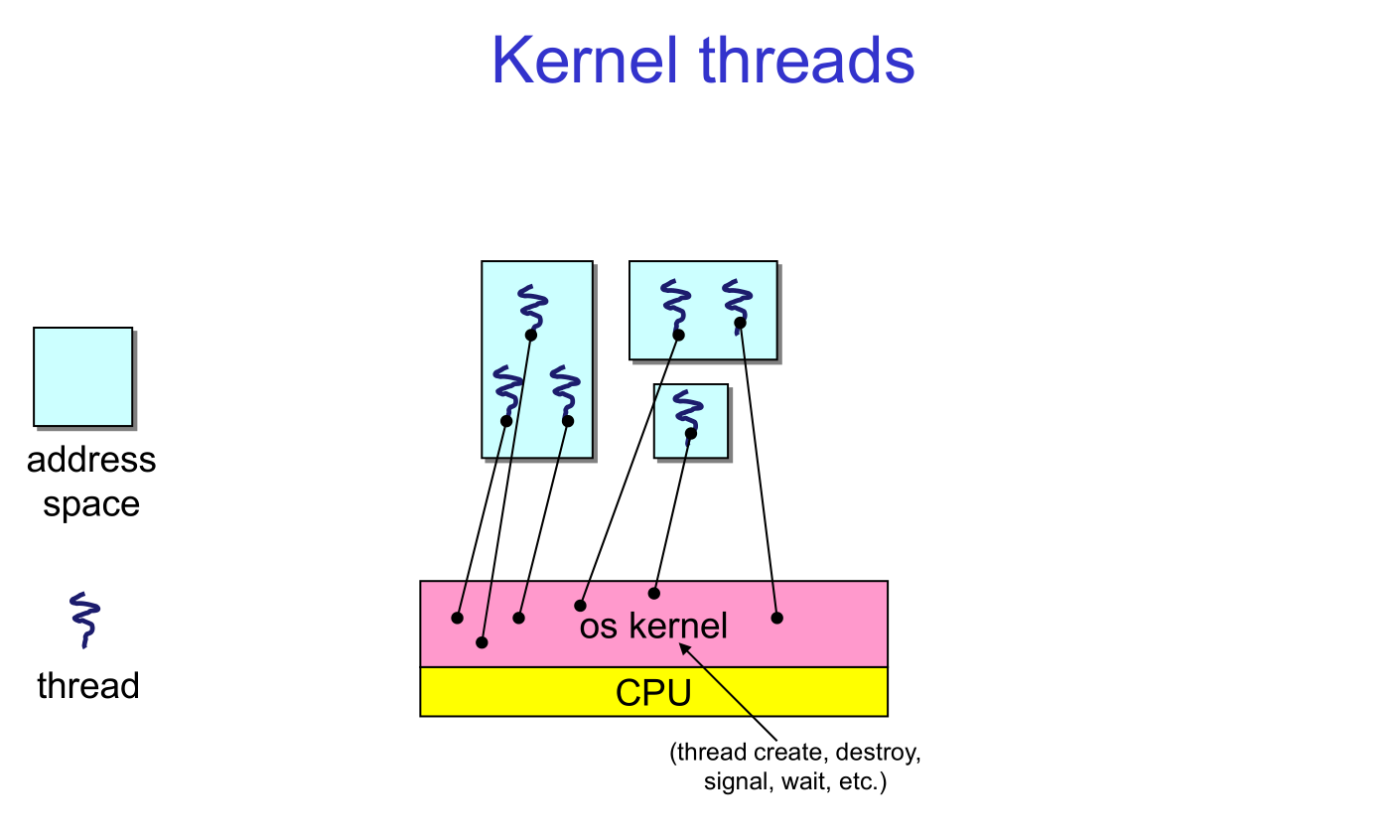

5.1 Kernel Threads

Natural answer: the OS is responsible for creating/managing threads

- For example, the kernel call to create a new thread would

- allocate an execution stack within the process address space

- create and initialize a Thread Control Block

- stack pointer, program counter, register values

- stick it on the ready queue

- We call these kernel threads

- There is a thread name space

- Thread ids (TIDs)

- TIDs are integers (surprise!)

- Why "must" the kernel be responsible for creating/managing threads?

- it's the scheduler

- OS now manages threads and processes / address spaces

- all thread operations are implemented in the kernel

- OS schedules all of the threads in a system

- if one thread in a process blocks (e.g., on I/O), the OS knows about it, and can run other threads from that process

- possible to overlap I/O and computation inside a process

- that is, under programmer control

- Kernel threads are cheaper than processes

- less state to allocate and initialize

- But, they're still pretty expensive for fine-grained use

- orders of magnitude more expensive than a procedure call

- thread operations are all system calls

- context switch

- argument checks

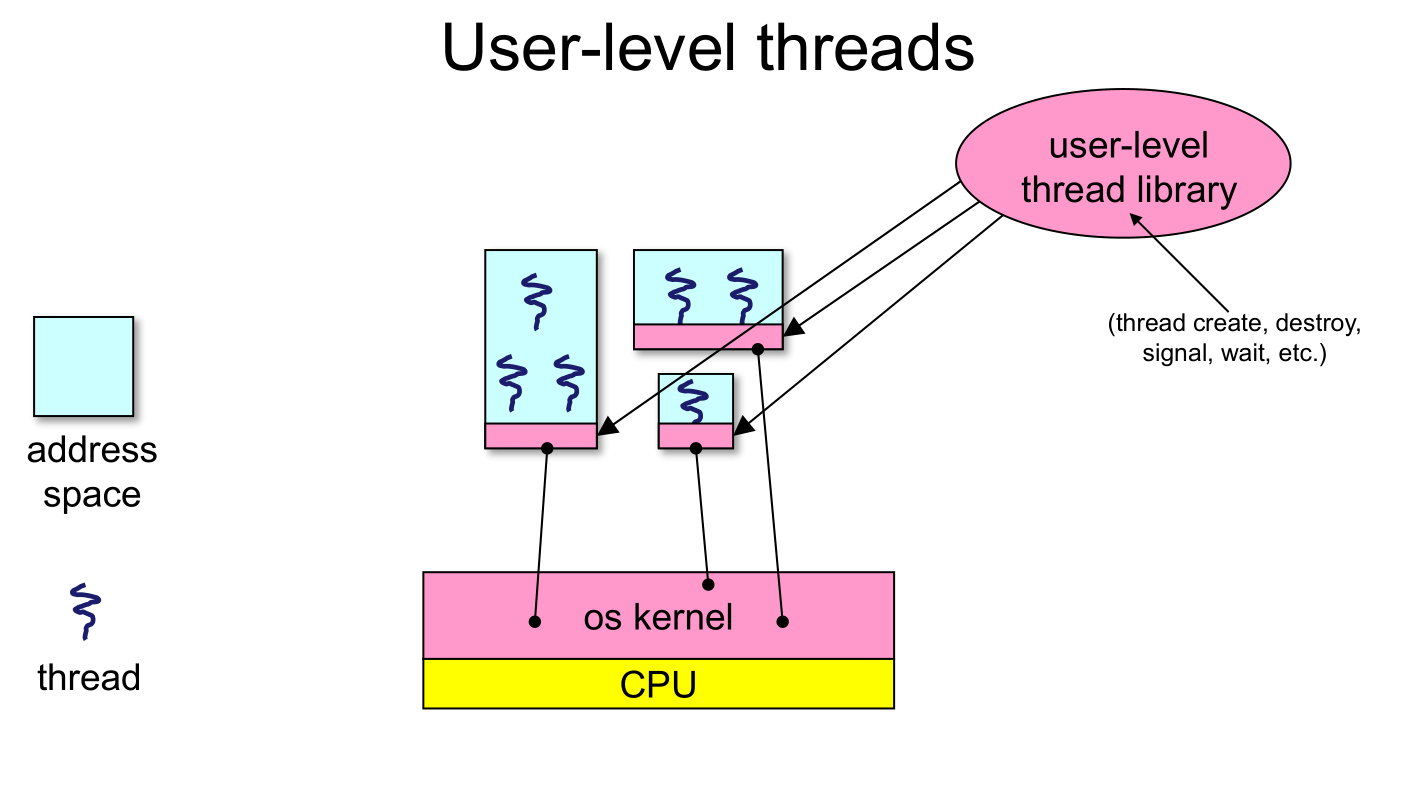

5.2 User-level Threads

- There is an alternative to kernel threads

- Note that thread management isn't doing anything that feels privileged

- Threads can also be managed at the user level (that is, entirely from within the process)

- a library linked into the program manages the threads

- because threads share the same address space, the thread manager doesn't need to manipulate address spaces (which only the kernel can do)

- threads differ (roughly) only in hardware contexts (PC, SP, registers), which can be manipulated by user-level code

- the thread package multiplexes user-level threads on top of kernel thread(s)

- each kernel thread is treated as a "virtual processor"

- we call these user-level threads

- a library linked into the program manages the threads

- User-level threads are small and fast

- managed entirely by user-level library

- e.g., pthreads (

libpthreads.a)

- e.g., pthreads (

- each thread is represented simply by a PC, registers, a stack, and a small thread control block (TCB)

- creating a thread, switching between threads, and synchronizing threads are done via procedure calls

- no kernel involvement is necessary!

- user-level thread operations can be 10-100x faster than kernel threads as a result

- managed entirely by user-level library

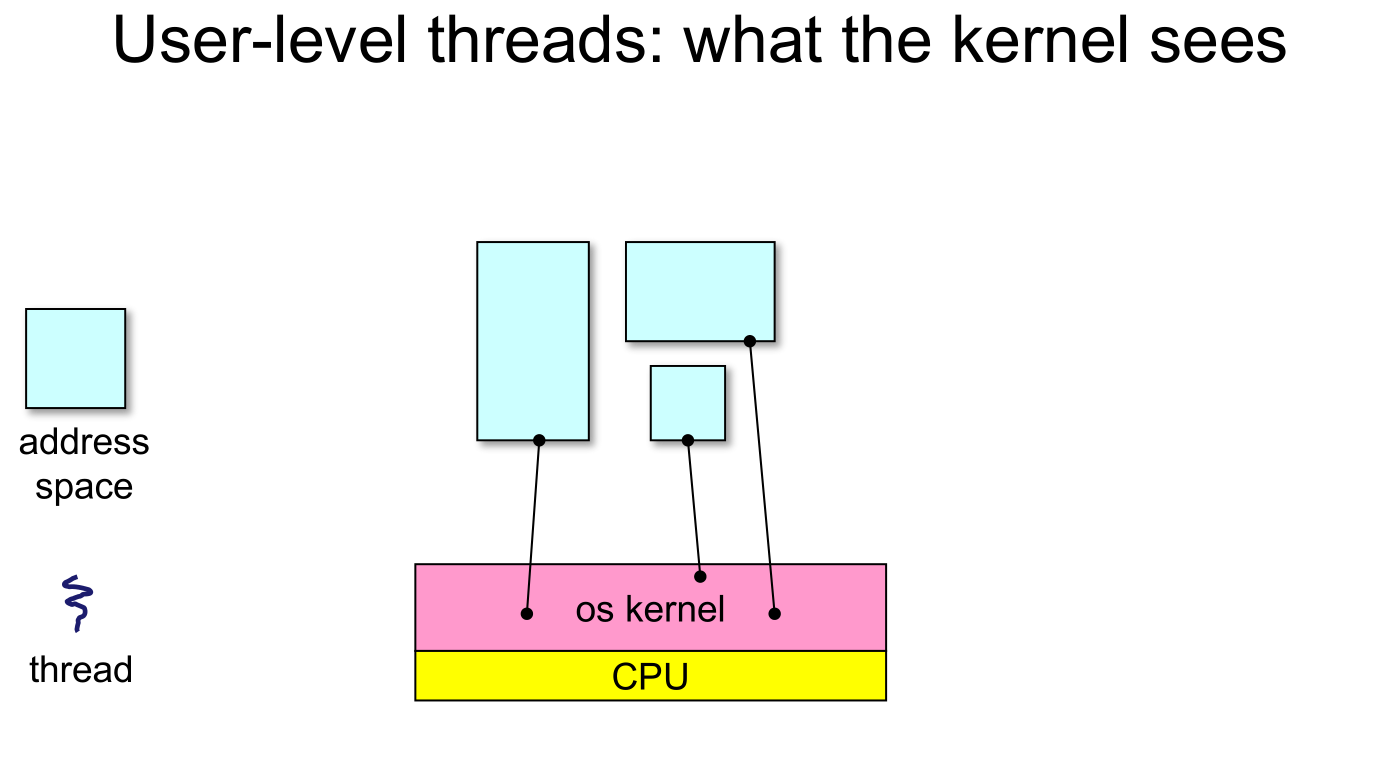

- The OS schedules the kernel thread

- The kernel thread executes user code, including the thread support library and its associated thread scheduler

- The thread scheduler determines when a user-level thread runs

- it uses queues to keep track of what threads are doing: run, ready, wait

- just like the OS and processes

- but, implemented at user-level as a library

- it uses queues to keep track of what threads are doing: run, ready, wait

5.2.1 User-level Context Switch

- Very simple for user-level threads:

- save context of currently running thread

- push CPU state onto thread stack

- restore context of the next thread

- pop CPU state from next thread’s stack

- return as the new thread

- execution resumes at PC of next thread

- Note: no changes to memory mapping required!

- save context of currently running thread

- This is all done by assembly language

- it works at the level of the procedure calling convention

- thus, it cannot be implemented using procedure calls

- it works at the level of the procedure calling convention

How to keep a user-level thread from hogging the CPU?

- Strategy 1: force everyone to cooperate

- a thread willingly gives up the CPU by calling

yield() yield()calls into the scheduler, which context switches to another ready thread- what happens if a thread never calls

yield()?

- a thread willingly gives up the CPU by calling

- Strategy 2: use preemption

- scheduler requests that a timer interrupt be delivered by the OS periodically

- usually delivered as a UNIX signal

- signals are just like software interrupts, but delivered to user-level by the OS instead of delivered to OS by hardware

- at each timer interrupt, scheduler gains control and context switches as appropriate

- scheduler requests that a timer interrupt be delivered by the OS periodically

5.2.2 User-level Thread I/O

- When a user-level thread does I/O, the kernel thread "powering" it is lost for the duration of the (synchronous) I/O operation!

- The kernel thread blocks in the OS, as always

- It maroons with it the state of the user-level thread

- Could have one kernel thread "powering" each user-level thread

- "common case" operations (e.g., synchronization) would be quick

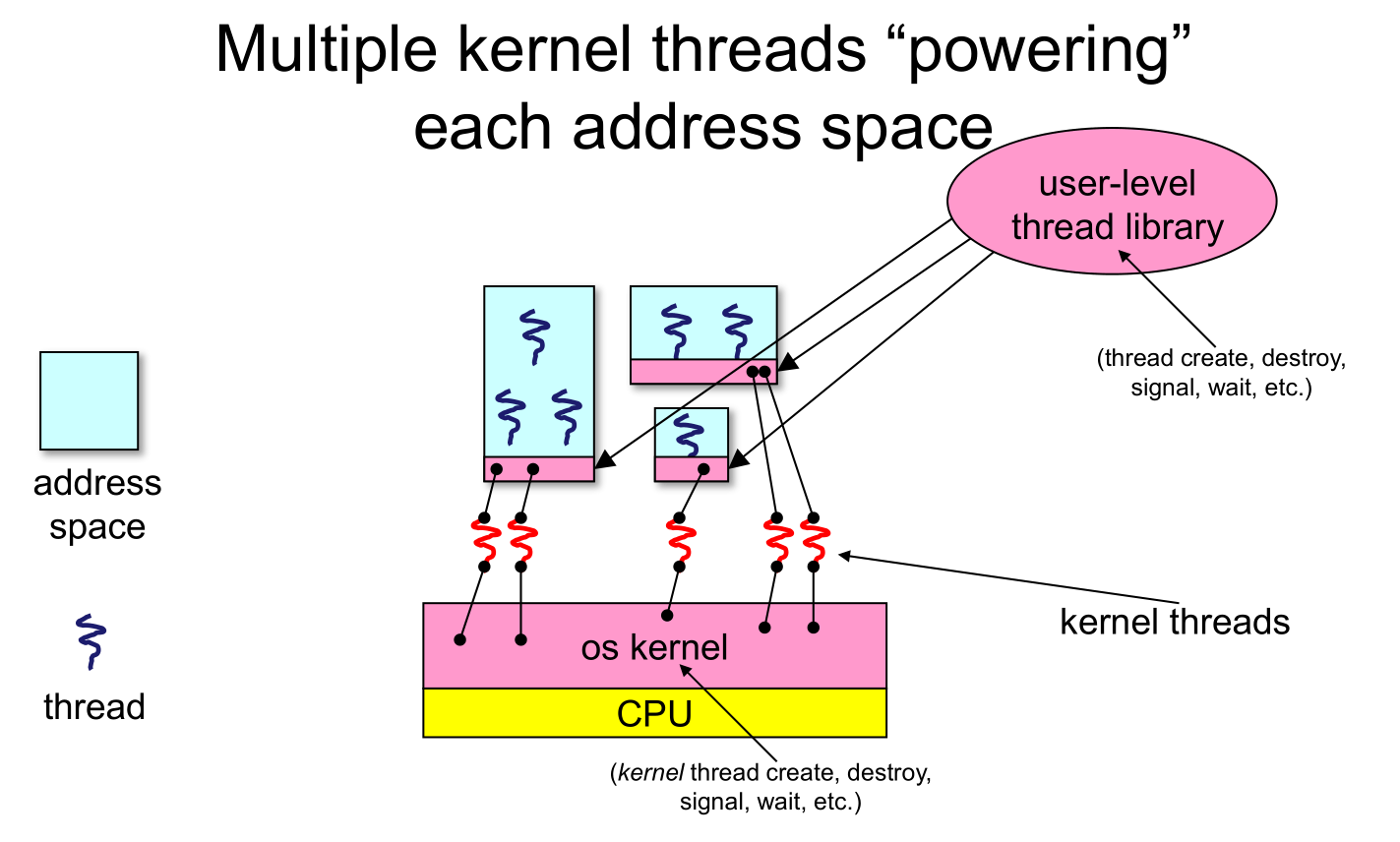

- Could have a limited-size “pool” of kernel threads "powering" all the user-level threads in the address space

- the kernel will be scheduling these threads, obliviously to what's going on at user-level

- What if the kernel preempts a thread holding a lock?

- Effective coordination of kernel decisions and user-level threads requires OS-to-user-level communication

- OS notifies user-level that it has suspended a kernel thread

- This is called scheduler activations

- a research paper from the 1990s with huge effect on practice

- each process can request one or more kernel threads

- process is given responsibility for mapping user-level threads onto kernel threads

- kernel promises to notify user-level before it suspends or destroys a kernel thread

6 Homework

- Week 3 quiz has been posted. It is due 9pm, Wednesday April 29.

- Work on lab 2. Post in the lab 2 check-in forum. Your post should be something surprising or difficult that you've learned that you think would help others, a point of confusion, something you're stuck on, a deeper question sparked by the lab, or a response to someone else's post.

Footnotes:

A lock is something a thread acquires before entering a critical section of code (protecting that section from bad interleaving), whereas a condition variable is something a thread waits on, blocking until it another thread sends a single on that variable.

If a thread holds a lock while waiting, and another thread needs to acquire that lock before it will signal the condition variable, then both threads will be waiting forever. This is called deadlock.