CS 332 w22 — Beyond Physical Memory

Table of Contents

- 1. (near) Infinite Memory

- 2. Demand Paging

- 3. Memory-Mapped Files

1 (near) Infinite Memory

- Can we provide the illusion of near infinite memory in limited physical memory?

- What's a source of storage that's much bigger and cheaper than memory? The disk!

- We will treat memory as a cache for the disk

- This will enable

- Demand-paged virtual memory, a general technique

- Memory-mapped files, a technique for efficient I/O

- Address translation will be a crucial tool, allowing the kernel to intervene when a program accesses selected addresses

2 Demand Paging

- Illusion of (nearly) infinite memory, available to every process

- Multiplex virtual pages onto a limited number of physical page frames

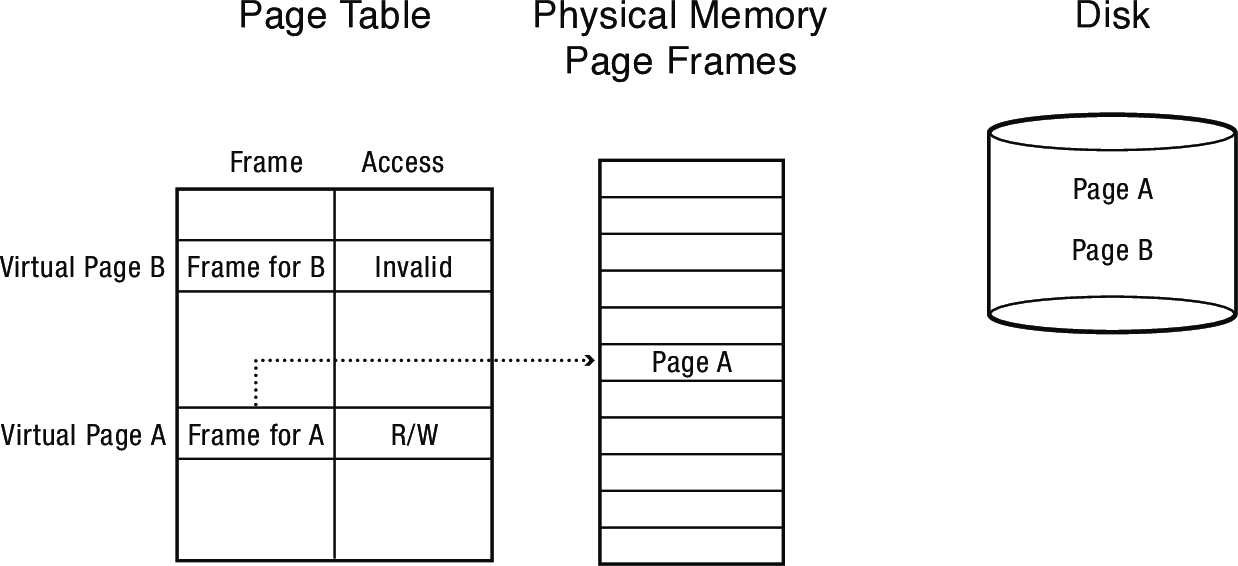

- Pages can be either

- resident (in physical memory, valid page table entry)

- non-resident (on disk, invalid page table entry)

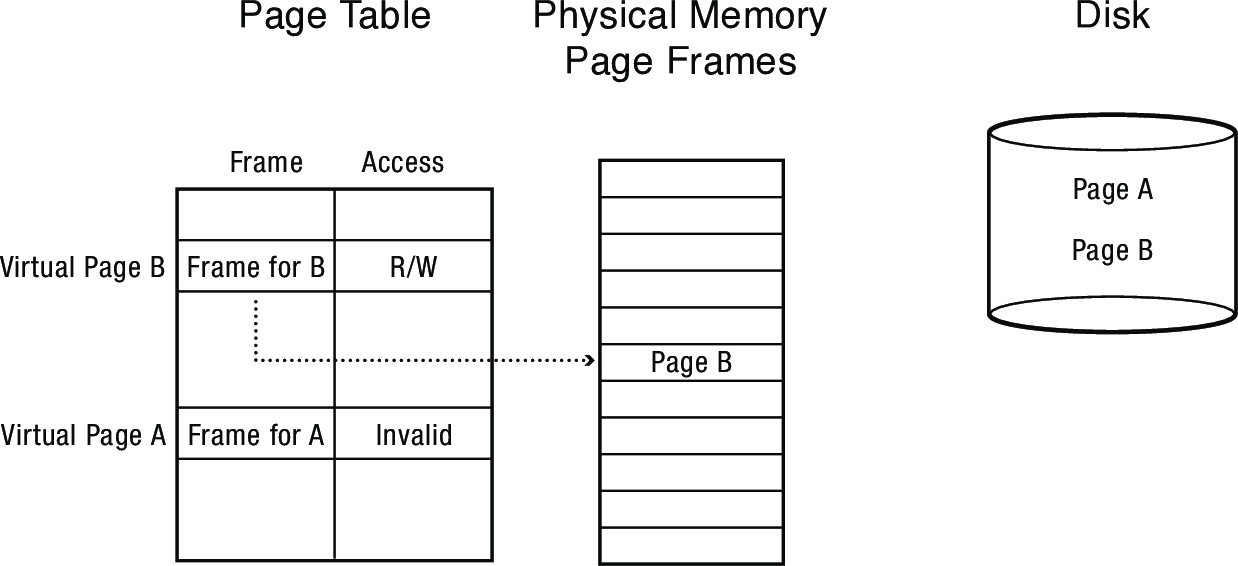

- On reference to non-resident page, copy into memory, replacing some resident page

- From the same process, or a different process

- A generalization of the on-demand paging from earlier

- Could start running a program after initializing the first code page, loading others in the background or as needed

- Space on disk reserved for non-resident pages is called swap space

What happens when a process accesses virtual page B?

2.1 How does the kernel provide the illusion that all pages are resident?

- TLB miss

- Page table lookup

- Page fault (page invalid in page table)

- Trap to kernel

- Locate page on disk

- Allocate page frame

- Evict page if needed

- Initiate disk block read into page frame

- Disk interrupt when read complete

- Mark page as valid

- Resume process at faulting instruction

- TLB miss (entry still not present in TLB)

- Page table lookup to fetch translation

- Execute instruction

2.2 Where are non-resident pages stored on disk?

- Option: Reuse page table entry

- If resident, entry contains the physical page frame number

- If non-resident, entry contains a disk block reference

- Option: Use file system

- Maintain a per-segment file in the file system; the offset within the file corresponds to the offset within the segment

2.3 How do we find a free page frame?

- If there's an unused page, use it

- Otherwise, select old page to evict

- Find all page table entries that refer to old page

- If page frame is shared (use a core map, linking physical page → virtual pages)

- Set each page table entry to invalid

- Remove any TLB entries (on any core)

- Why not: remove TLB entries, then set to invalid?¹

- Write changes on page back to disk, if necessary

- Why not: write changes to disk, then set to invalid?²

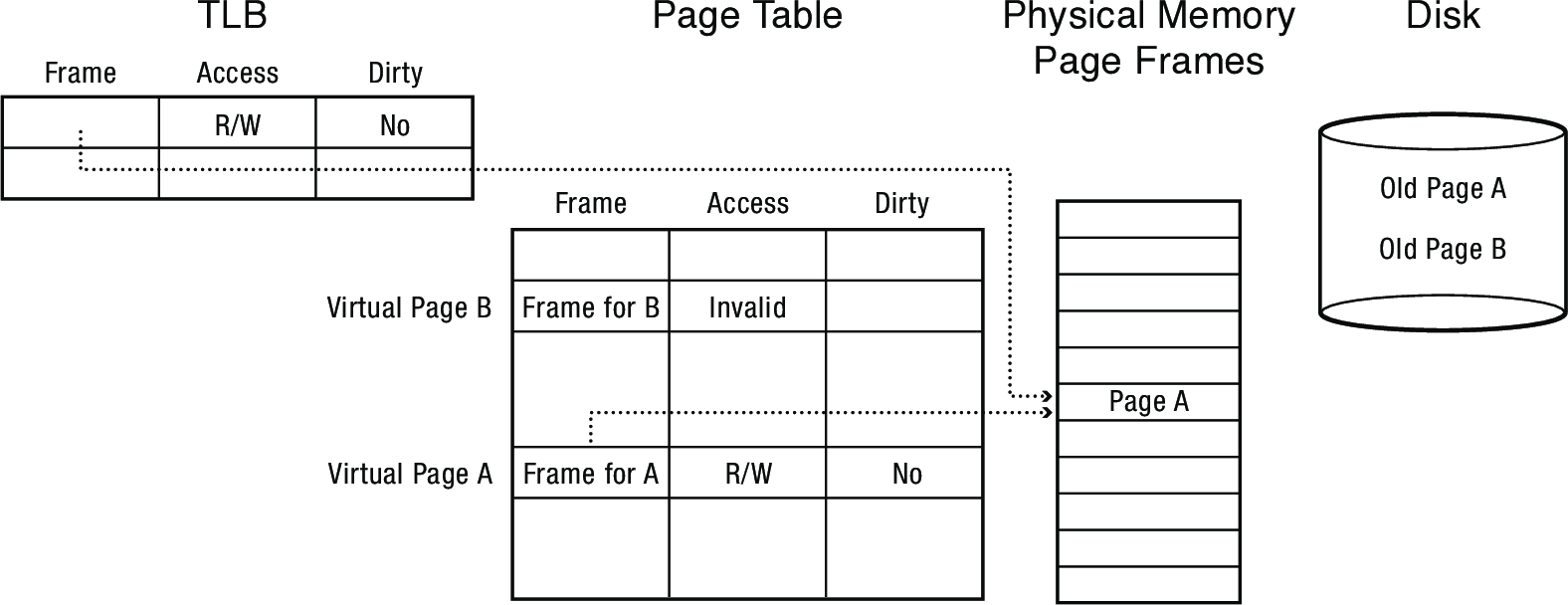

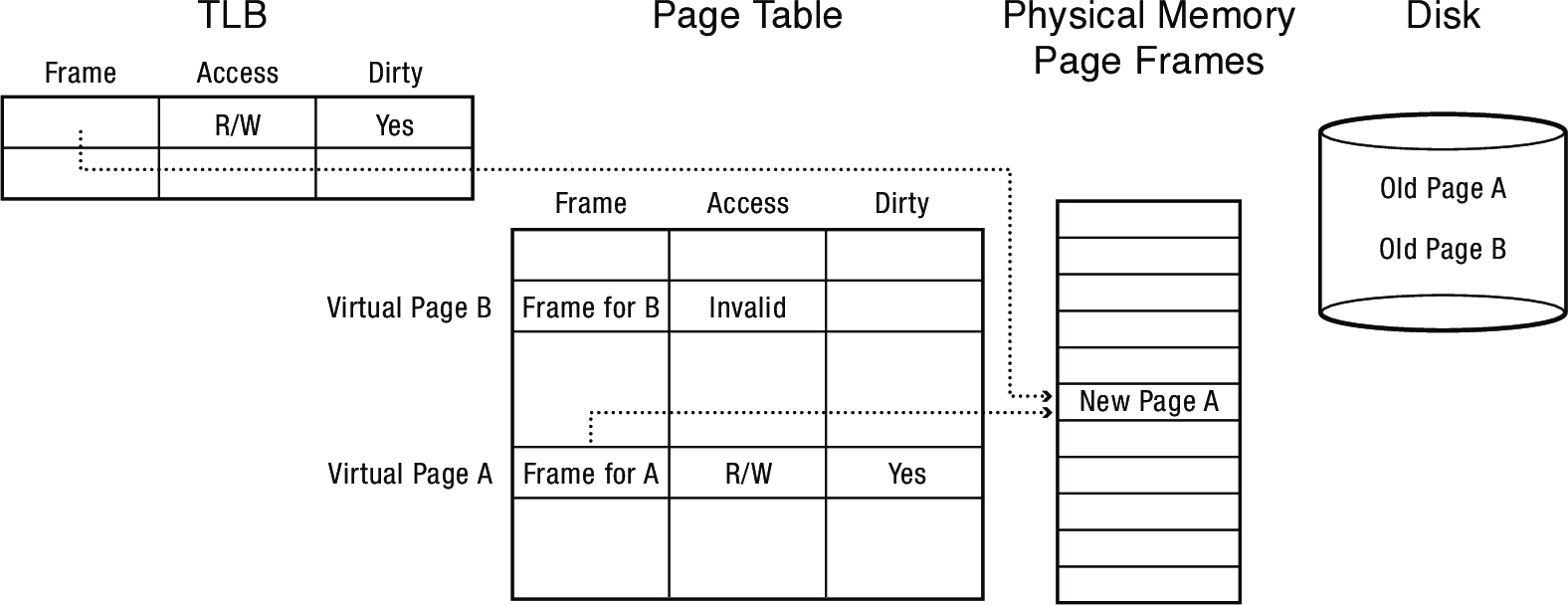

2.4 Which pages have been modified (must be written back to disk)?

- Every page table entry has some bookkeeping

- Has page been modified?

- Safe, but inefficient: treat every page as modified

- Better: dirty bit

- Set by hardware on store instruction

- In both TLB and page table entry

- Has page been recently used?

- Bookkeeping bits can be reset by the OS kernel

- When changes to page are flushed to disk

- Memory is a write-back cache for the pages on disk

2.5 What policy should we use for choosing which page to evict?

- Random

- For first-level caches, speed may be paramount, and cost of making a wrong choice is small

- Avoids any bookkeeping overhead

- Unlikely to make the worst choice, but lack of predictable behavior could prevent optimization

- FIFO

- Treat cache entries as a queue, always evict the oldest one

- MIN

- Replace the cache entry that will not be used for the longest time into the future

- Optimality proof based on exchange: evicting an entry that will be used sooner would trigger an earlier cache miss

- Least Recently Used (LRU)

- Replace the cache entry that has not been used for the longest time in the past

- Approximation of MIN

- Least Frequently Used (LFU)

- Replace the cache entry used the least often (in the recent past)

2.5.1 Approximating LRU

- Hardware does not track exact LRU information

- Would be prohibitively expensive (e.g., updating a linked list on every memory access)

- Instead, tracks whether a page has been recently used

- Use bit

- Set by hardware in page table entry when the entry is brought into the TLB

- How accurately do we need to track the least recently/least frequently used page?

- If miss cost is low, any approximation will do

- Clock algorithm

- OS periodically sweeps through all pages, reclaiming pages whose use bit is clear and clearing the use bit of the rest

- Nth chance: not recently used

- Count number of sweeps since last use, reclaim page once count reaches N

3 Memory-Mapped Files

- Explicit read/write system calls

- Data copied to user process using system call

- Application operates on data

- Data copied back to kernel using system call

- Memory-mapped files

- Open file as a memory segment

- Program uses load/store instructions on segment memory, implicitly operating on the file

- Page fault if portion of file is not yet in memory

- Kernel brings missing blocks into memory, resumes process

- Advantages

- Programming simplicity, especially for large files

- Operate directly on file, instead of copy in/copy out

- Pipelining

- Process can start working before all the pages are populated

- Interprocess communication

- Shared memory segment will be more efficient than a shared temporary file


Linux system calls (declared in `<sys/mman.h>`):

```c
void *mmap(void *addr, size_t length, int prot, int flags, int fd, off_t offset);
int munmap(void *addr, size_t length);
```

- Memory-mapped file is a (logical) segment

- Per segment access control (read-only, read-write)

- File pages brought in on demand

- Using page fault handler

- Modifications written back to disk on eviction or file close

- Using per-page dirty bit to determine if writing is necessary